Deploy a Kubernetes cluster using Terraform and Ansible on AWS: Use EC2 or EKS

When I was first learning Kubernetes, I always struggled to get a cluster up quickly for my practice labs. There are many online alternatives for this, but I felt that being able to spin up a lab environment of my own would be better for my learning. That's when I came up with this process to spin up a basic Kubernetes cluster easily and quickly. The process is also a good way to learn how Terraform and Ansible are used in such scenarios. With modifications to the steps, it can also be used to launch actual clusters in projects.

For this post, I will go through the process of spinning up a basic Kubernetes cluster using Terraform on AWS. I will cover two ways to launch the cluster:

- Using Terraform to launch EC2 instances on AWS and Ansible to bootstrap and start a Kubernetes cluster

- Using Terraform to launch an AWS EKS cluster

The GitHub repo for this post can be found Here

Pre Requisites

Before I start the walkthrough, there are some prerequisites which need to be met if you want to follow along or install your own cluster:

- Some Kubernetes, Terraform and Ansible knowledge. This will help understand the workings of the process

- Jenkins server to run Jenkins jobs

- An AWS account. The EKS cluster may incur some charges so make sure to monitor that

- Terraform installed

- Jenkins Knowledge

Apart from this, I will explain each of the steps in detail, so if you have a basic conceptual understanding of the topics mentioned above, you should be able to follow along.

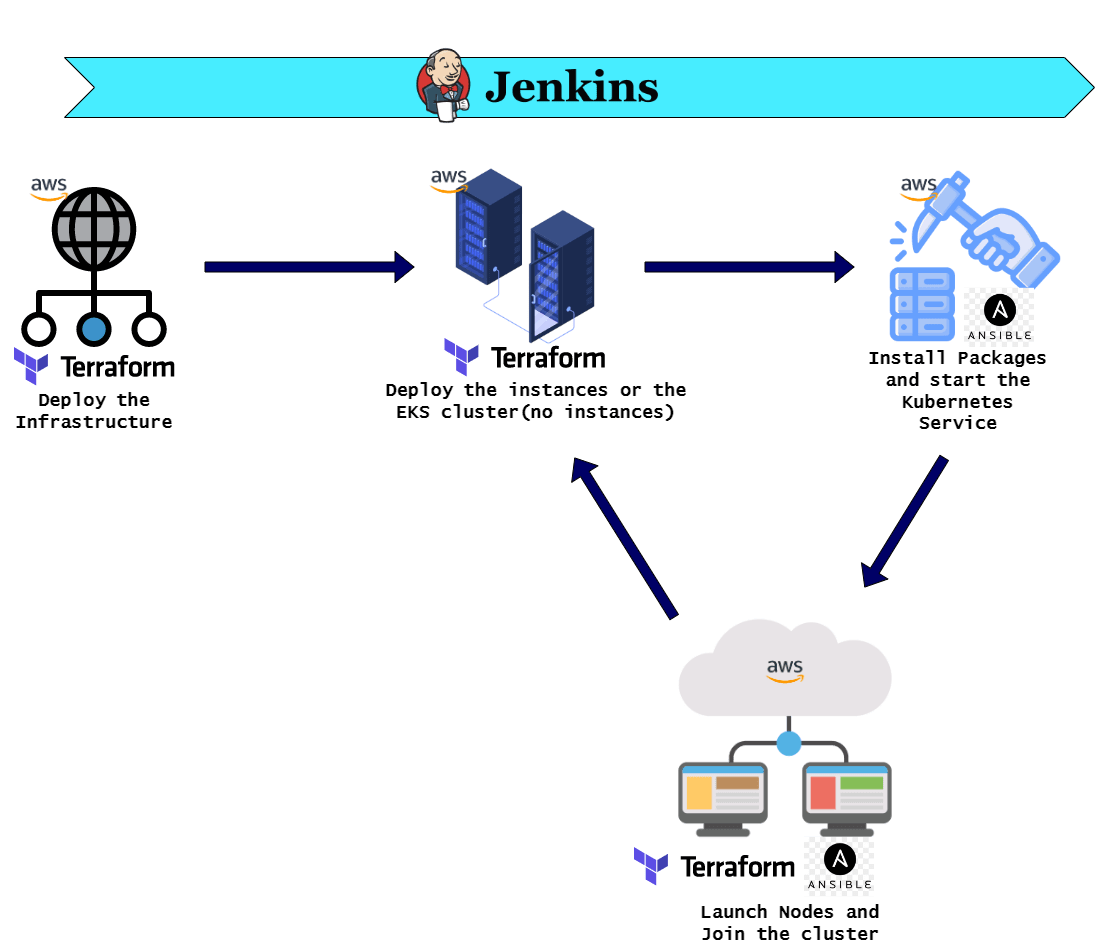

Functional Flow

Let me first explain the process I will be following to deploy the cluster. I will go through two methods of deploying the cluster:

- Using EC2: In this method the cluster will be deployed using just EC2 instances on AWS. Both control plane and the nodes will be EC2 instances which you can directly access and control. The controlplane and nodes EC2 instances will be configured accordingly.

- Using AWS EKS: In this method the cluster will be deployed on the managed platform called EKS, which is provided by AWS. Since EKS is managed by AWS, we will write a declarative configuration and deploy the cluster from it. To have a serverless approach, I will be using Fargate instances for the nodes. To know more about Fargate, click Here.

Let's go through each of the methods and see how this will work.

Using EC2 Instances

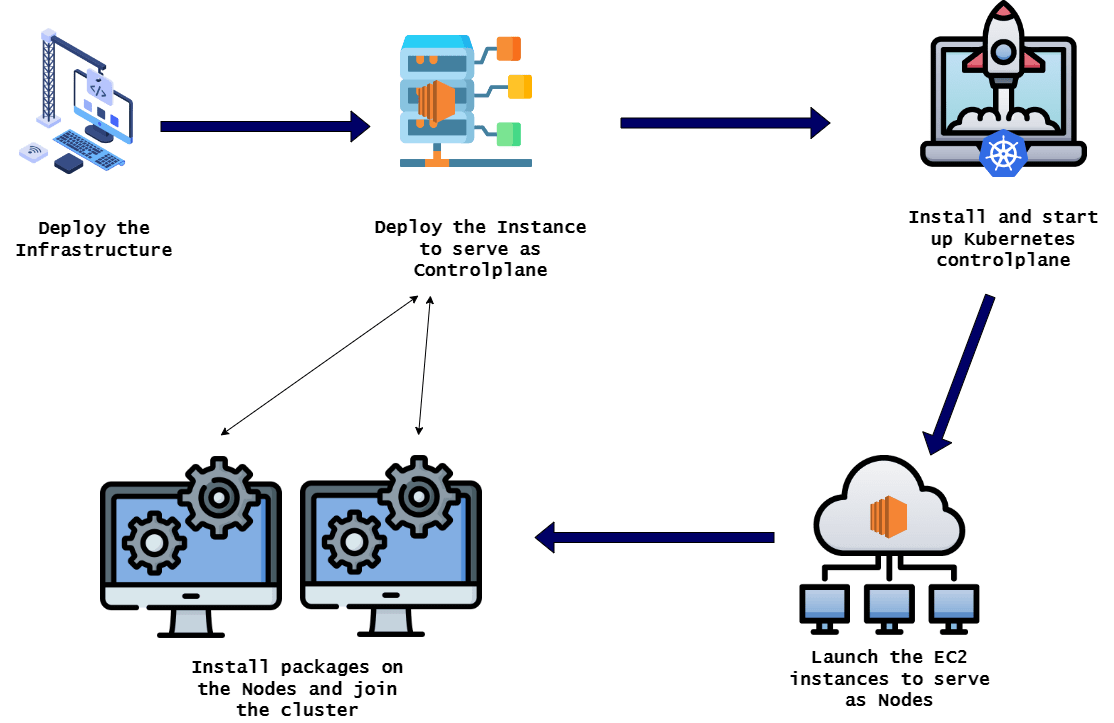

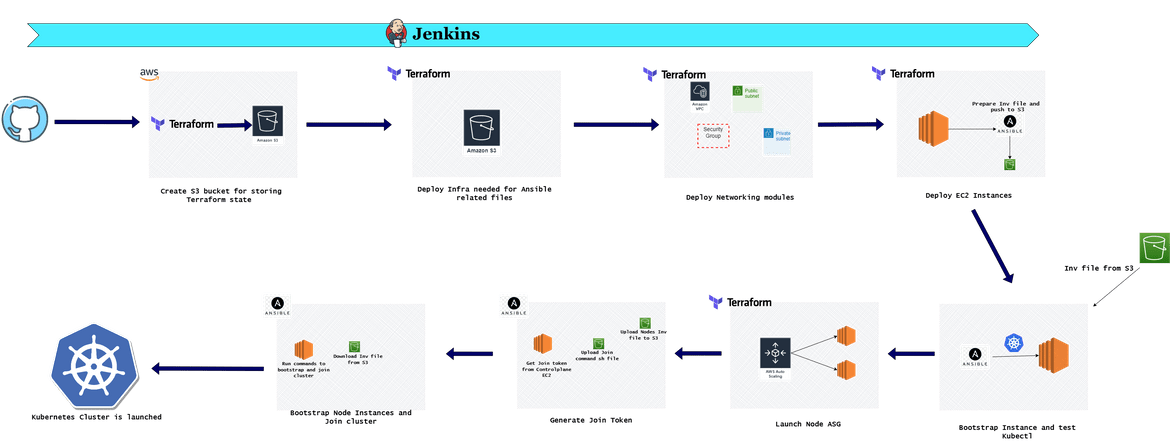

The first method we will go through is deploying the cluster using EC2 instances. Here we will deploy both the controlplane and some nodes on separate EC2 instances. The flow below shows the overall process.

- Deploy the Infrastructure: This is the first step, where the infrastructure to support the cluster running on the EC2 instances is deployed. Some of the items which will be deployed as part of the infrastructure are:

- Networking components like VPC, Subnets etc.

- S3 buckets to store various temporary files needed during the deployment flow, and to store the infrastructure state during the deployment

- Deploy the Controlplane Instance: In this stage the EC2 instance is deployed on the above infrastructure. A production grade Kubernetes cluster normally has multiple controlplane nodes for availability and scalability, but for this example I am deploying a single EC2 instance as the controlplane.

- Bootstrap the controlplane: The EC2 instance deployed above needs to be prepared so that a Kubernetes cluster can start on it. In this stage multiple packages are installed on the EC2 instance and the Kubernetes cluster is started on it. At this point the cluster consists only of the controlplane node.

- Launch the EC2 instances for Nodes: To make the Kubernetes cluster scalable, we need more nodes added to it. In this step I am deploying more EC2 instances which will become the worker nodes in the Kubernetes cluster, with the controlplane EC2 instance as the controller.

- Prepare the Nodes and join cluster: For the nodes to be part of the cluster, they need to be prepared. In this step the needed packages are installed on the nodes and the Kubernetes cluster join command is executed on each node EC2 instance, so that it joins the cluster.

That completes the high level flow of deploying the cluster on EC2 instances.

Using AWS EKS

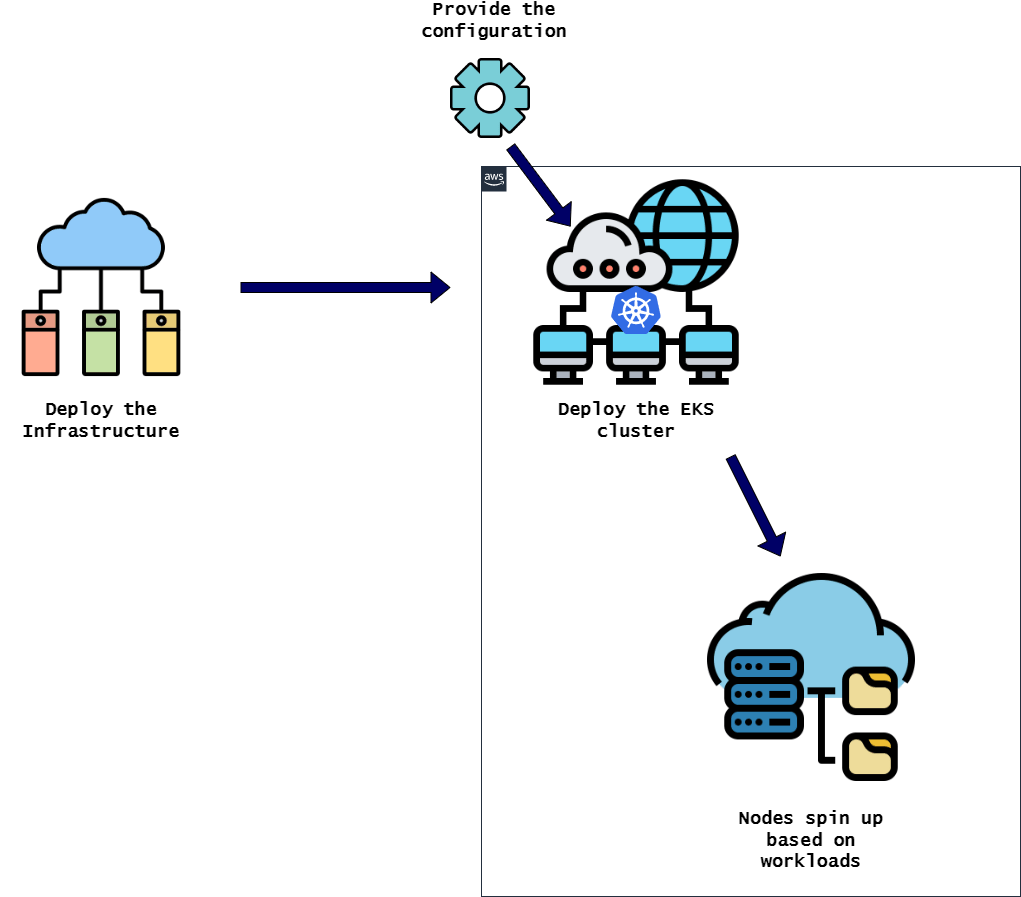

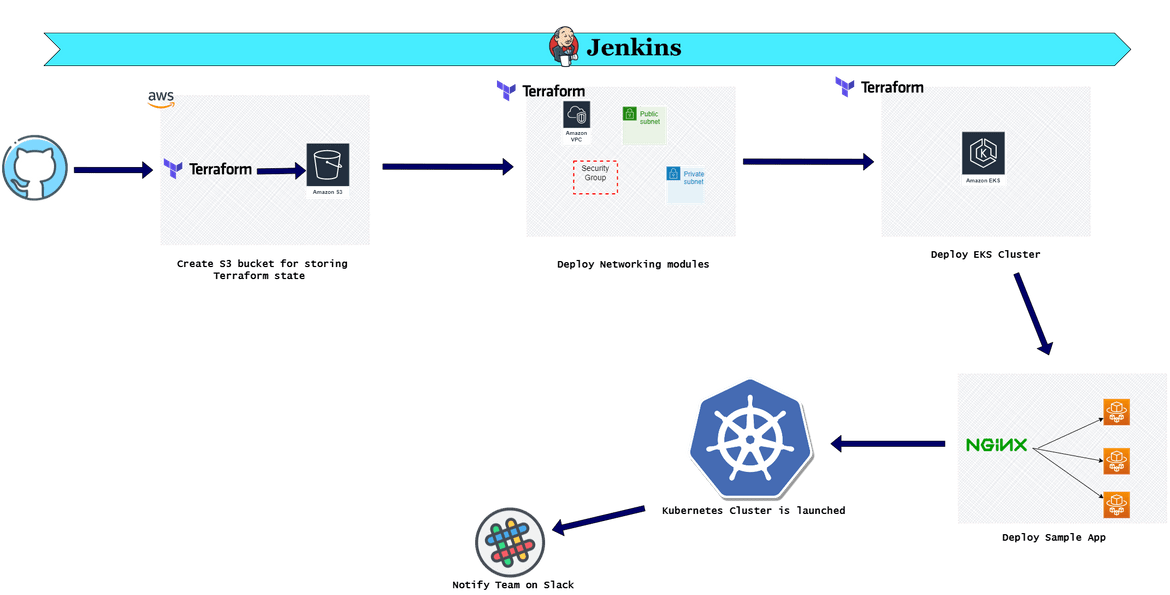

In this method, the cluster will be deployed on the AWS managed platform called EKS (Elastic Kubernetes Service). This platform takes away many of the steps needed to prepare the cluster and takes a more declarative approach. The flow below shows what I will be following in this process:

- Deploy the Infrastructure: In this step, the supporting infrastructure is deployed. Even though the cluster is AWS managed, the supporting infrastructure has to be deployed and can be controlled by us. As part of this step, these are the items which are deployed:

- Networking components like VPC, subnets etc.

- S3 buckets to store any temporary files needed during the deployment

- Any IAM roles needed by the components

- Deploy the EKS Cluster: Once we have the infrastructure ready, we can go ahead and deploy the EKS cluster. Since the cluster is managed by AWS, we don't need to do any installations manually. We pass the configuration needed for the cluster (like VPC, subnets etc.) and AWS handles spinning up the cluster.

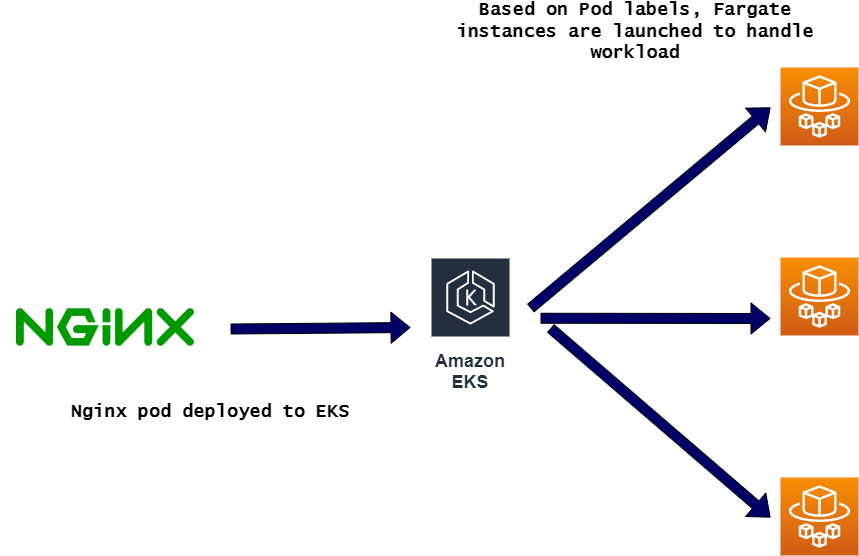

- Spin up Nodes for the cluster: With the EKS cluster, AWS spins up the controlplane for the cluster. For this example I am using Fargate instances as nodes, so the nodes spin up automatically as workloads get deployed on the cluster. As the workloads grow, more Fargate nodes spin up to handle the load. Apart from Fargate instances, normal EC2 instances can also be attached to the cluster as nodes. Even with EC2 there is no need for manual installations: AWS EKS handles spinning up the EC2 nodes and manages them as part of the cluster.

Now that we have some basic understanding of what I will be deploying, let's dive into some technical details of the parts.

Tech Details

Let me explain how each of the clusters is composed from different AWS components. For both of the methods, I will be using Jenkins to automate the whole deployment. Here I describe each of the clusters.

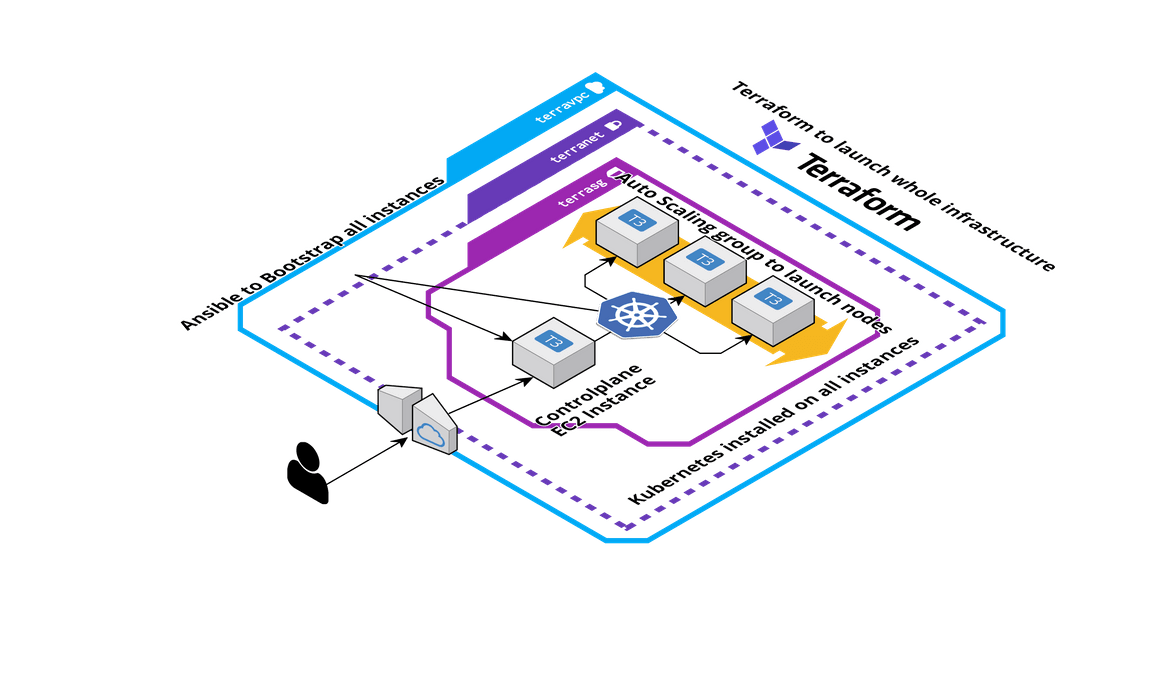

Cluster on EC2

This cluster consists of EC2 instances which are deployed separately. The image below shows the overall cluster which will be built in this process:

- Controlplane EC2 Instance: One EC2 instance is deployed to serve as the controlplane node for this cluster. Since it is the controlplane node, the instance should not be too small: kubeadm needs at least 2 CPUs and 2 GB of RAM on a controlplane machine, so a mid-sized instance from a family such as t3 is a reasonable choice. For the EC2 instance to act as the controlplane, a few packages are installed on it. Ansible is used to bootstrap the instance and install all the needed packages. At a high level these are the steps which are followed on the controlplane instance after it is spun up:

- Install Docker

- Install Kubernetes

- Start Kubernetes using Kubeadm and start the service on this node

- Generate the Kubeadm join command for other nodes to join the cluster
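To make the last two steps concrete, here is a minimal shell sketch of what they boil down to on the controlplane instance. It is not the Ansible role from the repo: the function name `init_controlplane`, the pod network CIDR and the output file path are assumptions made for illustration. The two kubeadm calls are the standard ones for starting a controlplane and printing a join command.

```shell
# Sketch only: the CIDR and file path defaults are illustrative assumptions,
# not values taken from the repo.

# Initialise the controlplane and save the join command for the other nodes.
init_controlplane() {
  local pod_cidr="${1:-10.244.0.0/16}"           # assumed pod network range
  local join_file="${2:-/tmp/kubeadm_join.sh}"   # assumed output location

  # Start the Kubernetes controlplane on this node.
  kubeadm init --pod-network-cidr="$pod_cidr" || return 1

  # Print a ready-to-run "kubeadm join ..." line and store it for later use.
  kubeadm token create --print-join-command > "$join_file" || return 1
  echo "join command written to $join_file"
}
```

This would be run as root on the controlplane, for example `init_controlplane 10.244.0.0/16 /tmp/kubeadm_join.sh`. The saved line is what each node later executes to join the cluster.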

-

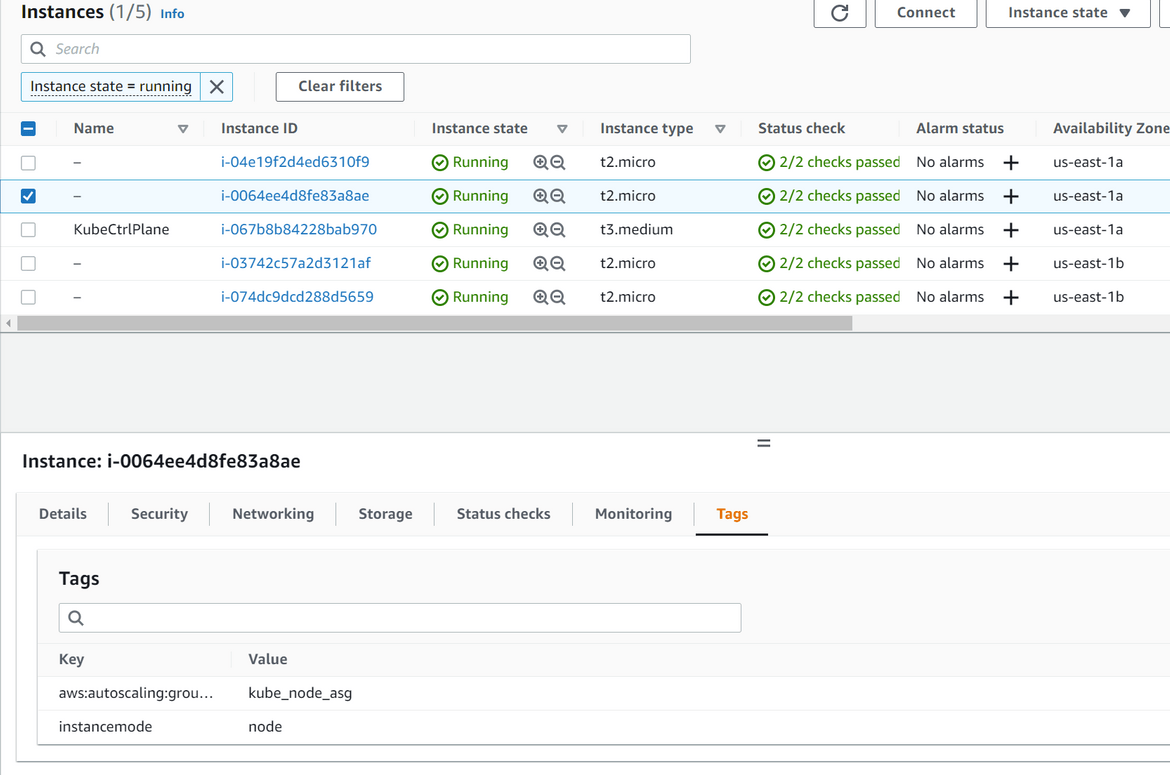

Auto Scaling Group for the nodes: Now we have the controlplane for the cluster but we will need other nodes joined to the cluster to have a scalable Kubernetes cluster. To launch the nodes automatically based on load on the cluster, an auto scaling group is being deployed here. The ASG scales in and out with the number of nodes which become part of the cluster. Based on the load on the cluster, the node count varies and is controlled by the ASG. Once the nodes launch, Ansible is used to bootstrap the nodes and make them join the cluster. These are the steps which are performed using Ansible on the nodes:

- Install Docker

- Install Kubernetes

- Run the Kubeadm join command with the join token, so the node becomes part of the cluster

- Networking: All of the instances which are spun up by this process are deployed inside a VPC which also gets created as part of the process. These are the components which get deployed as part of the networking for the whole cluster:

- VPC for the whole cluster

- Subnets for different instances across multiple AZs

- Security groups to control the traffic to these instances

- Internet Gateway for outside access to these instances

- Use of Terraform: All of the infrastructure mentioned here is deployed using Terraform. Different Terraform modules have been created to handle each of the components of the cluster. I will go through the Terraform scripts in detail below.

- Use of Ansible: Ansible is used to handle all of the package installations and other steps needed on the instances. Since the installations and the cluster commands are automated, they are all run by different Ansible playbooks. These playbooks are executed on the instances via SSH from the Jenkins pipeline. I will go through these playbooks in detail in the sections below.

That should explain the whole cluster architecture and how it is deployed across multiple EC2 instances.

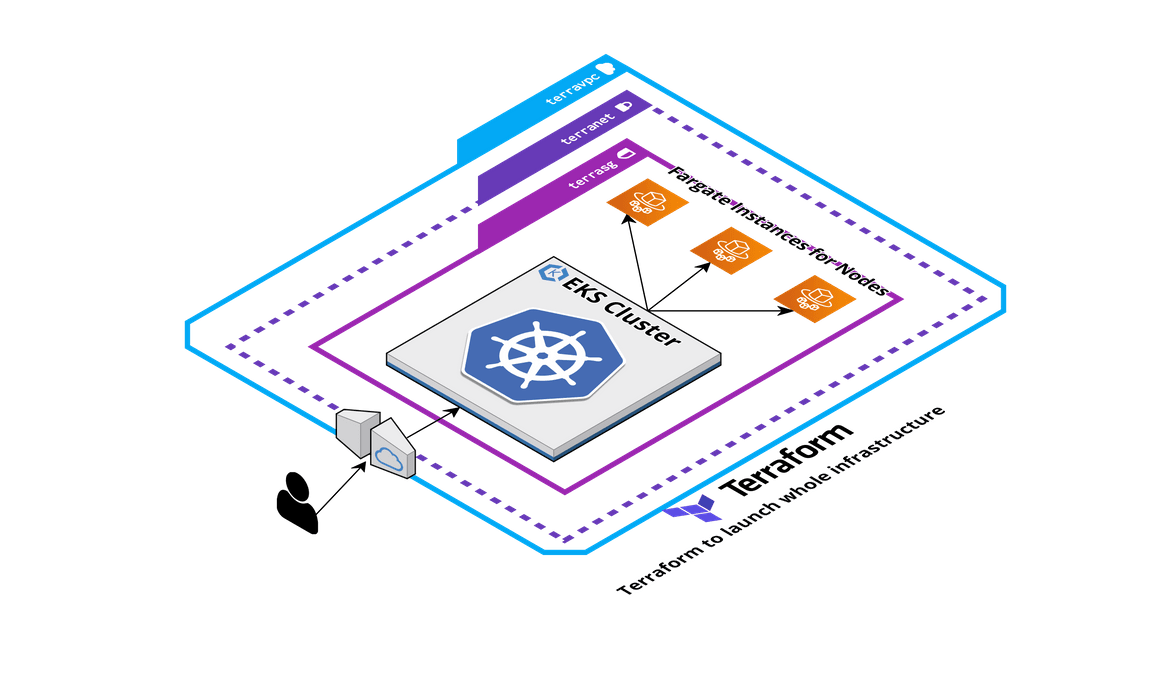

Cluster on EKS

In the next method, I am describing the cluster architecture which is deployed using AWS EKS. Here there is no direct involvement in launching EC2 instances and bootstrapping them. The image below describes the cluster architecture:

- AWS EKS Cluster: This is the central component of the cluster. The EKS cluster is defined in a declarative configuration and deployed using Terraform, and AWS manages spinning up the cluster and keeping it operational. I will cover in detail how this is deployed.

- Networking: The networking part is the same as in the above method with EC2 instances. Even for the EKS cluster, the networking components need to be deployed separately so the cluster can run in them. The same networking components are deployed in this process.

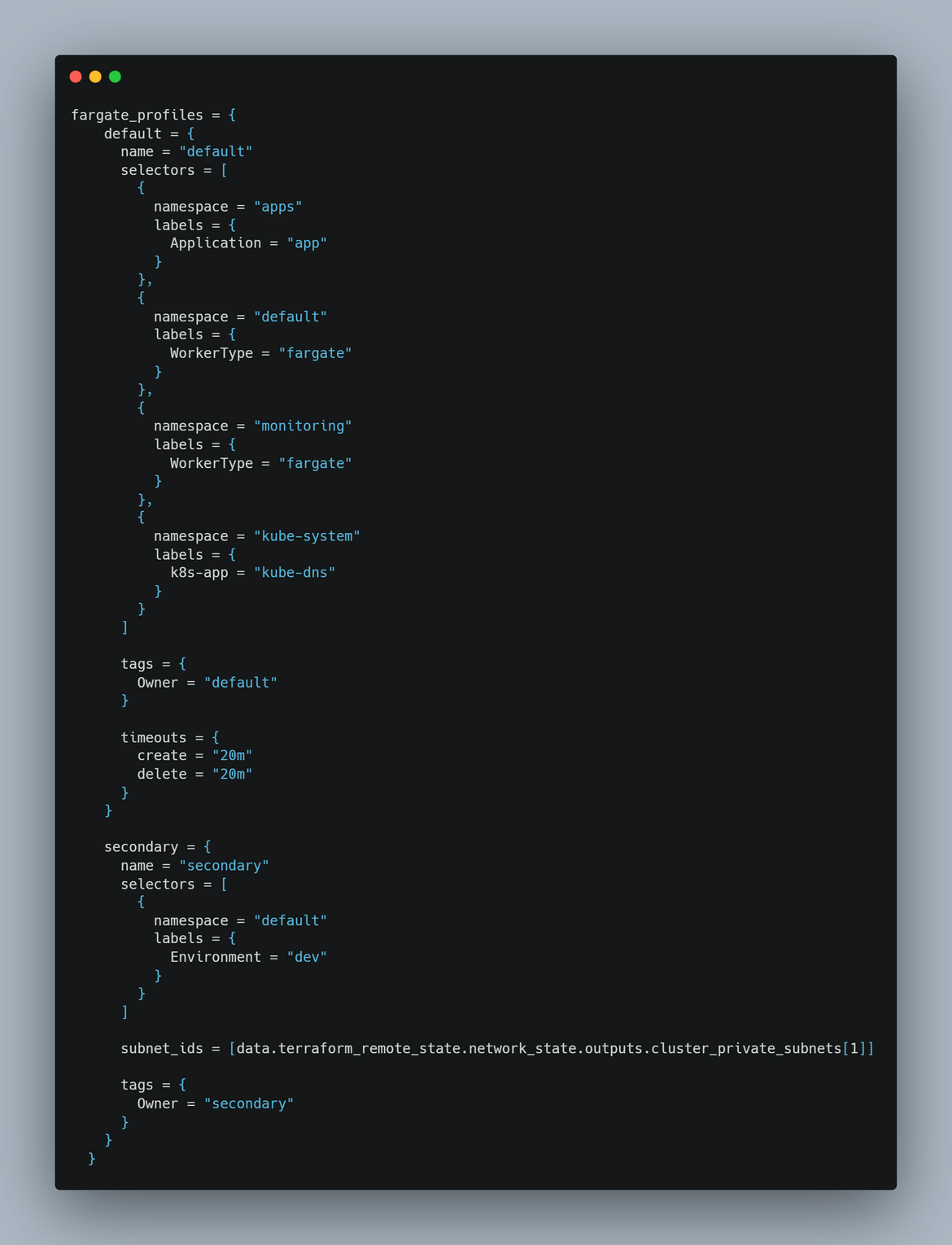

- Fargate Instances: For the nodes in the cluster, I am using Fargate instances. These are AWS managed instances which work as nodes in the cluster. They spin up automatically as workloads get deployed to the cluster, which takes care of the autoscaling and makes this a serverless way of adding nodes. I will cover in detail later how the workloads get deployed to the Fargate instances.

- Use of Terraform: All of the infrastructure described above is deployed using Terraform. The declarative configuration for the cluster is specified in the Terraform script which deploys the whole cluster.

That explanation should give a good idea of both of the processes and what we will be setting up in this post. Let's walk through setting up each of the clusters.

Setup Walkthrough

In this section I will walk you through setting up the whole cluster via both of the processes. You can use either of them to spin up your own cluster. At a high level, this is the general flow which I will be setting up on Jenkins to launch each of the clusters.

Above is a very high level depiction of the process. Let me go through the different parts of the process in detail.

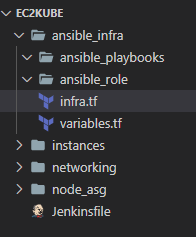

Folder Structure

Before diving into the setup process, let me explain the folder structure of the scripts in my repo. If you are using my repo for spinning up your cluster, this should help you understand the setup. I have divided the two approaches into two separate folders, each with its own folder structure.

- Using EC2 Instances: Below is the folder structure for this

ansible_infra: This folder holds everything related to Ansible used to bootstrap the instances in the cluster. It holds all the playbooks and the role to install and start Kubernetes on the controlplane and the other nodes in the cluster. It also holds the Terraform script to launch the infrastructure needed to hold the Ansible playbooks and inventory files for the nodes to pull from. More on that later.

instances: This folder holds the Terraform scripts to launch the EC2 instance for the controlplane node.

networking: This folder holds the Terraform scripts to launch all the networking components of the cluster like VPC, subnets etc.

node_asg: This folder contains all the Terraform scripts to launch the Auto Scaling Group that will launch all of the other nodes in the cluster.

Jenkinsfile: Finally, the Jenkinsfile for the pipeline to launch the whole cluster step by step.

- Using EKS Cluster: Below is the folder structure for this process:

cluster: This folder contains all the Terraform scripts to launch the EKS cluster. This is defined as a Terraform module which gets deployed via Jenkins.

networking: This folder contains all of the Terraform scripts to launch the networking components of the cluster like VPC, subnets etc. The EKS cluster gets deployed on this network.

sample_app: This folder contains a YAML file to deploy a sample Nginx pod. This pod is deployed to demonstrate how Fargate instances get deployed once workloads are created on the EKS cluster.

Jenkinsfile: Finally, the Jenkinsfile to define the pipeline which will deploy this whole cluster and its components.

That is the folder structure I have created in the repo I shared for this post. In the steps below, however, I have separated those folders into separate branches in the same repo. Each of those branches triggers different Jenkins stages, which I will go through below. You can take my repo as is, or convert it to a branch structure like this to easily trigger separate pipelines.

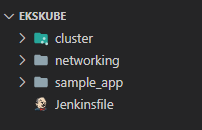

Jenkins Setup

Now that we have seen how the codebase is structured, it is time to prepare Jenkins for running the deployment steps. Let's go through the steps one by one. Before starting to set up Jenkins, since we will be deploying to AWS, we need an IAM user which the pipeline will use to connect to AWS. So first go ahead and create an IAM user with permissions to work with EC2 and EKS. Note down the AWS keys for the user, as we will be needing those next.

Once we have the IAM user, let's move on to setting up Jenkins.

- Install Jenkins:

Of course the first step is to install Jenkins on a server or a system as suitable. I won't go through the steps to install Jenkins, but you can follow the steps Here.

-

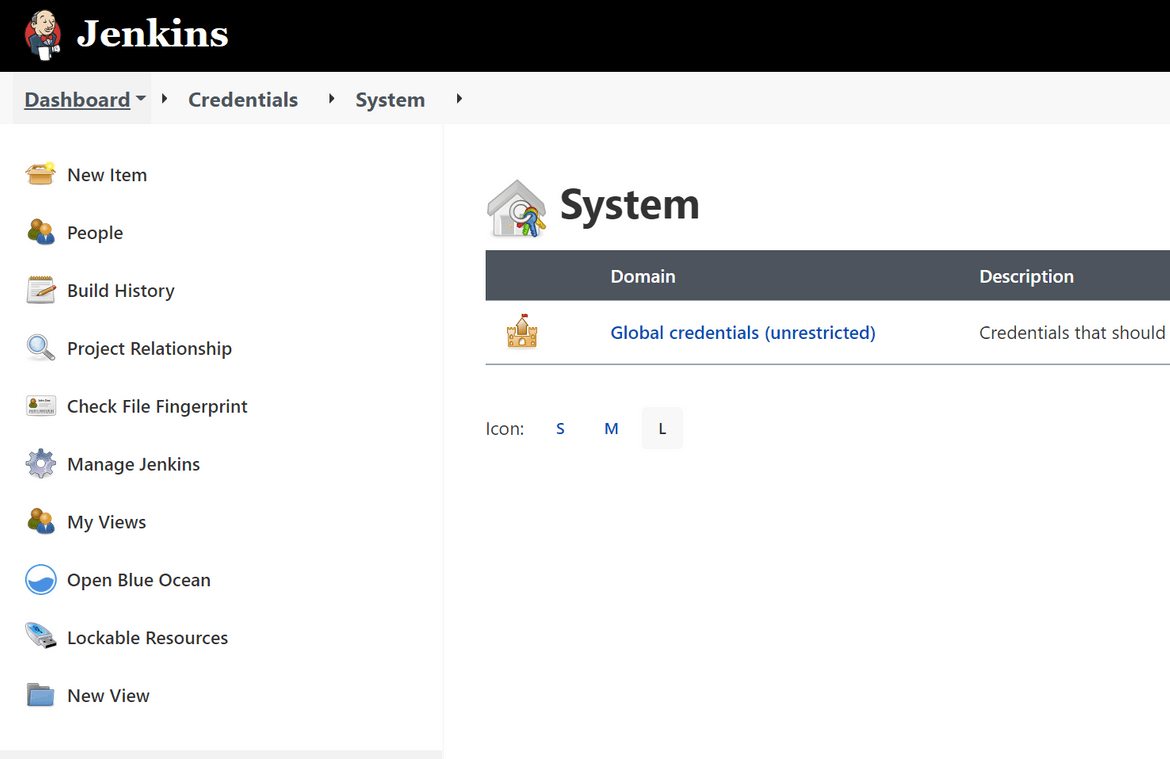

Setup Credentials:

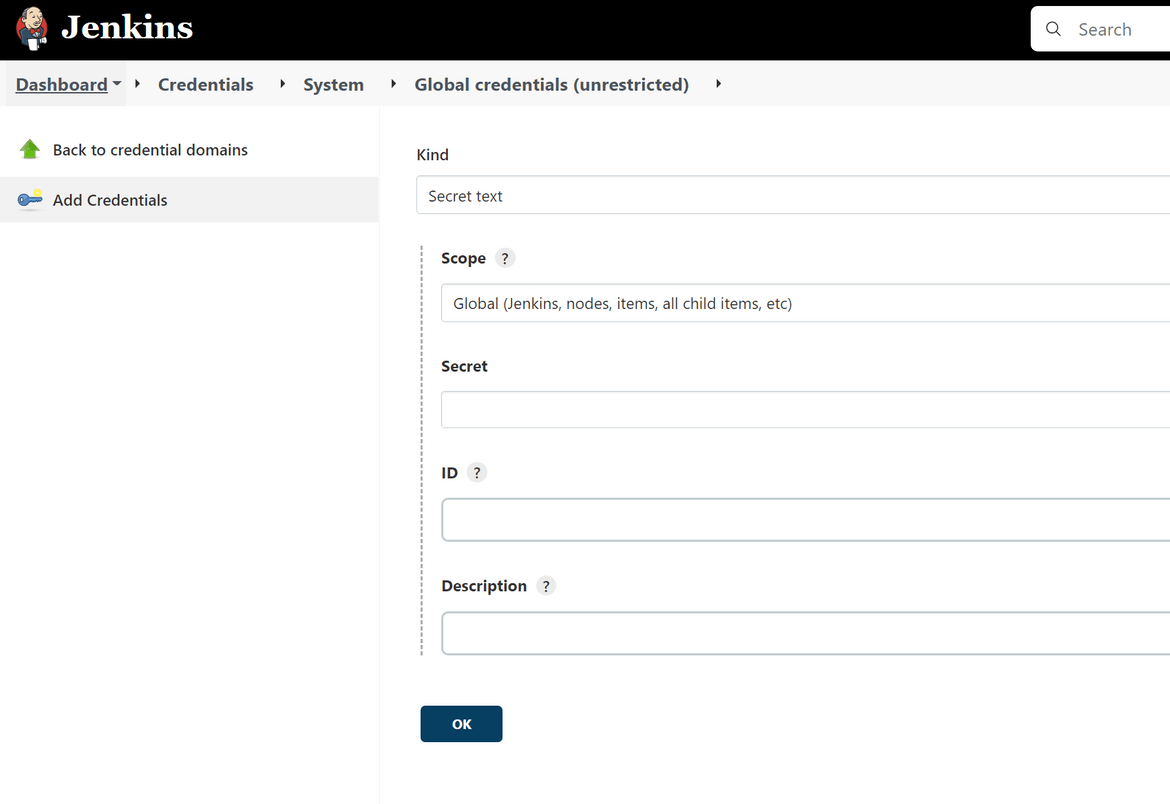

For Jenkins to connect to AWS, it needs the AWS credentials. In this step we will set up the credentials in Jenkins. Log in to Jenkins and navigate to the Manage credentials page:

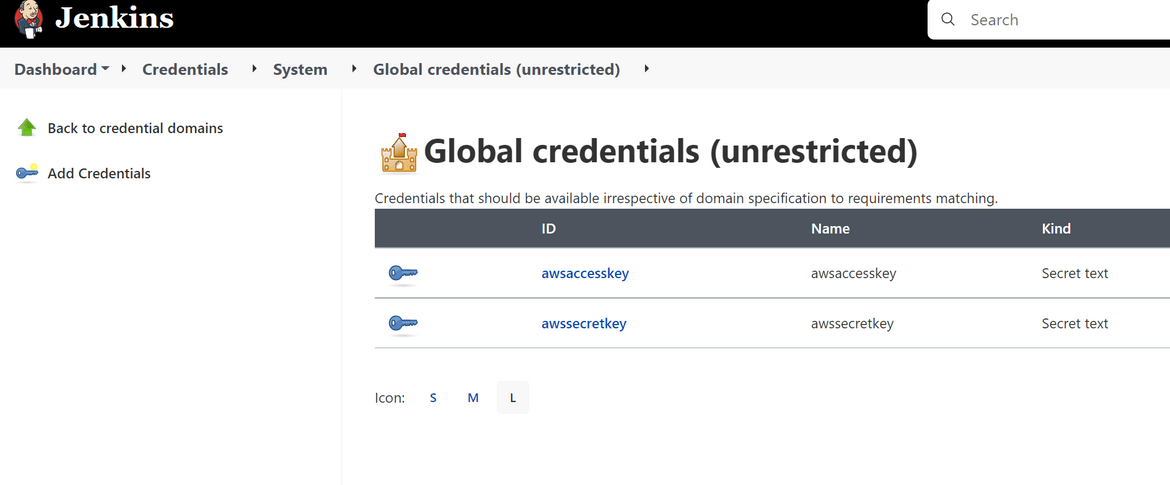

On the credentials page, add a new credential of kind Secret text. Put the AWS key value in the Secret field and provide a name for the credential in the ID field. This is the name which will be referred to in the Jenkinsfile to access the credential.

Add one such credential for each of the two AWS keys (the access key ID and the secret access key) from the IAM user we created earlier. There should be two credentials now. If you used different names, update the Jenkinsfile accordingly. This is what I have used for my Jenkins pipeline:

These two credentials will be used by the pipeline to communicate with AWS.

- Setup Pipeline:

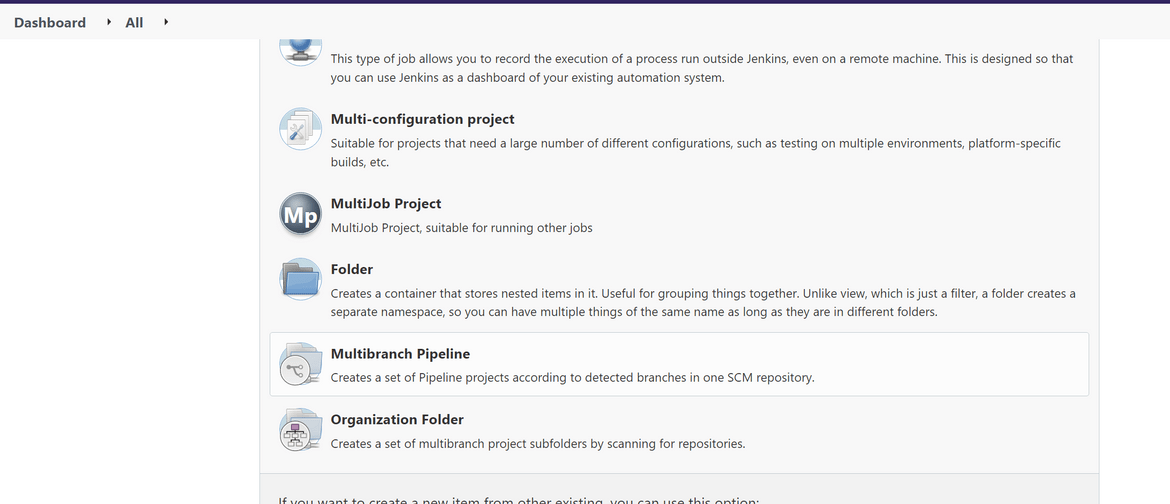

Now we will set up the actual pipeline which will run and perform the deployment. As I stated above, in this example I have kept the EKS related and EC2 cluster related files in two separate branches, so I will be creating a multibranch pipeline to scan both branches and run accordingly. Log in to Jenkins and create a new Multibranch Pipeline from the create option.

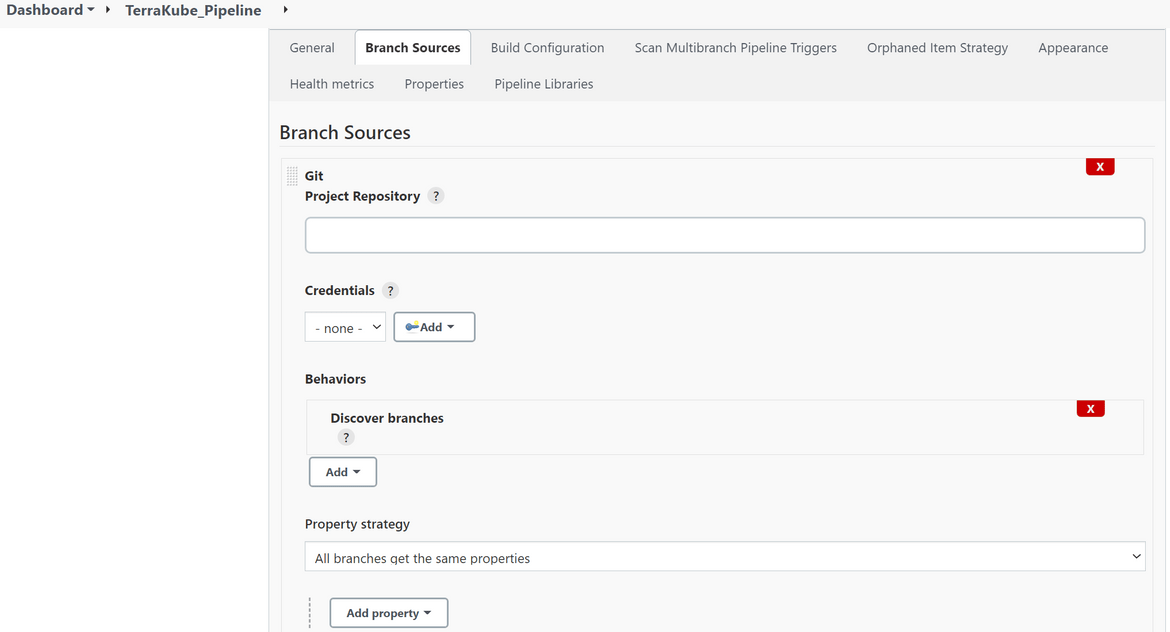

On the options page, select the Git repo which will be the source for the scripts and the two branches. To be able to connect to the repo, the credentials also have to be specified in the same option. If it is a public repo, no credentials are needed. I would suggest cloning my repo and using your own copy for the pipeline.

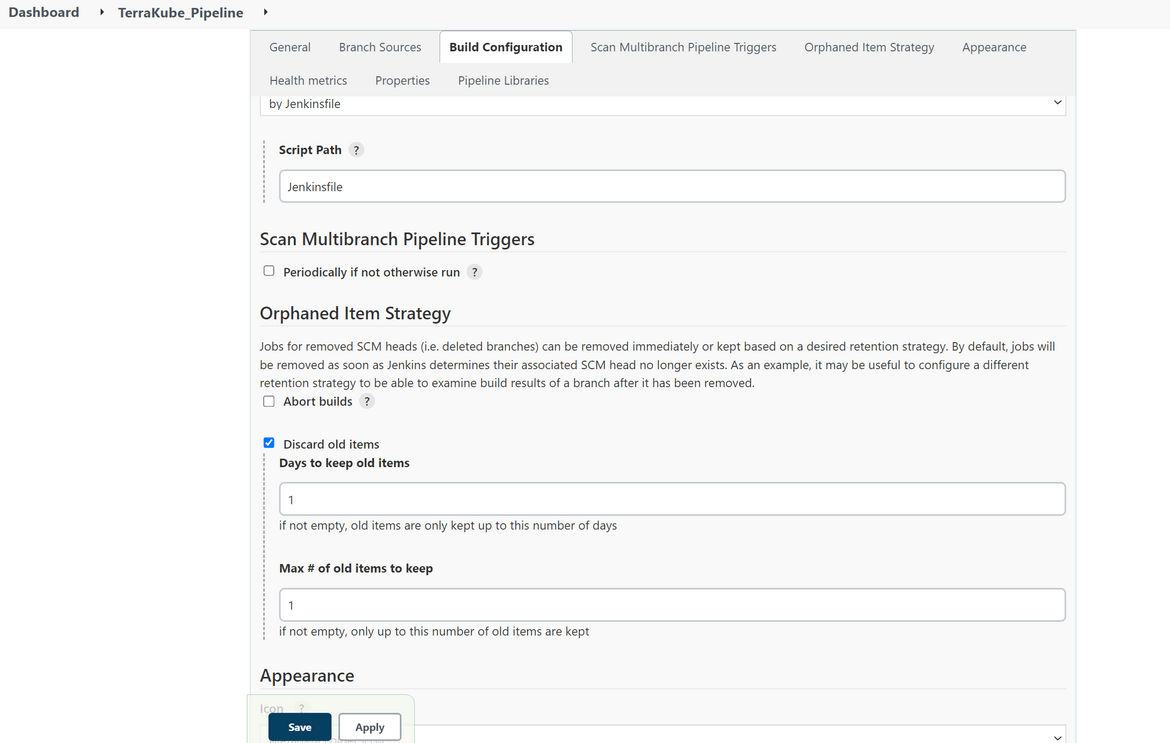

I am using Jenkinsfile as the name of the file, but if you are using some other name, specify it in the options. This tells Jenkins which file in the codebase to read the pipeline definition from when it checks out the code. Other settings can be kept at their defaults or tweaked as needed. I have kept the default values for the other settings.

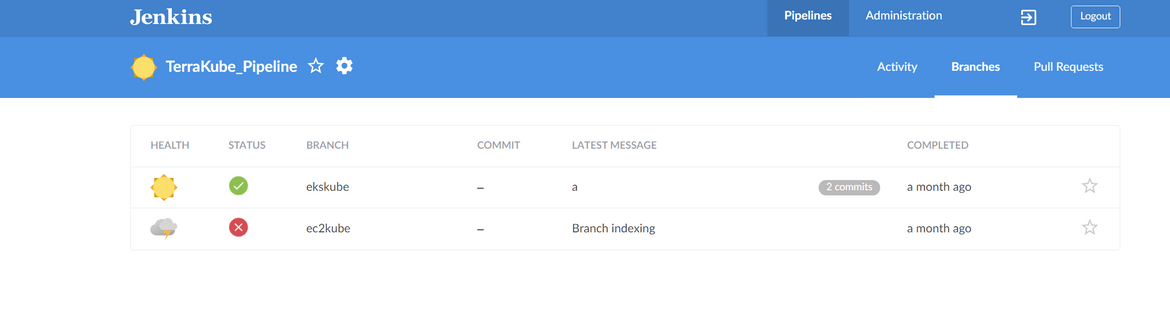

Save the pipeline. Once you save, Jenkins will start scanning for branches in the repo. At this point it will fail if you don't have any content in the pipeline yet. If you have created the branches already, they should show up as separate runnable pipelines on Jenkins.

That should complete the initial Jenkins setup, and you are ready to run the pipelines for deployment. Next let's move on to understanding the two pipelines and running them.

Deploy EC2 Cluster

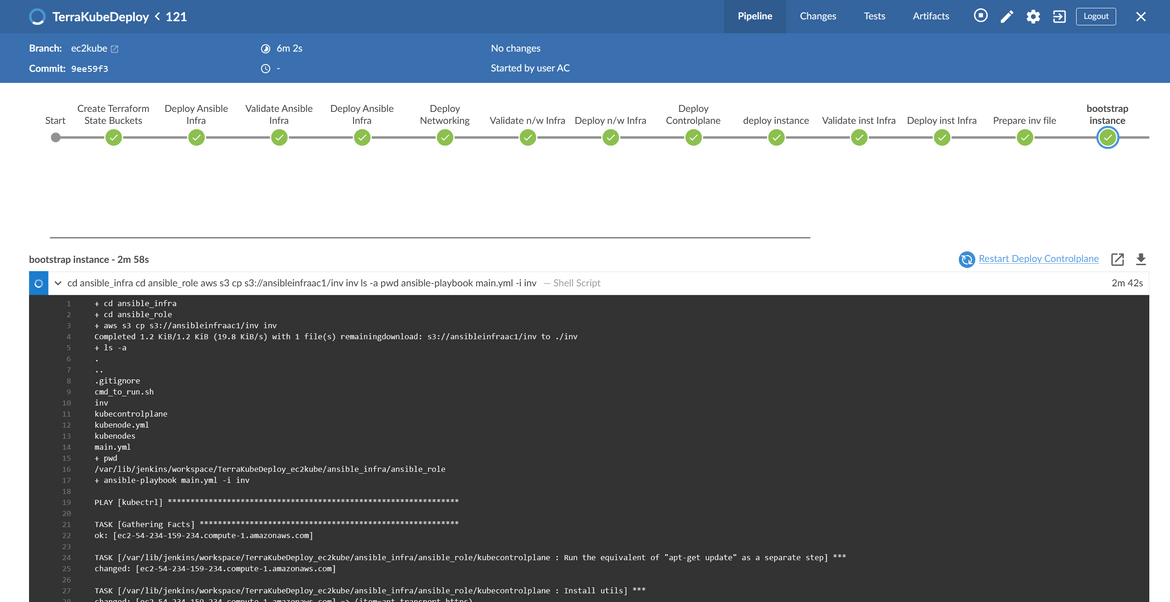

Let's first go through deploying the Kubernetes cluster using EC2 instances. The pipeline below shows all of the deployment steps which are carried out by Jenkins:

Let's understand each of the stages.

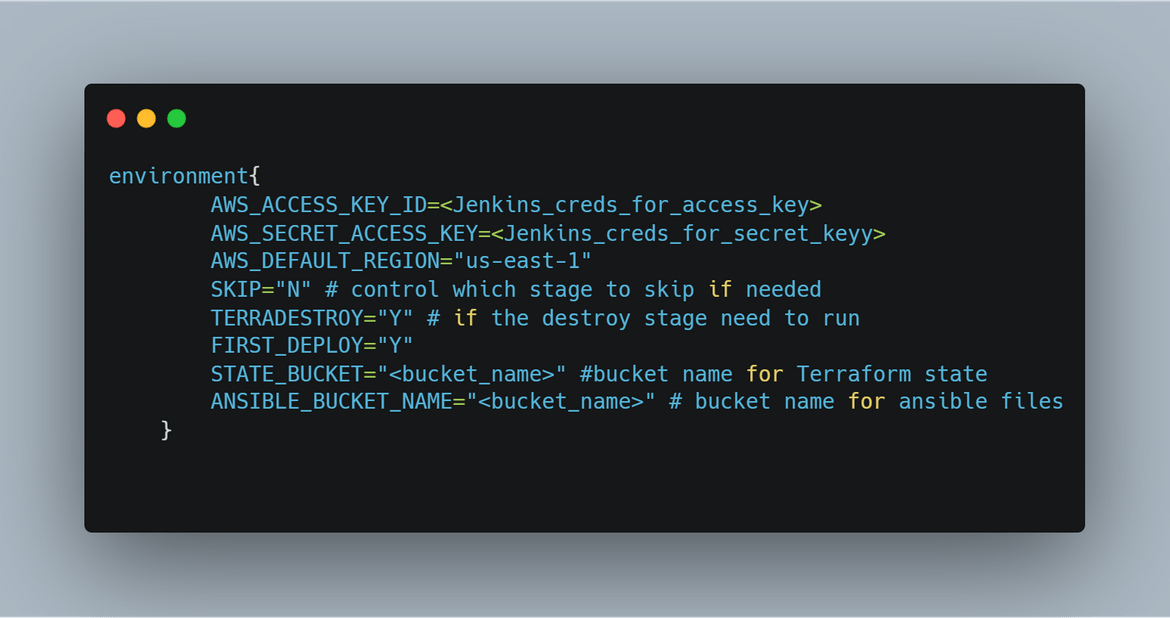

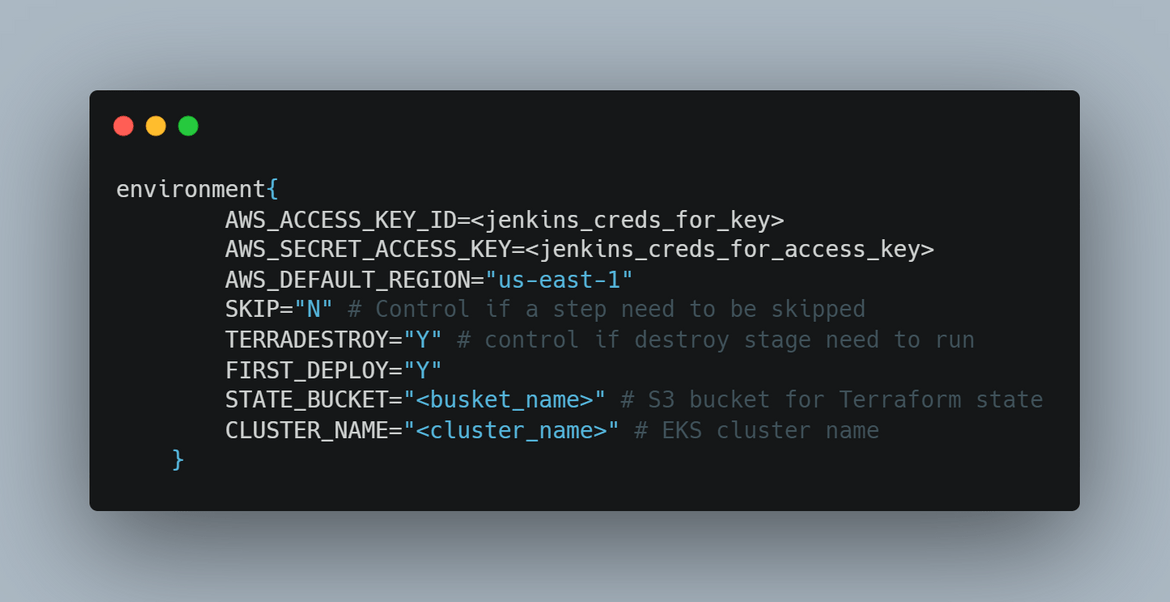

- Environment Variables: The first step is to set the needed environment variables which are used by different steps in the pipeline. Here are the environment variables which are being set:

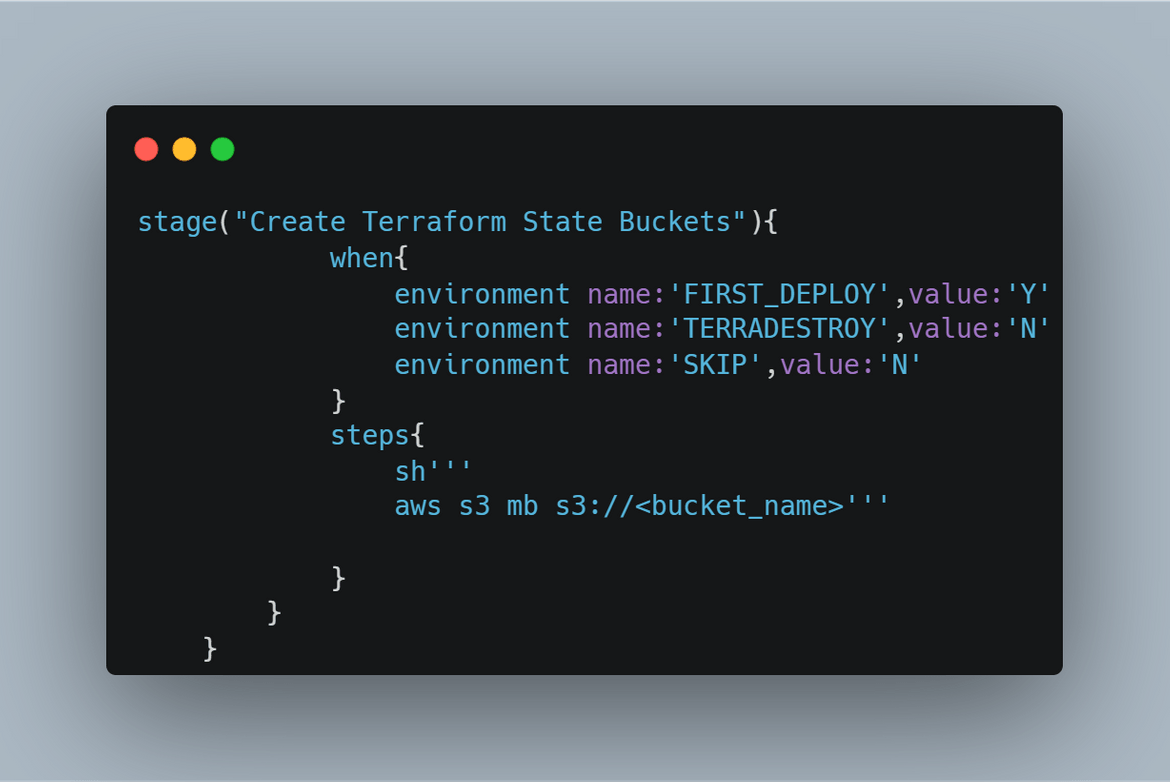

- Create S3 Buckets for State: In this stage, the pipeline creates the S3 buckets which are needed for storing the Terraform state. The bucket name is set in the environment variables, and the same name is specified in the Terraform scripts so the state can be managed in this bucket.
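A stage like this can be sketched in shell as follows. This is a hedged sketch rather than the stage from the repo's Jenkinsfile: the function name `ensure_state_bucket` is hypothetical, and the bucket name and region are whatever you set in the environment variables. It relies only on the `aws s3api head-bucket` and `aws s3api create-bucket` commands.

```shell
# Sketch only: create the Terraform state bucket unless it already exists.
ensure_state_bucket() {
  local bucket="$1" region="$2"

  # head-bucket exits non-zero when the bucket is missing or not accessible.
  if aws s3api head-bucket --bucket "$bucket" >/dev/null 2>&1; then
    echo "bucket $bucket already exists"
    return 0
  fi

  if [ "$region" = "us-east-1" ]; then
    # us-east-1 does not accept an explicit LocationConstraint.
    aws s3api create-bucket --bucket "$bucket" --region "$region" \
      >/dev/null || return 1
  else
    aws s3api create-bucket --bucket "$bucket" --region "$region" \
      --create-bucket-configuration "LocationConstraint=$region" \
      >/dev/null || return 1
  fi
  echo "created bucket $bucket"
}
```

Checking first keeps the stage safe to re-run, since a second pipeline run would otherwise fail on a bucket that is already there.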

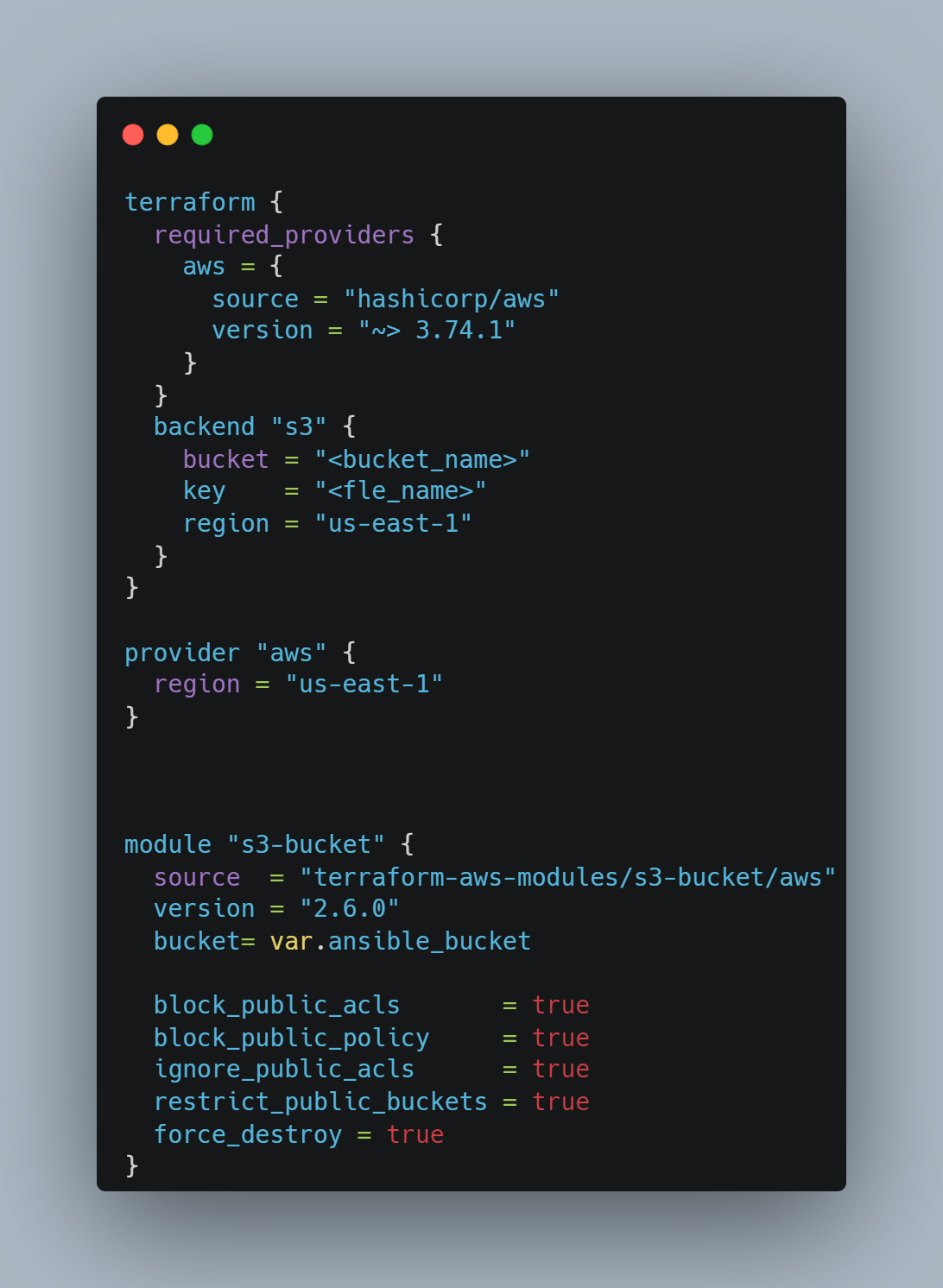

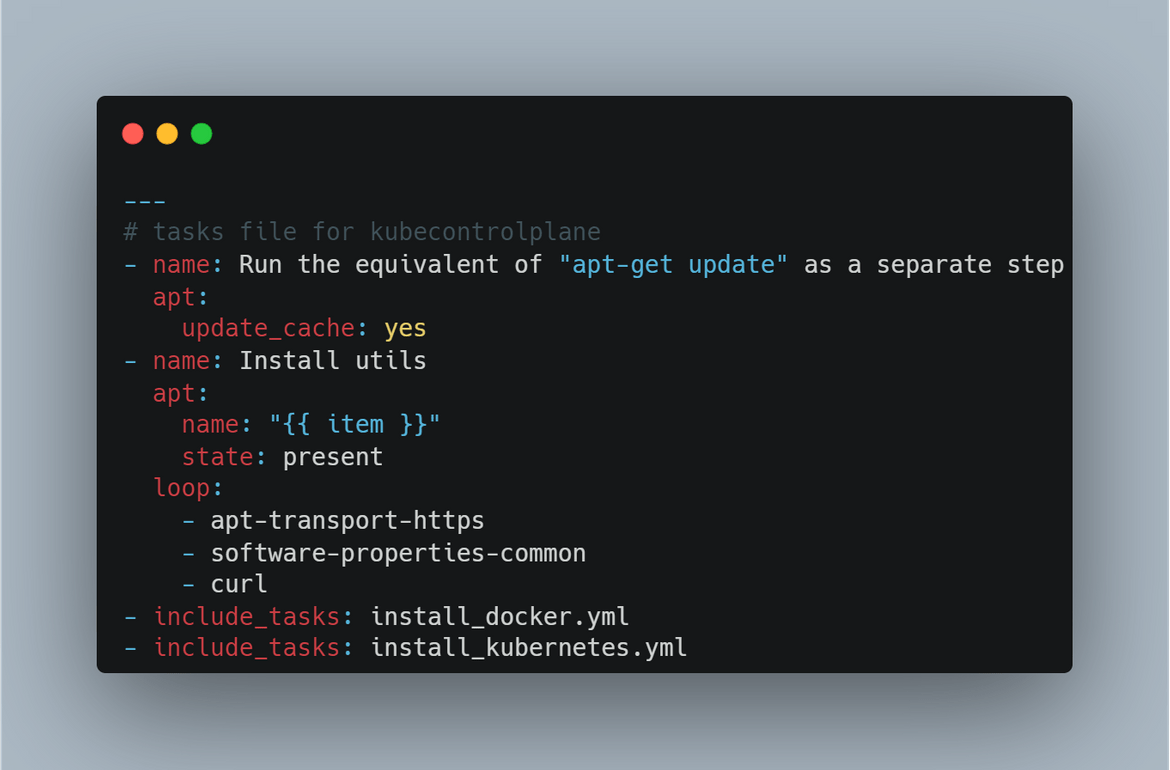

- Deploy Infra for Ansible: In this stage, S3 buckets are created which Ansible uses to store the updated inventory files and to read them back when running commands. Terraform is used to create the buckets. The bucket name is passed via the variable file.

-

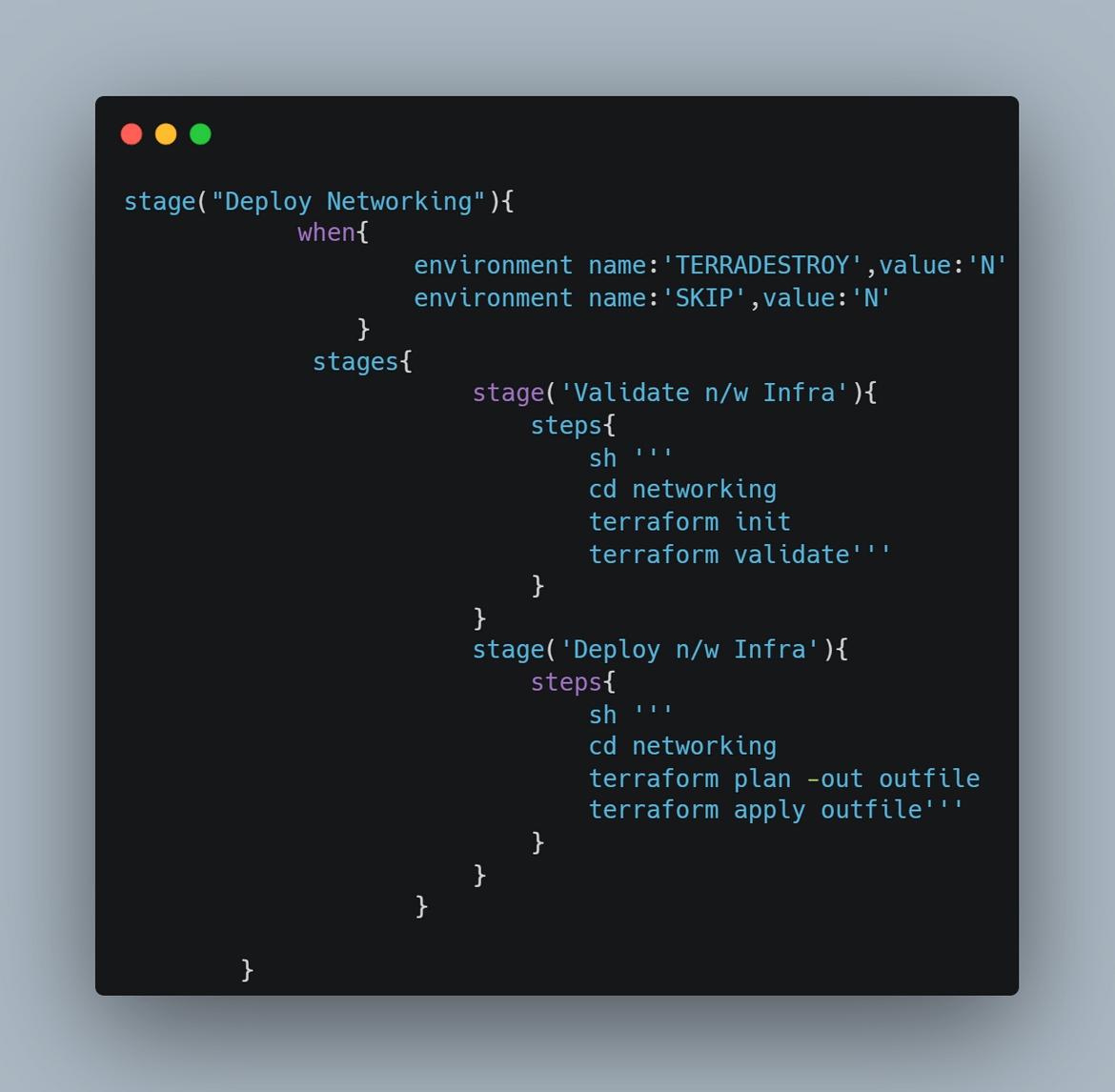

Deploy networking modules: In this stage the networking components are deployed. All of the network components are defined in a Terraform script. The Terraform module gets deployed in the pipeline stage. These are the items which get deployed in this stage:

- VPC

- Subnets

- Security Groups
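Deploying a module such as the networking one from a pipeline stage comes down to a `terraform init` followed by a `terraform apply`. The sketch below assumes the module declares an empty `backend "s3" {}` block so the bucket, key and region can be supplied with `-backend-config`; if the bucket name is hard-coded in the Terraform scripts instead, those flags can be dropped. The function name `deploy_module` and the state key are hypothetical.

```shell
# Sketch only: deploy one Terraform module directory with its state in S3.
deploy_module() {
  local dir="$1" bucket="$2" key="$3" region="$4"

  # Point the S3 backend at the state bucket created in the earlier stage.
  terraform -chdir="$dir" init -input=false \
    -backend-config="bucket=$bucket" \
    -backend-config="key=$key" \
    -backend-config="region=$region" || return 1

  # Apply without an interactive approval prompt, as a pipeline stage needs.
  terraform -chdir="$dir" apply -input=false -auto-approve
}
```

For example, `deploy_module networking my-tf-state networking/terraform.tfstate us-east-1`. The same function could be reused for the other module folders, with a different state key for each.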

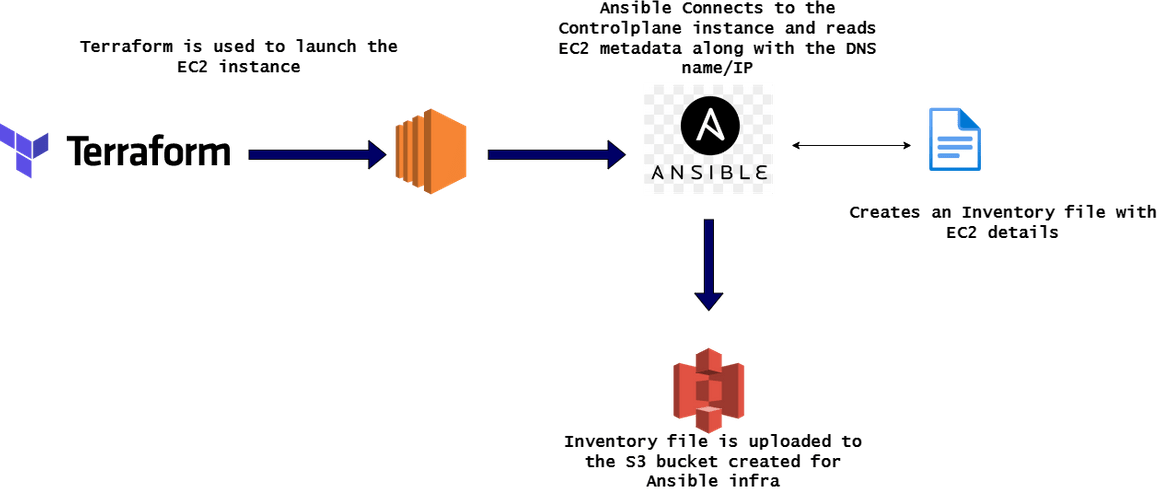

- Deploy EC2 Instances: In this stage the EC2 instance for the controlplane gets created. The EC2 instance is defined in a Terraform module which gets deployed in this stage. But this stage does not just deploy the instance: the instance details also get written to an inventory file. This is what happens in this stage.

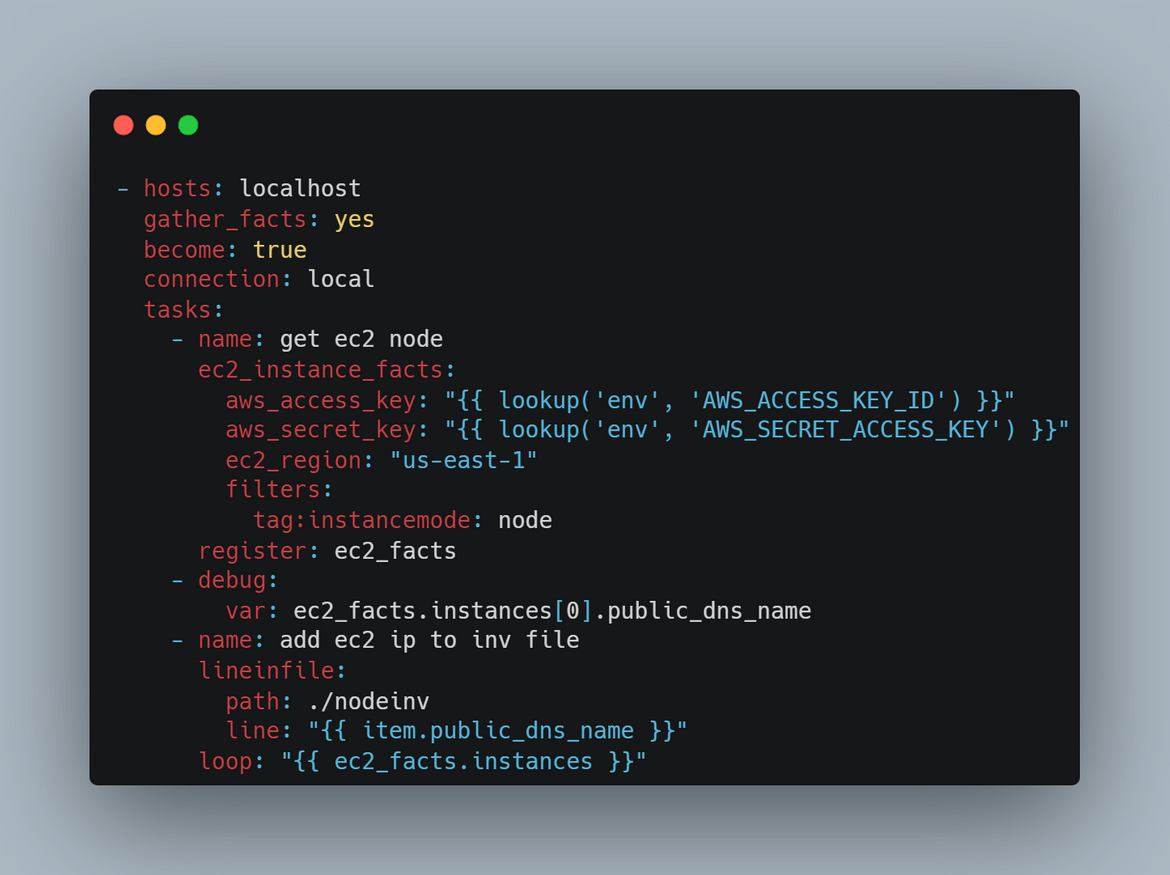

An Ansible playbook is created to perform the Inventory file update and upload to S3. The playbook can be found in the repo I shared.
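The repo does this with an Ansible playbook. As a rough shell equivalent of the same idea, the sketch below writes a one-host inventory and copies it to S3. The function name `publish_inventory`, the `[controlplane]` group name, the `ansible_user=ubuntu` setting and the default file name are all assumptions for illustration.

```shell
# Sketch only: write a one-host Ansible inventory and upload it to S3.
publish_inventory() {
  local ip="$1" bucket="$2" file="${3:-inventory.ini}"

  # INI-style inventory: one group holding the controlplane's address.
  # ansible_user=ubuntu is an assumption about the AMI in use.
  printf '[controlplane]\n%s ansible_user=ubuntu\n' "$ip" > "$file"

  # Later stages download this object before running Ansible.
  aws s3 cp "$file" "s3://$bucket/$(basename "$file")"
}
```

For example, `publish_inventory 10.0.1.5 my-ansible-bucket`, where the IP address would come from the output of the instance module.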

-

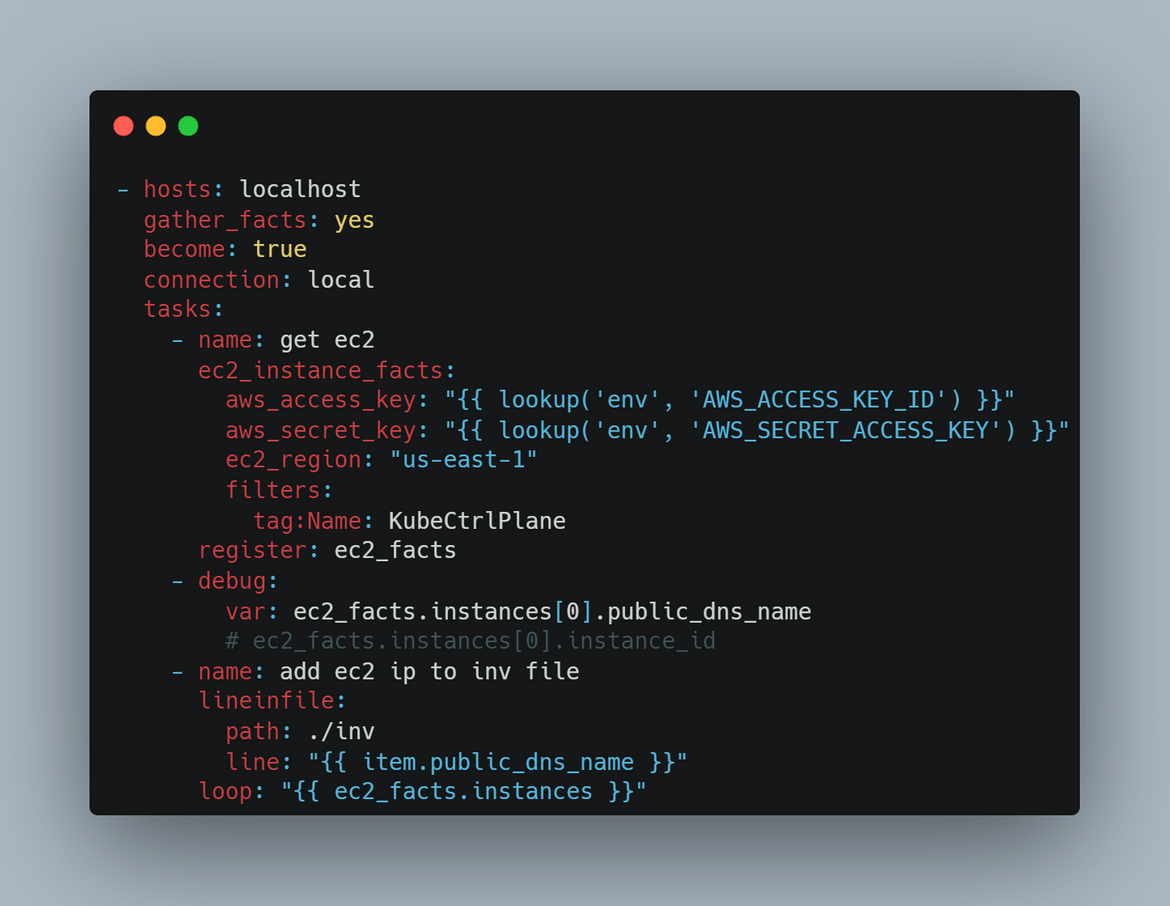

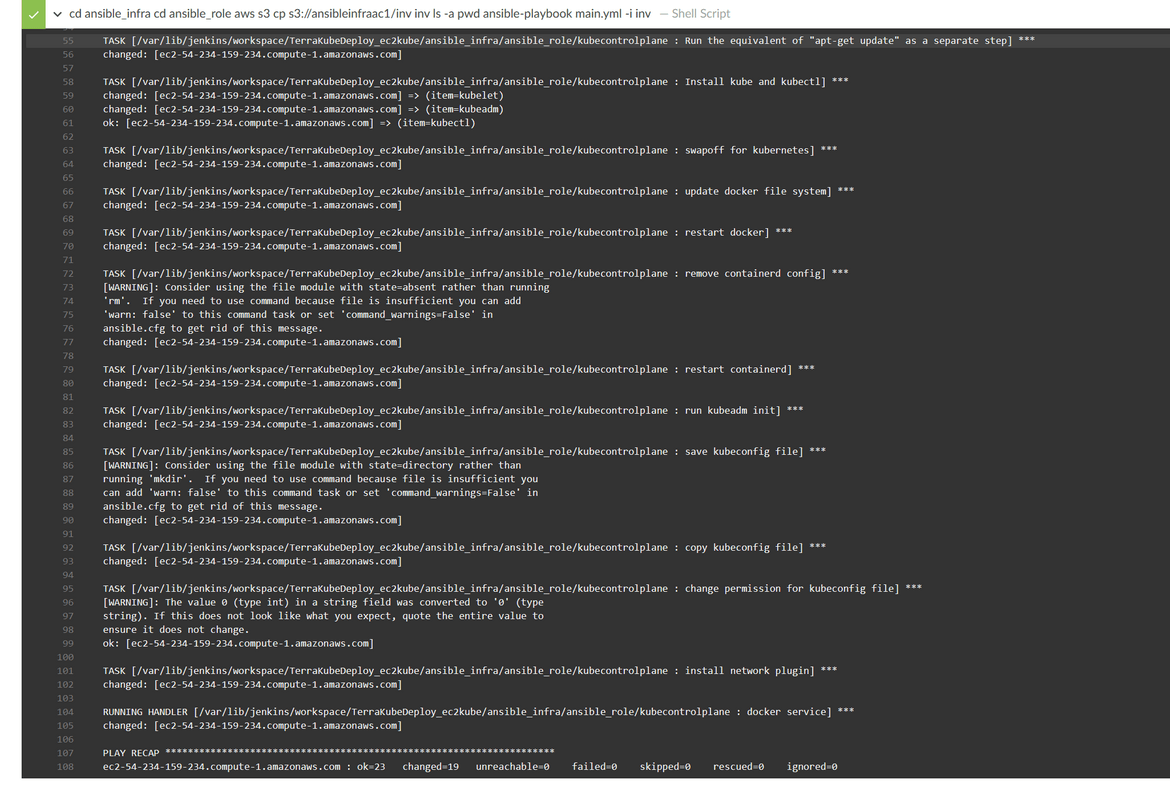

Bootstrap Controlplane Instance: In this stage the controlplane EC2 which was launched earlier, gets bootstrapped where all the needed packages and Kubernetes gets installed on the instance. An ansible role has been created to perform the bootstrap steps. These are the steps which happen in this stage:

- The inventory file which was uploaded earlier is pulled from the S3 bucket into a local folder within the pipeline

- The Ansible role is executed, which installs all the necessary packages and starts the Kubernetes service on the controlplane node

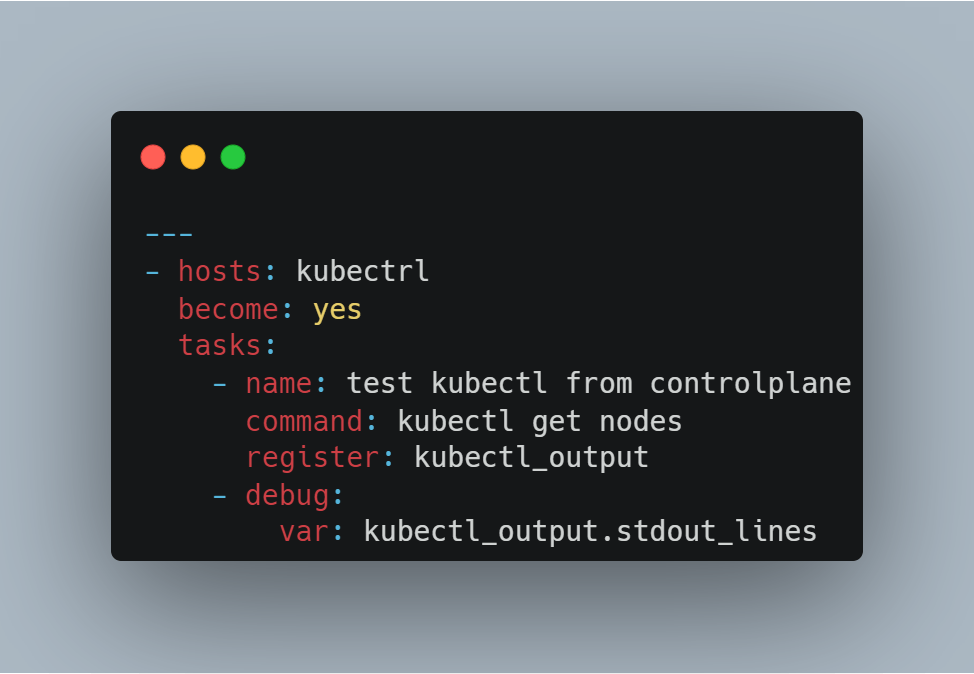

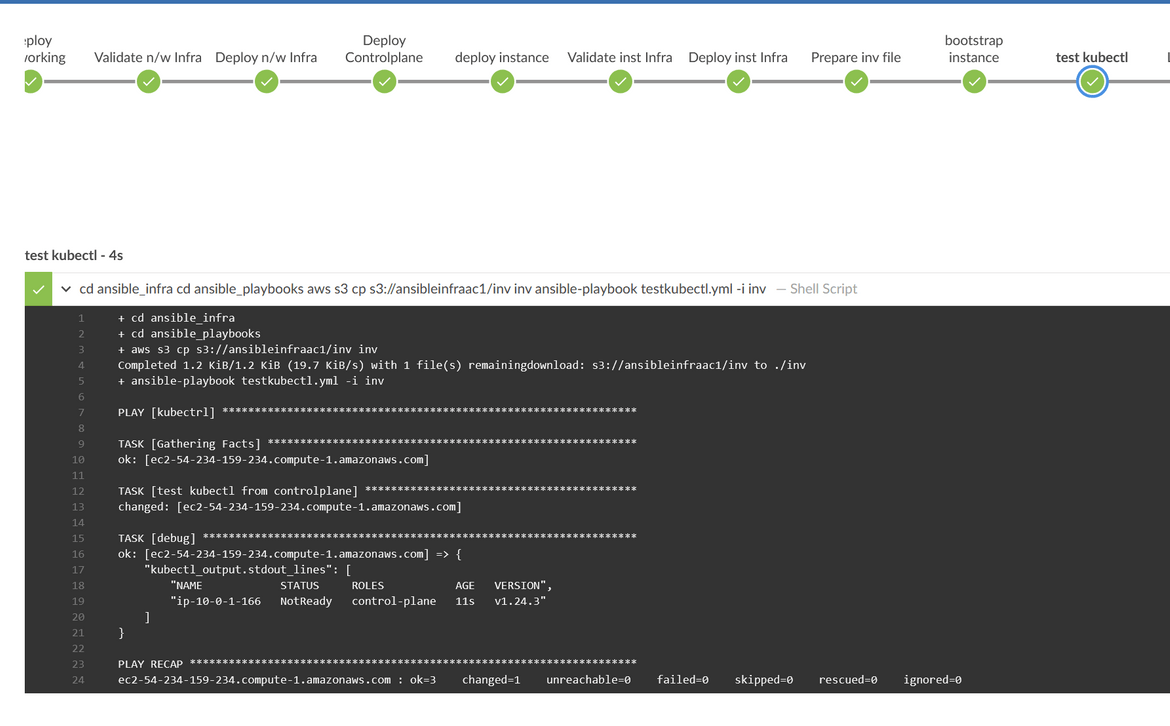

After the Kubernetes service starts, a test step also runs in this stage to ensure that Kubernetes started properly on the controlplane EC2 instance. The kubectl test step is performed using Ansible as well. During the test step, the same inventory file is downloaded from the S3 bucket and used by Ansible to connect to the EC2 instance.
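Put together, the stage roughly amounts to the following. This is a sketch under assumptions, not the pipeline's actual code: `bootstrap_controlplane`, the `inventory.ini` object name and the `controlplane` host group are hypothetical, and the playbook path is passed in by the caller. It also assumes kubectl is already configured for the SSH user on the controlplane.

```shell
# Sketch only: fetch the inventory, run the bootstrap playbook, then verify.
bootstrap_controlplane() {
  local bucket="$1" playbook="$2" inventory="inventory.ini"

  # Download the inventory uploaded by the earlier stage.
  aws s3 cp "s3://$bucket/$inventory" "$inventory" || return 1

  # Run the playbook that applies the bootstrap role to the controlplane.
  ansible-playbook -i "$inventory" "$playbook" || return 1

  # Ad-hoc check: list the cluster nodes from the controlplane host itself.
  ansible controlplane -i "$inventory" -m command -a "kubectl get nodes"
}
```

Because each step returns early on failure, the test only runs against a controlplane that was bootstrapped successfully.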

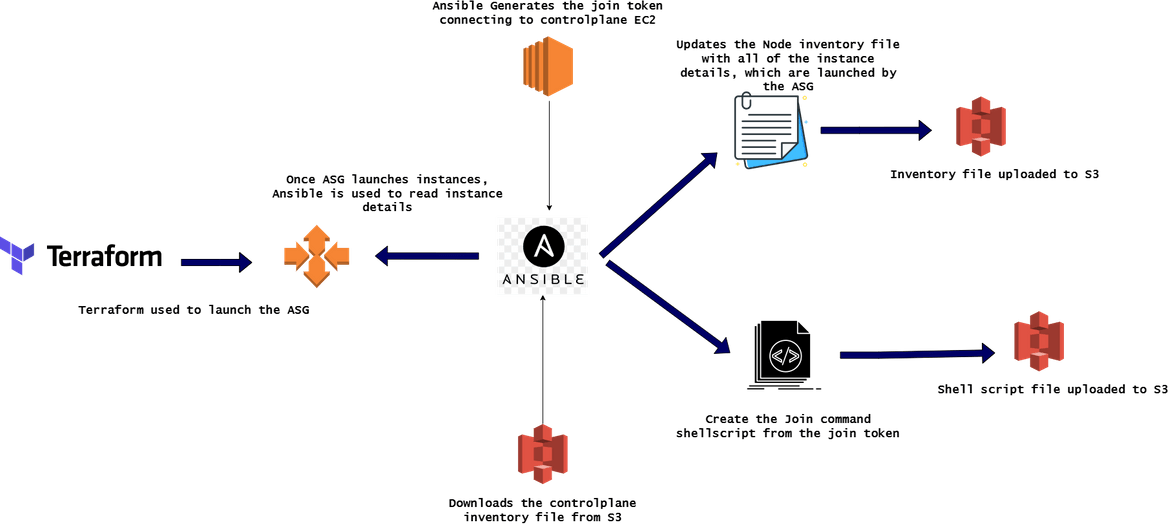

- Launch Auto Scaling Group for Nodes: Now that we have the controlplane up and running, in this stage we will launch the instances which will join the cluster as nodes. There are a few things happening in this stage apart from launching the ASG. This should explain the steps:

An Ansible playbook has been created to get the details of the launched instances and write them to an inventory file. That inventory file gets uploaded to an S3 bucket for further steps.

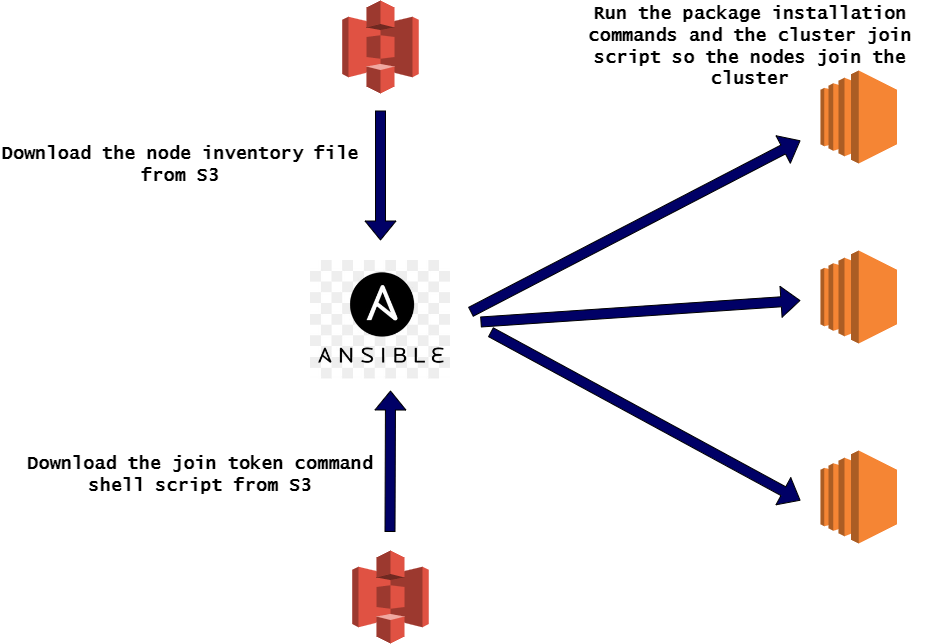

- Bootstrap Node Instances: In this stage, the node instances which were launched in the last step get bootstrapped. All the needed packages are installed on these nodes and the kubeadm join command is executed on them so they join the cluster. This is the flow which happens in this step:

To bootstrap the nodes, another Ansible playbook has been created which runs the commands. Once the nodes successfully join the cluster, a final test kubectl command is executed using an Ansible playbook. This kubectl command verifies that the nodes have successfully joined the cluster. You can check all the nodes and the controlplane running on the console.
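A check of this kind is simple to express in shell. The sketch below counts the nodes that report `Ready` and compares that with the number you expect; the function names are hypothetical, and it assumes kubectl is pointed at the new cluster.

```shell
# Sketch only: count nodes whose STATUS column is exactly "Ready".
ready_node_count() {
  kubectl get nodes --no-headers |
    awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Succeed only when at least the expected number of nodes are Ready.
cluster_has_nodes() {
  local expected="$1" ready
  ready="$(ready_node_count)"
  echo "$ready of $expected expected nodes are Ready"
  [ "$ready" -ge "$expected" ]
}
```

With one controlplane and two nodes, `cluster_has_nodes 3` would succeed once all three report Ready, and a non-zero exit code can be used to fail the pipeline stage.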

That completes the deployment of the cluster using EC2 instances. Once these steps complete, you should have a working cluster using the EC2 instances. Once all the changes are done, push them to the Git repo which is the source for the pipeline.

git add .

git commit -m "updated pipeline files"

git push -u origin main

To execute the steps, run the pipeline which was created. Once the pipeline finishes, the cluster will be up and running.

Note: If you are spinning up a cluster just for learning, make sure to destroy the resources which were created, or you will incur charges on AWS. Just run the destroy phase of the pipeline in my repo by setting the environment variable. It will destroy all of the resources which were created by the pipeline.
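If you would rather tear things down by hand, the idea is to destroy the Terraform modules in the reverse order of their creation. The sketch below uses the folder names from the repo layout described earlier, but the exact order is an assumption, as is the expectation that each folder has already been initialised with `terraform init`. Adjust both to your setup.

```shell
# Sketch only: destroy the EC2 cluster's Terraform modules, newest first.
destroy_cluster() {
  local dir
  # Folder names follow the repo layout; the order is an assumption.
  for dir in node_asg instances networking ansible_infra; do
    echo "destroying $dir"
    # Stop at the first failure so later modules are not left half-removed.
    terraform -chdir="$dir" destroy -input=false -auto-approve || return 1
  done
}
```

Destroying in reverse order matters because the instances and the Auto Scaling Group depend on the VPC and subnets from the networking module.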

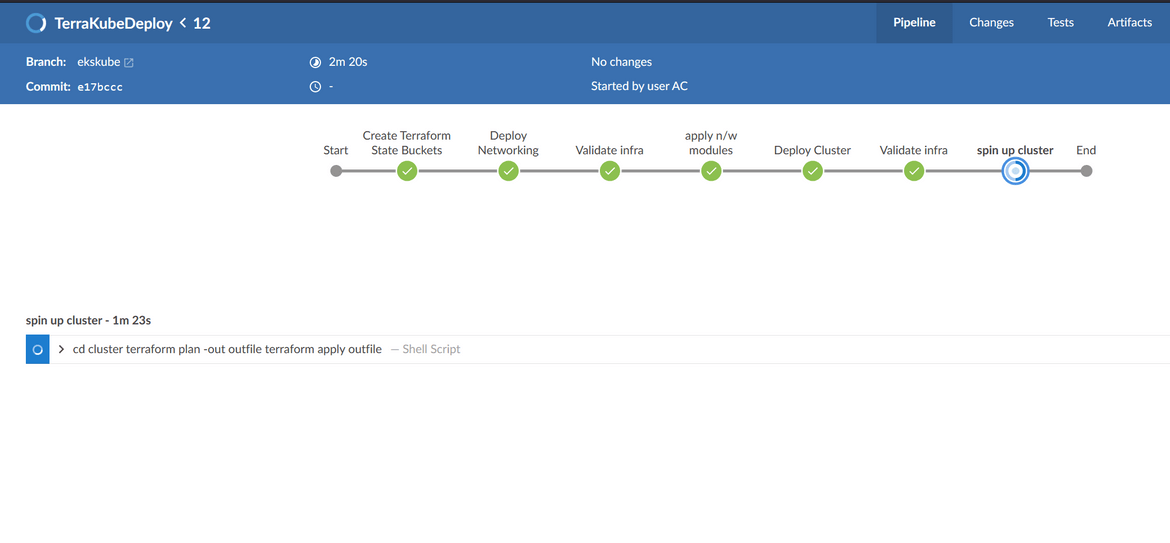

Deploy EKS Cluster

Now let's move our focus to the other process. In this process the cluster will be deployed on AWS EKS. This deployment is also orchestrated using Jenkins. Below is the overall pipeline:

Let's go through each of the stages:

- Environment Variables: The first step is to set the needed environment variables which are used by different steps in the pipeline. Here are the environment variables which are being set:

- Create S3 bucket for Terraform state: This stage is identical to the one from the EC2 process. In this stage the S3 bucket which will house the Terraform state file is created.

- Deploy Networking modules: This stage is also identical to the EC2 process. All of the networking components are deployed in this stage. Terraform is used for this stage and it follows the same steps from the EC2 process.

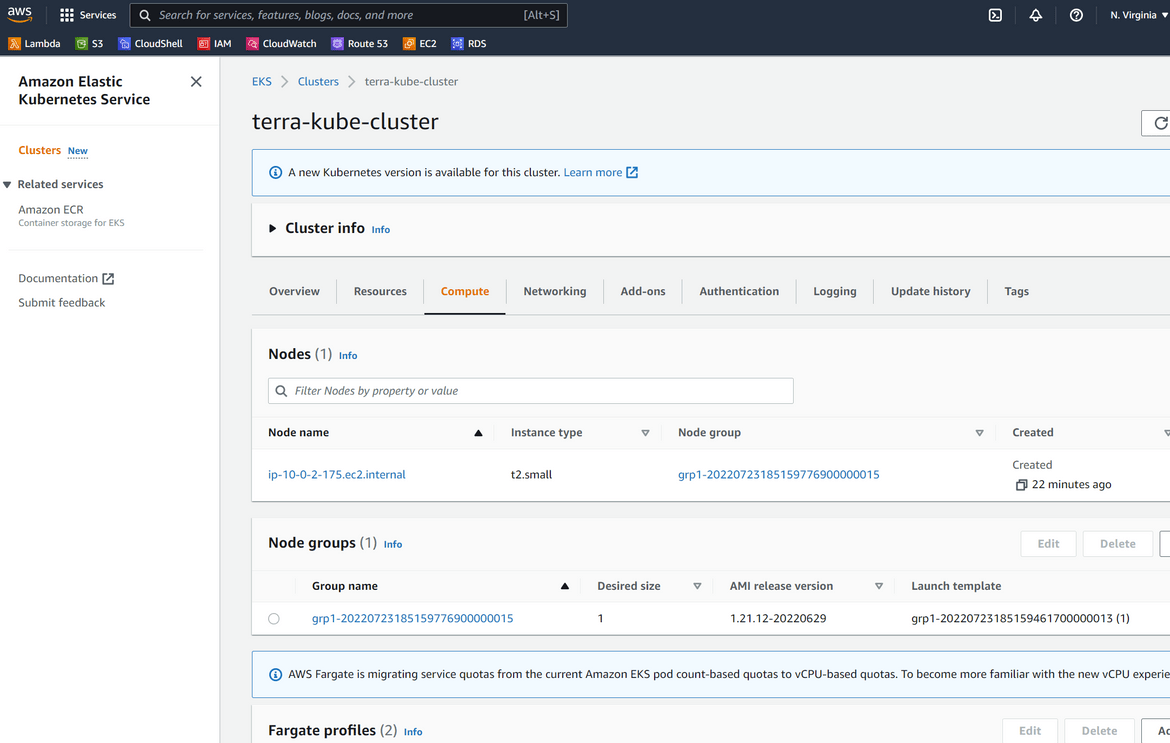

- Deploy EKS Cluster: In this stage the EKS cluster is launched. Since it is a cluster managed by AWS, we just need to declare the cluster and AWS will handle the rest. A Terraform module is created to deploy the cluster, and all of the parameters for the cluster are specified in the Terraform script. As part of the cluster deployment, the node groups where the workloads will run are also deployed. I am using Fargate, which spins up nodes as and when workloads start running on the cluster. In the Terraform module, a Fargate profile is defined which gets deployed along with the cluster. This profile makes sure that any workloads launched on the cluster with the specific labels it selects will run on Fargate instances, which launch automatically based on load.

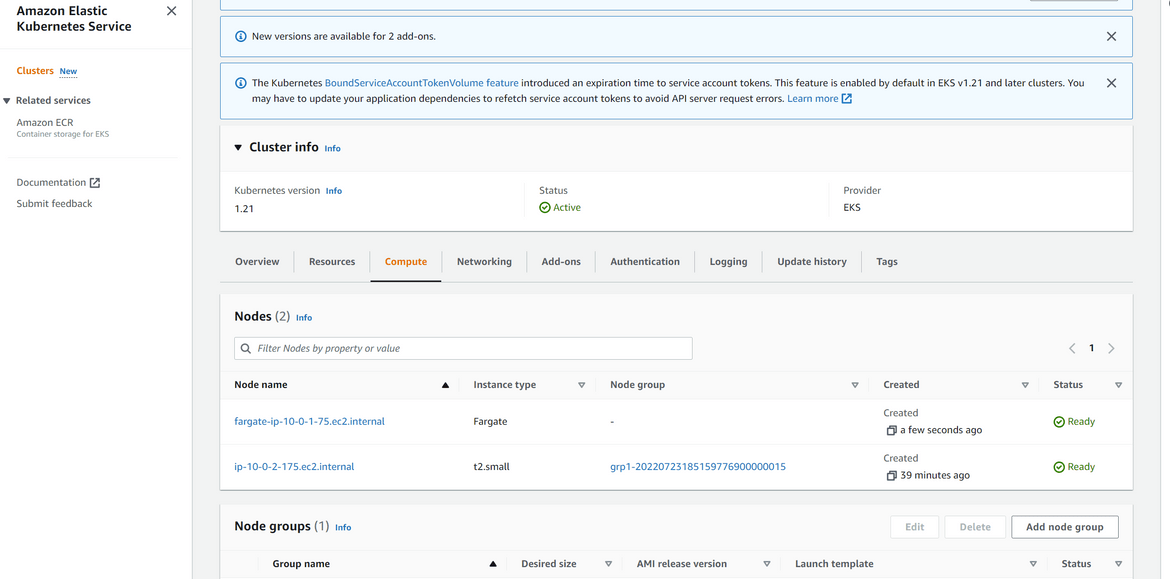

Once the cluster deploys successfully, you can view the cluster on the console.

One thing to note here is that I am also launching a non-Fargate node group so that the coredns pods can be scheduled. Running coredns pods on Fargate instances is possible, but I will not cover that in this post.

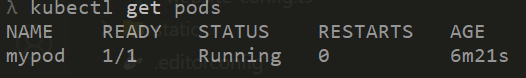

- Deploy Sample App: Since we now have the cluster up and running, to see how the Fargate instances spin up, we will deploy a sample app in this stage. It will be a simple pod with the nginx image which will be deployed to the cluster. The pod will have the specific labels that will trigger creation of the Fargate instance nodes.
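The repo deploys the pod from a YAML file in the sample_app folder. An imperative equivalent, useful for a quick experiment, could look like the sketch below. The function name, pod name, namespace and label are placeholders; use the namespace and labels that your Fargate profile selects on.

```shell
# Sketch only: launch an nginx pod carrying the label the Fargate profile
# selects on, then show where it was scheduled.
deploy_sample_pod() {
  local namespace="$1" label="$2"

  kubectl run nginx-sample --image=nginx \
    --namespace="$namespace" --labels="$label" || return 1

  # -o wide adds a NODE column, which shows the node once one is assigned.
  kubectl get pods --namespace="$namespace" -l "$label" -o wide
}
```

For example, `deploy_sample_pod fargate-demo app=nginx-fargate`. The pod may stay Pending for a short while, since the Fargate node is only created after the pod is submitted.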

Once the pods start running, the Fargate instances can be seen on the AWS console for EKS:

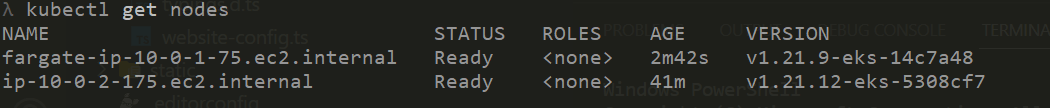

To test the nodes, run these commands from your local terminal. Make sure to configure the AWS CLI so it authenticates to the correct AWS account:

aws eks update-kubeconfig --name cluster_name

kubectl get nodes

The sample pod should also be running now on the Fargate instance node:

As the last step of this stage, a test kubectl command is executed to ensure the cluster is functioning properly and the nodes are up and running too.

- Notify on Slack: Once the cluster is completely deployed, the pipeline sends out a message to a specific Slack channel notifying the successful deployment of the cluster. The message also provides the cluster name.

That completes the deployment of the EKS cluster. Once all these script changes are done, push the changes to the Git repo so the pipeline can execute.

git add .

git commit -m "updated pipeline files"

git push -u origin main

Execute the pipeline, or let it run automatically (depending on what is configured on Jenkins). Once the execution finishes, the EKS cluster should be up and running.

Note: If you are spinning up a cluster just for learning, make sure to destroy the resources which were created, or you will incur charges on AWS. Just run the destroy phase of the pipeline in my repo by setting the environment variable. It will destroy all of the resources which were created by the pipeline.

Improvements

The steps explained in this post are a very basic way to spin up the cluster, and an actual production setup involves many more steps. Some of the improvements to the process that I am working on are:

- Add TLS to the Kubernetes endpoints

- Enable external access to the cluster (cluster using EC2 instances)

These are just some ideas right now; there are many more things to improve before a production grade cluster can be spun up. I plan to write another post in the future for a production grade cluster.

I also wanted to point out a resource which I found very useful around Kubernetes strategies. This should definitely help you: Check This

Conclusion

In this post I explained a process to quickly spin up a basic Kubernetes cluster using two methods. You can use either of the methods to easily spin up a cluster of your own. Of course the basic cluster may not be production grade, but it will serve well for your practice. You can also make modifications to the process to launch a production grade cluster. I hope I was able to explain the process and that it helps in your learning. Let me know if you face any issues or have any questions by contacting me from the Contact page.