Chatops on AWS: Leveraging AWS Chatbot to get Glue Job notifications on Slack

Recently I started reading about ChatOps and it intrigued me enough to learn more. It fascinated me to see how easy it now is to set up alerting and get notified about operational events. While I was learning, I thought: why not explore the same on AWS? That's when I came across AWS Chatbot.

In this post I will go through the basics of the AWS managed service called ‘Chatbot’ and demonstrate how quickly you can set up an alerting mechanism to Slack that monitors an AWS Glue ETL job.

The GitHub repo for this post can be found here.

What is ChatOps

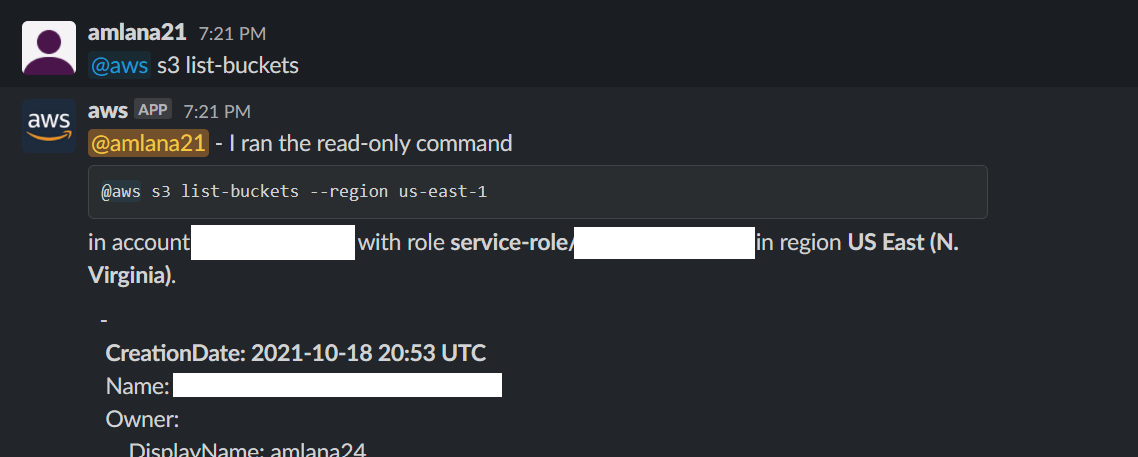

Before we move on to Chatbot, let's first understand what ChatOps is. Simply put, ChatOps is a collaboration model in which teams interact with systems and gain operational insights in a conversational manner. In a typical scenario, team members type commands into a chat application and a bot executes those commands against backend systems, so operating the system feels like chatting with a bot. It has become widely adopted because of how easily teams can interact with their systems this way. For example, if you want to list all the buckets in your AWS account, you just ask the bot in the chat and it shows you all the buckets.
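To make the idea concrete, here is a toy sketch of the dispatch step a ChatOps bot performs: parse a chat message addressed to the bot and route it to a handler. This is not how AWS Chatbot is implemented; the command registry and the canned bucket list are hypothetical, and a real handler would call AWS APIs instead.

```python
import shlex
from typing import Callable, Dict, List

# A handler receives the remaining arguments and returns the reply text.
Handler = Callable[[List[str]], str]


def list_buckets(args: List[str]) -> str:
    # Hypothetical placeholder: a real bot would query S3 here.
    return "bucket-a, bucket-b"


# Hypothetical registry mapping "<service> <operation>" to a handler.
COMMANDS: Dict[str, Handler] = {
    "s3 list-buckets": list_buckets,
}


def handle_chat_message(message: str, bot_name: str = "@aws") -> str:
    """Route a chat message such as '@aws s3 list-buckets' to its handler."""
    tokens = shlex.split(message)
    if not tokens or tokens[0] != bot_name:
        return ""  # not addressed to the bot, so stay silent
    if len(tokens) < 3:
        return "Usage: @aws <service> <operation> [args]"
    key = f"{tokens[1]} {tokens[2]}"
    handler = COMMANDS.get(key)
    if handler is None:
        return f"Unknown command: {key}"
    return handler(tokens[3:])
```

For example, `handle_chat_message("@aws s3 list-buckets")` returns the (canned) bucket list, while ordinary conversation in the channel is ignored.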

What is AWS Chatbot

We saw above how much easier operational tasks become with ChatOps. Of course, we could build our own bot and host it as an application to handle the ChatOps behavior. But AWS makes ChatOps simple with a managed service called ‘AWS Chatbot’. The name is a bit misleading, though: yes, it is a chatbot, but for a very specific use case, which is handling operational tasks on AWS. Using this service, teams can monitor and respond to operational events on AWS, and they can also run AWS CLI commands right from the collaboration application. At the time of writing, it supports two chat applications:

- Slack

- Amazon Chime

If teams use either of these for collaboration, they can easily set up monitoring and alerts on AWS using Chatbot.

How Does it work

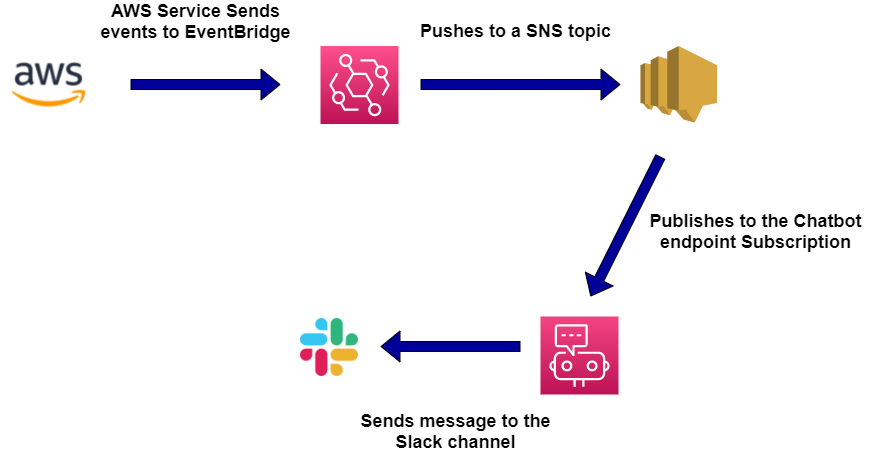

In short, AWS Chatbot uses Amazon Simple Notification Service (SNS) to send alerts to chat rooms such as Slack channels. The diagram shows at a high level how the alerting mechanism works.

We will walk through the flow in detail with an example.

Pre-Requisites

Before we move further: if you want to follow along and set up your own Chatbot, you will need the following:

- An AWS account. You can register for the free tier

- A Slack workspace with admin access to it. A free Slack account can be registered here

- Terraform installed

- Python installed

- A system or server with Jenkins to run the pipeline

What am I building

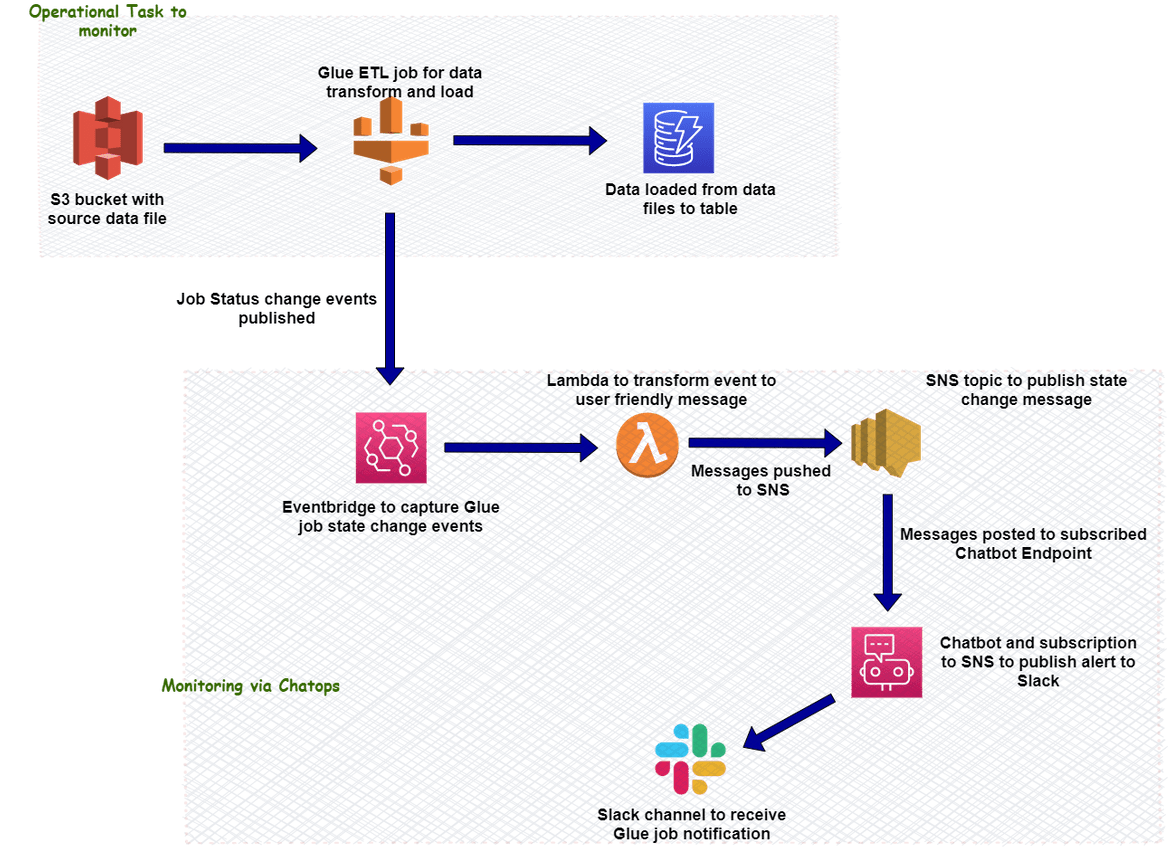

To explain how AWS Chatbot works and how to set it up, I have built a simple process to demonstrate it. The architecture diagram shows the whole process and its components. It is a simple data transfer ETL process that loads data from a data file in an S3 bucket into a DynamoDB table. The ETL part is handled by a Glue job, which also transforms the data.

Here are the components involved:

S3 Bucket and DynamoDB: These serve as the source and destination of the data load process. The S3 bucket holds the raw source data file, and DynamoDB is the destination into which the transformed data is loaded.

Glue Job: This is the ETL job that reads the data file from the S3 bucket, transforms the data and loads it into the DynamoDB table. Separate Glue Data Catalog tables are created for the source and the destination.

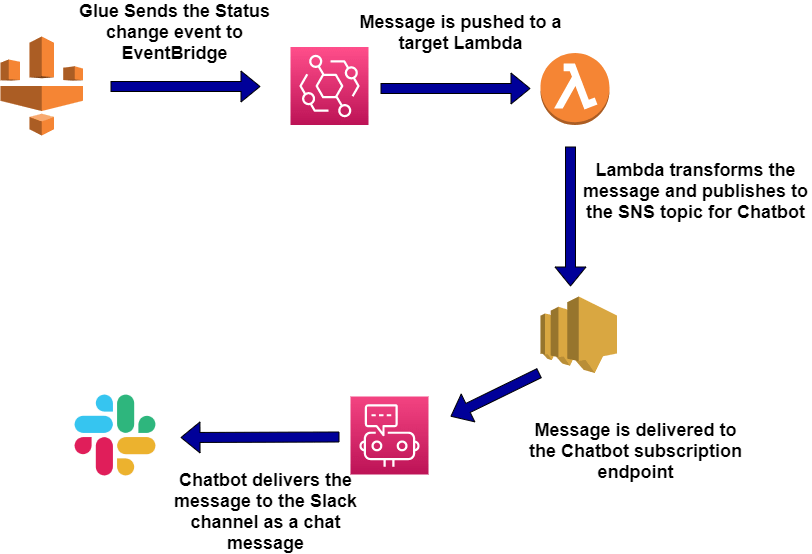

EventBridge Rule: This rule captures Glue job state change events. It filters for those events and forwards them to a Lambda target for further processing; the Lambda then sends the status on to the SNS topic.
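As a sketch of what such a rule can filter on, the pattern below matches Glue job state change events for the terminal states `SUCCEEDED`, `FAILED`, `TIMEOUT` and `STOPPED`; which states you include is an assumption to adjust. The `matches` helper is a hypothetical, deliberately simplified local check of exact-value matching only; real EventBridge patterns support more operators (prefix, anything-but, numeric and so on), so test against the service itself before relying on it.

```python
import json

# Event pattern for the EventBridge rule (states chosen as an assumption).
GLUE_JOB_STATE_PATTERN = {
    "source": ["aws.glue"],
    "detail-type": ["Glue Job State Change"],
    "detail": {
        "state": ["SUCCEEDED", "FAILED", "TIMEOUT", "STOPPED"],
    },
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified exact-value matching: nested dicts and lists of allowed values only."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Recurse into nested objects such as "detail".
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


if __name__ == "__main__":
    # The JSON you would paste into the rule's event pattern editor.
    print(json.dumps(GLUE_JOB_STATE_PATTERN, indent=2))
```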

Transformation Lambda: This Lambda takes the input event from EventBridge and transforms it into a user-friendly message, so team members see an understandable status in the Slack channel. The transformed message includes the job name and, if the job fails, the error message.
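The transformation step can be sketched as a pure function over the event. This is not the Lambda from the repo; it assumes the Glue state change event carries `jobName`, `state`, `jobRunId` and `message` under `detail`, and the output wording is an arbitrary choice.

```python
def format_status_message(event: dict) -> str:
    """Turn a Glue Job State Change event into a readable one-line status."""
    detail = event.get("detail", {})
    job_name = detail.get("jobName", "<unknown job>")
    state = detail.get("state", "UNKNOWN")
    run_id = detail.get("jobRunId", "n/a")
    text = f"Glue job '{job_name}' finished with state {state} (run {run_id})."
    # Only append the error text when the run did not succeed.
    if state != "SUCCEEDED":
        error = detail.get("message")
        if error:
            text += f" Error: {error}"
    return text
```

Keeping the formatting separate from the publishing call makes it easy to unit test without any AWS access.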

SNS Topic: This SNS topic is the delivery mechanism for the status message. The Lambda publishes the status message to this topic, which has a subscription for the Chatbot endpoint where the message gets delivered.
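AWS Chatbot does not necessarily render an arbitrary plain-text SNS message. One option, assuming your Chatbot setup supports its custom notification format (a later addition to the service), is to wrap the status text in that envelope before publishing. The field names below reflect that schema as we understand it; verify them against the current AWS Chatbot documentation.

```python
import json


def build_chatbot_payload(title: str, description: str) -> str:
    """Wrap a status message in the AWS Chatbot custom-notification envelope (assumed schema)."""
    payload = {
        "version": "1.0",
        "source": "custom",  # marks this as a custom (non-AWS-service) notification
        "content": {
            "textType": "client-markdown",
            "title": title,
            "description": description,
        },
    }
    return json.dumps(payload)
```

The returned string would be passed as the `Message` when the Lambda publishes to the topic.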

AWS Chatbot: The Chatbot service is configured to communicate with Slack and is subscribed to the SNS topic, so messages published to the topic are delivered to Chatbot. Chatbot then delivers each message to the Slack channel.

Slack Channel: The final component is the Slack channel, which receives the status message and shows its details as a chat message. The channel is a private channel configured in the workspace, and all members with access to it can view the status messages.

The image shows how the status message from the Glue job lands in the Slack channel.

This should give you a general idea of the demo process, which I will set up next to demonstrate AWS Chatbot.

How am I building

Now let's set up the architecture we went through in the earlier section. I will provide a high-level, step-by-step process that should help you set up the same on your own. The setup has two parts:

Infrastructure: Here we deploy the infrastructure components needed to run the whole data flow and the alerting mechanism. The components deployed in this part are:

- S3 Bucket for the source file

- DynamoDB table for destination load

- The Lambda for data transformation and the role which will be assumed by this Lambda

- SNS topic for Chatbot

- EventBridge rule to capture the Glue job state change event

All of these components are deployed using Terraform scripts, which can be found in the GitHub repo.

Glue Job and Alerting setup: Here we set up the Glue ETL job and the Chatbot configuration that handles the alerting. The components deployed as part of this are:

- Glue Catalog and ETL job

- Chatbot Setup

- Slack channel setup

Let's move on to deploying these parts.

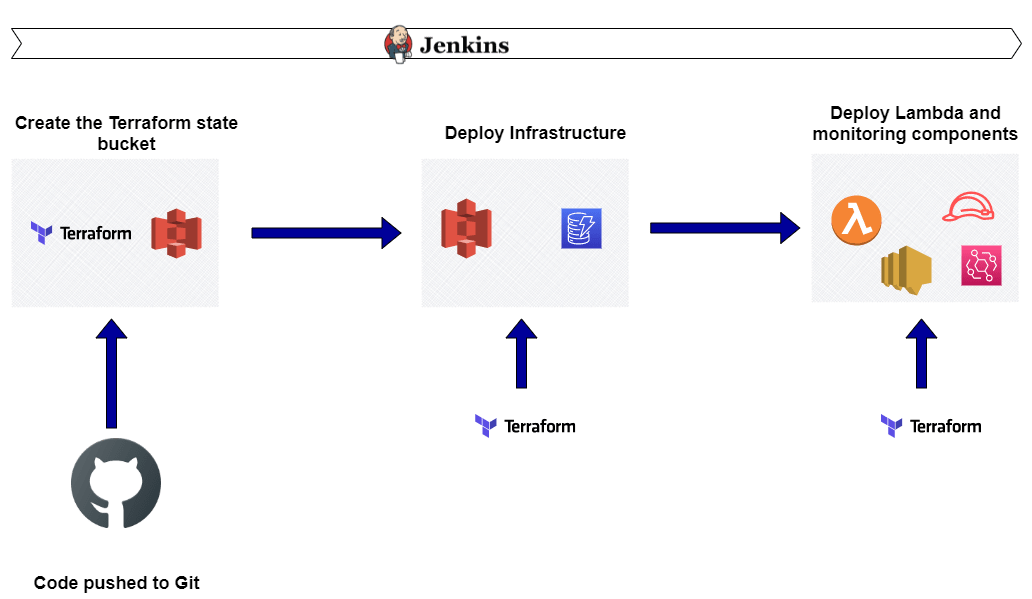

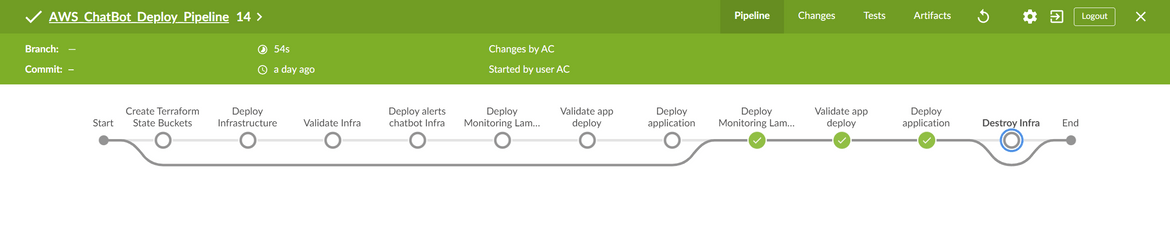

Deploy Infrastructure

To deploy the infrastructure, I have created a Jenkinsfile that runs a Jenkins pipeline to deploy each component. Each component is defined as a Terraform module or resource and is deployed via Terraform in the Jenkins pipeline. Here is what the pipeline will be deploying:

The Jenkinsfile and the Terraform scripts are provided in my GitHub repo. Here are some details about each folder and the main Terraform file in the repo:

- main.tf file: This is the main Terraform script which will deploy all of the infrastructure modules. I am using an S3 bucket here to store the Terraform state.

- security-module: This folder contains the local Terraform module that deploys all the security components, for example the IAM role for the Lambda

- infra_deploy: This folder contains the Terraform module to deploy the infrastructure components like the S3 bucket, DynamoDB table etc. It also loads a sample data file to the S3 bucket

- chatbot_lambda: This folder contains the Terraform module that deploys the Lambda function used for the data transformation. Make sure to zip the files in the src folder, since Terraform uses that zip file to create or update the Lambda
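If you prefer to script the zipping step, here is a minimal sketch using Python's standard `zipfile` module. The output file name is hypothetical and must match whatever path the Terraform module in the repo expects. Files are stored relative to `src`, so the handler module sits at the root of the archive, which is where Lambda looks for it.

```python
import zipfile
from pathlib import Path
from typing import List


def zip_lambda_source(src_dir: str, zip_path: str) -> List[str]:
    """Zip every file under src_dir into zip_path, using paths relative to src_dir."""
    src = Path(src_dir)
    target = Path(zip_path).resolve()
    names: List[str] = []
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(src.rglob("*")):
            # Skip directories, and skip the archive itself if it lives under src_dir.
            if not path.is_file() or path.resolve() == target:
                continue
            arcname = path.relative_to(src).as_posix()
            zf.write(path, arcname)
            names.append(arcname)
    return names
```

For example, `zip_lambda_source("chatbot_lambda/src", "chatbot_lambda/lambda.zip")`, where the second path is an assumed name.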

To set up and deploy the components, a Jenkins pipeline needs to be created. If you want to use the Jenkinsfile I provided, there are a few preliminary steps to handle before running it:

- The Terraform modules contain variable files. Modify the variables to provide names suited to your deployment, for example the name of the S3 bucket. All of these variables need to be updated before the deployment

- The Jenkinsfile defines some environment variables that control the flow of the pipeline. Provide appropriate values as needed. Here are the variables and their explanations:

Log in to Jenkins and follow these high-level steps to set up and run the pipeline. Make sure Terraform is installed on the Jenkins system and that you have cloned the GitHub repo into your own repo:

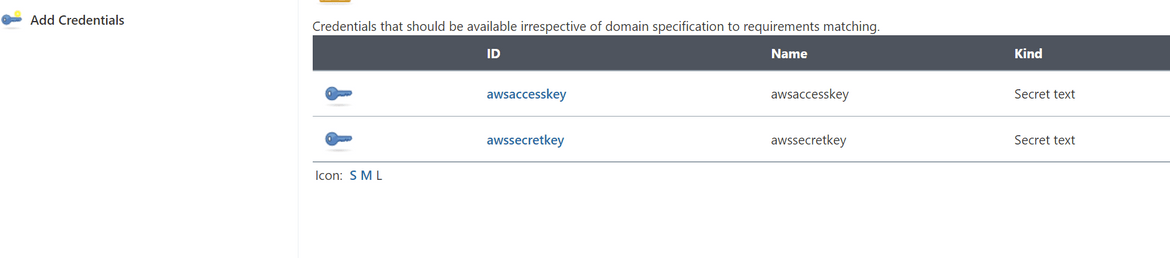

- Log in to Jenkins and set up the AWS credentials from the Manage Credentials page. The AWS keys need to be set up here since the pipeline uses them

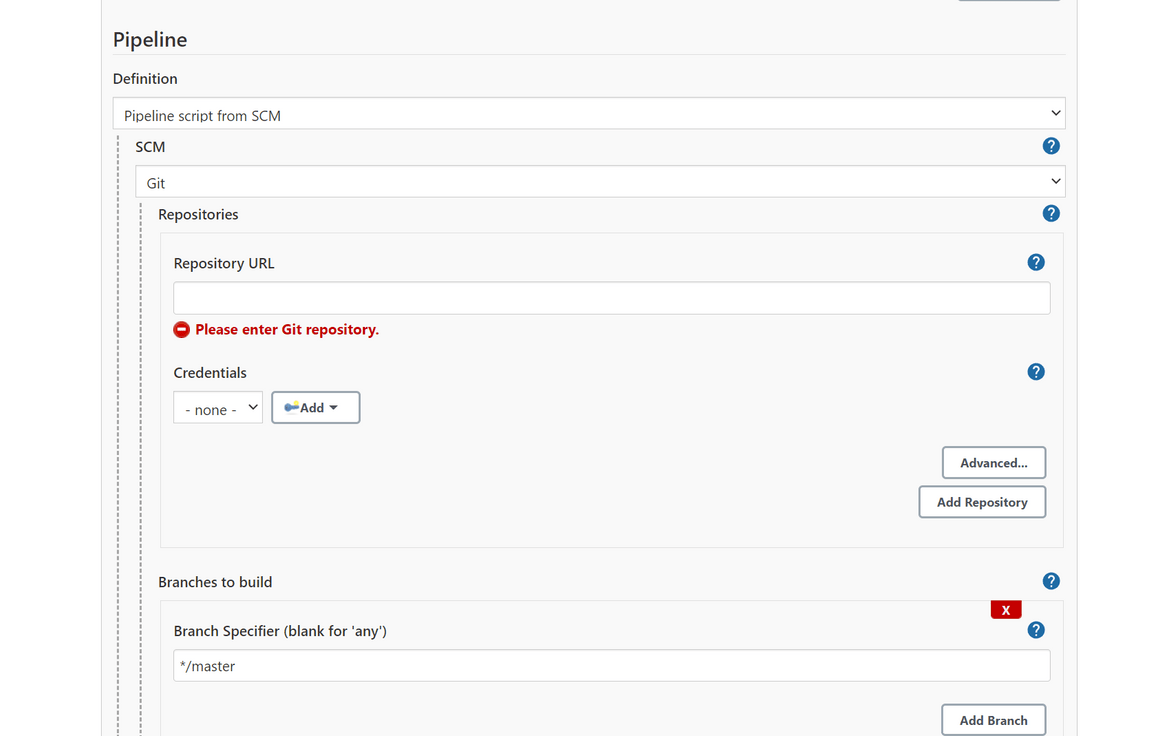

- Create a new Pipeline project and select the git repo as source for the Jenkinsfile

- Once the pipeline is defined, run it. When it completes, all the components will have been deployed on AWS

Log in to the AWS console to verify that the components are deployed.

Deploy Glue ETL Job and Alerting

Now that the basic infrastructure components are deployed, let's set up the remaining components and complete the alerting setup.

Glue Job:

I will go through each step to set up the job that loads the data from the S3 bucket into the DynamoDB table:

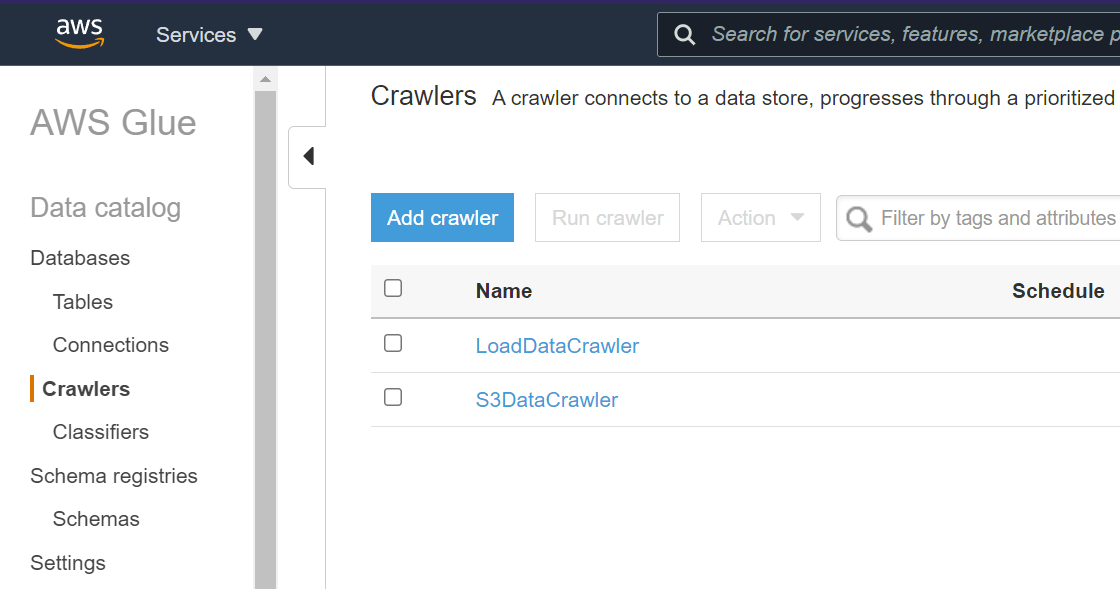

- Log in to the AWS console and navigate to AWS Glue. On the Glue service page, click Crawlers and then Add crawler.

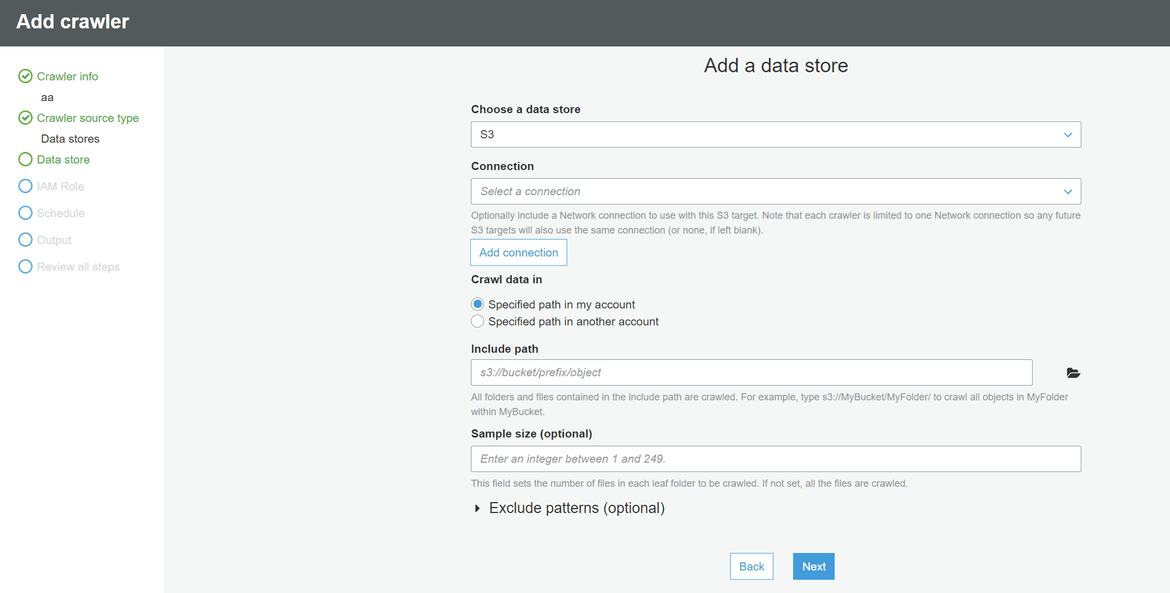

- On the crawler creation page, provide a name for the crawler and select the S3 bucket (the source S3 bucket created above that contains the data file) as the data store

- When prompted to create IAM role, select create new and provide a name

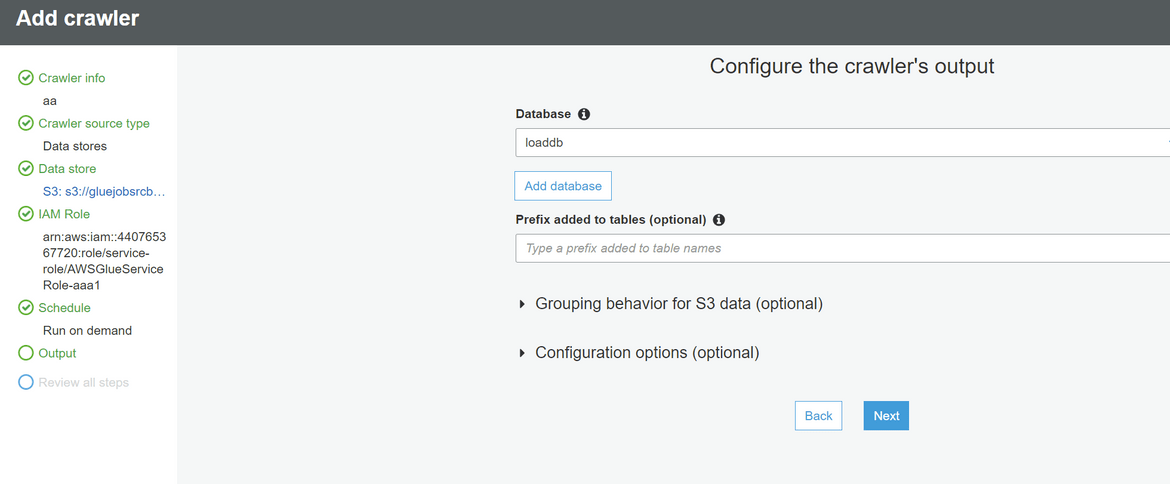

- On the Output options, provide a name for the database and the table that will hold the source data from S3. You can keep all other options at their defaults

- Finish creating the crawler

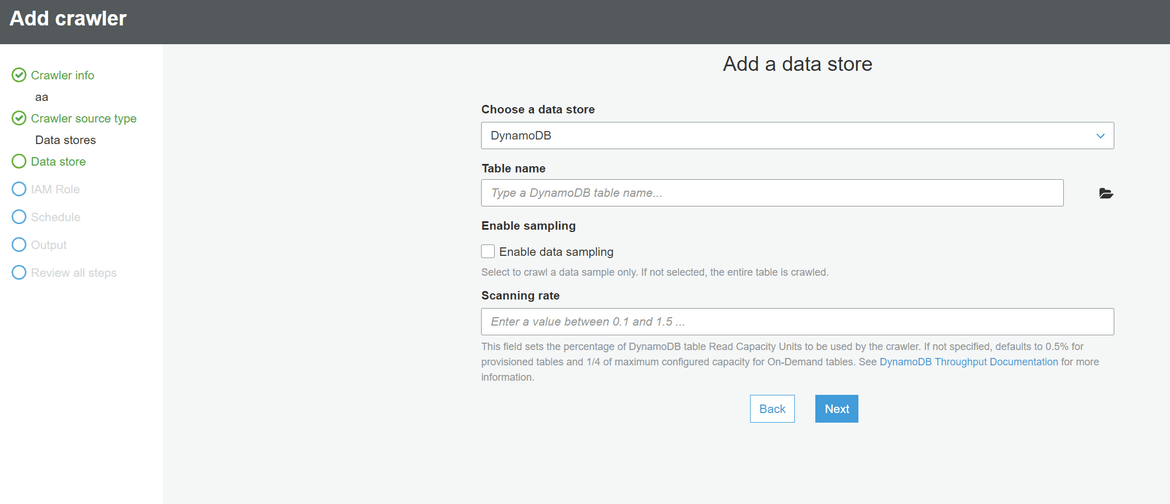

- Repeat the above steps to create a crawler for the destination DynamoDB table. Select DynamoDB as the data store and select the DynamoDB table created earlier

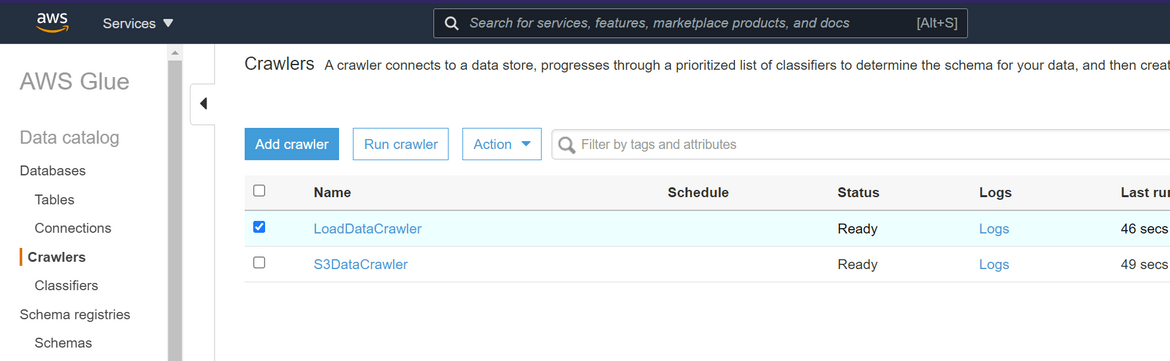

- Once both crawlers are created, select each one in turn and click ‘Run Crawler’. This runs the crawler and creates the Glue Data Catalog tables needed for the Glue ETL job

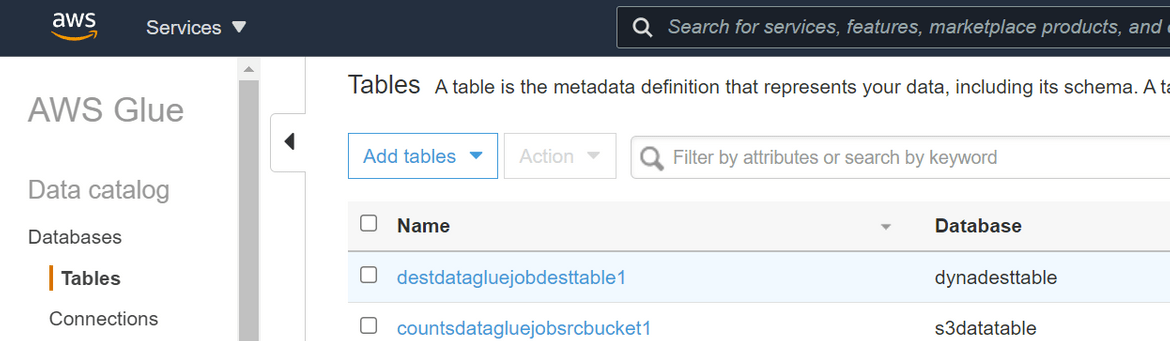

- Once the crawlers finish, navigate to the Tables section. There should be two tables created

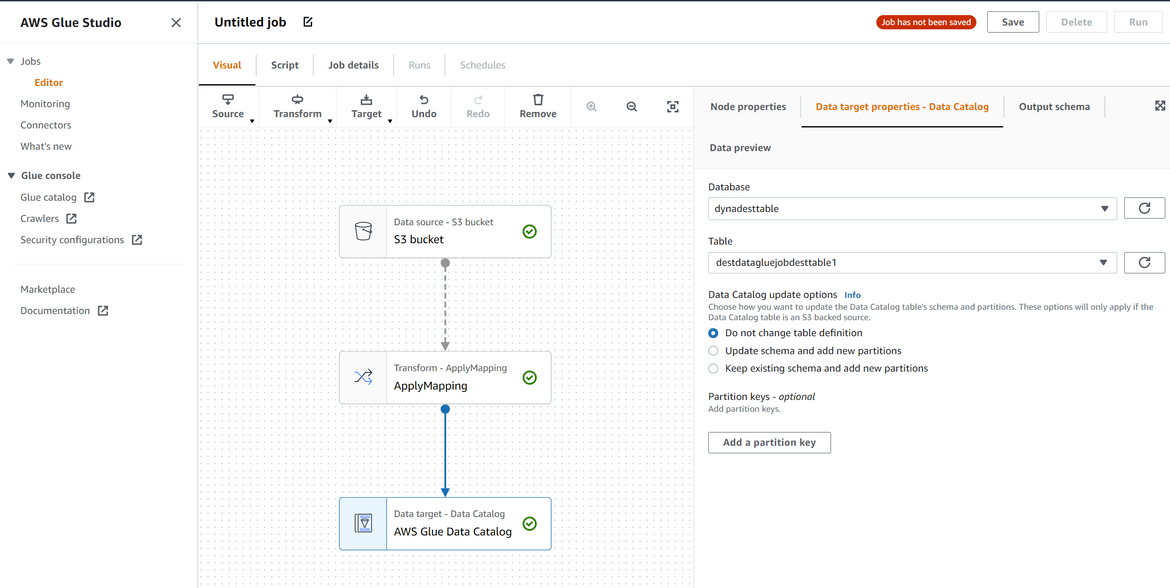

- Now, to create the job, click AWS Glue Studio. It opens Glue Studio for the visual setup of jobs. Under Jobs, click Create, which opens the visual editor for the job

- In the Source step, select the Glue catalog table created for the source. In the Transform step, select the transformations as needed. In the Target step, select the Glue catalog table created for the target DynamoDB table

- On the Job details tab, select an IAM role for Glue to use. If none exists, create an IAM role for Glue. The role needs access to the S3 bucket and the DynamoDB table.

- Once done, give the job a name and save it

That completes setting up the job.
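The console steps for the two crawlers can also be scripted. Below is a minimal sketch assuming a boto3 Glue client (`boto3.client("glue")`) is passed in; the crawler names, role ARN, database, S3 path and table name are hypothetical placeholders, and both crawlers write to one catalog database here for simplicity.

```python
from typing import Any, List


def create_and_start_crawlers(glue: Any, role_arn: str, database: str,
                              s3_path: str, dynamodb_table: str) -> List[str]:
    """Create a crawler for the S3 source and one for the DynamoDB target, then start both."""
    # Hypothetical crawler names mapped to their Glue crawler targets.
    crawlers = {
        "source-s3-crawler": {"S3Targets": [{"Path": s3_path}]},
        "target-dynamodb-crawler": {"DynamoDBTargets": [{"Path": dynamodb_table}]},
    }
    for name, targets in crawlers.items():
        glue.create_crawler(Name=name, Role=role_arn, DatabaseName=database, Targets=targets)
        glue.start_crawler(Name=name)  # populates the Data Catalog tables
    return list(crawlers)
```

Passing the client in, rather than creating it inside the function, keeps the sketch testable with a fake client.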

Chatbot Setup:

Now let's set up Chatbot. Before proceeding, make sure you have your own Slack workspace where you have admin access.

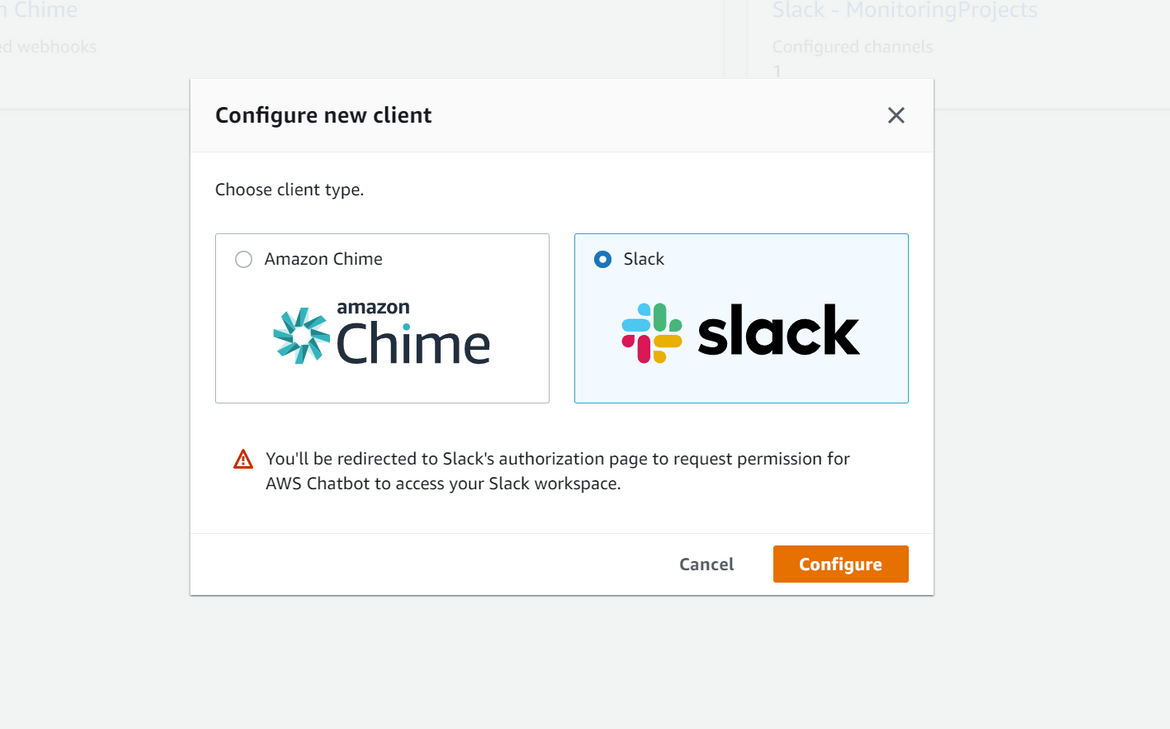

- Navigate to the Chatbot service. If this is your first time, click Slack; otherwise, click Configure new client, which offers two options. Select Slack.

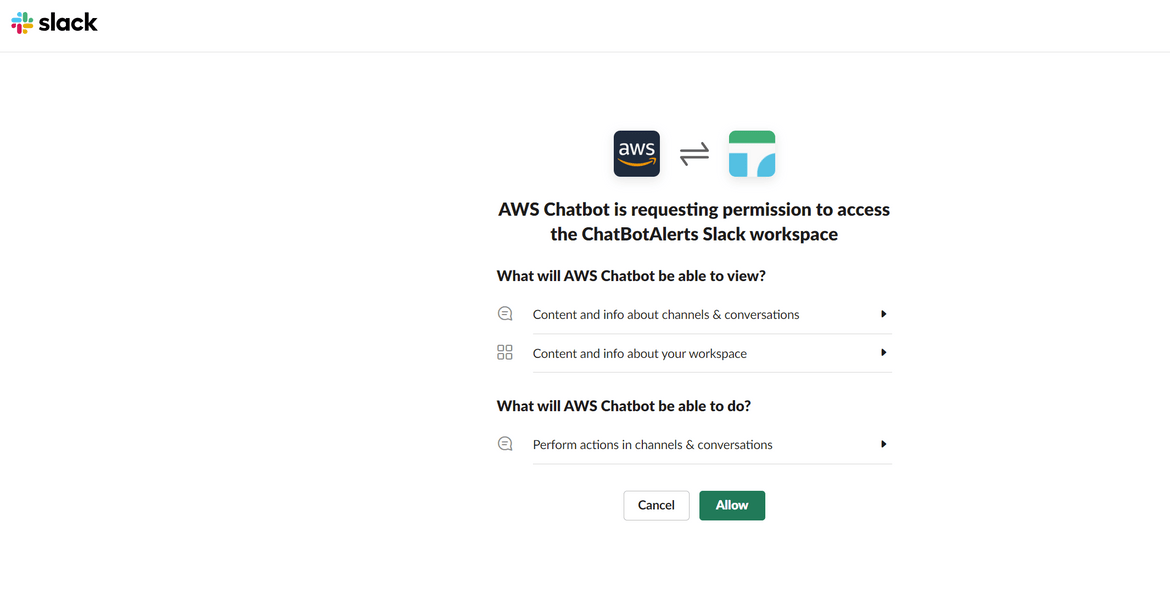

- It opens a new tab and, if you are already signed in to the Slack workspace, asks for permission to access it. Select Allow

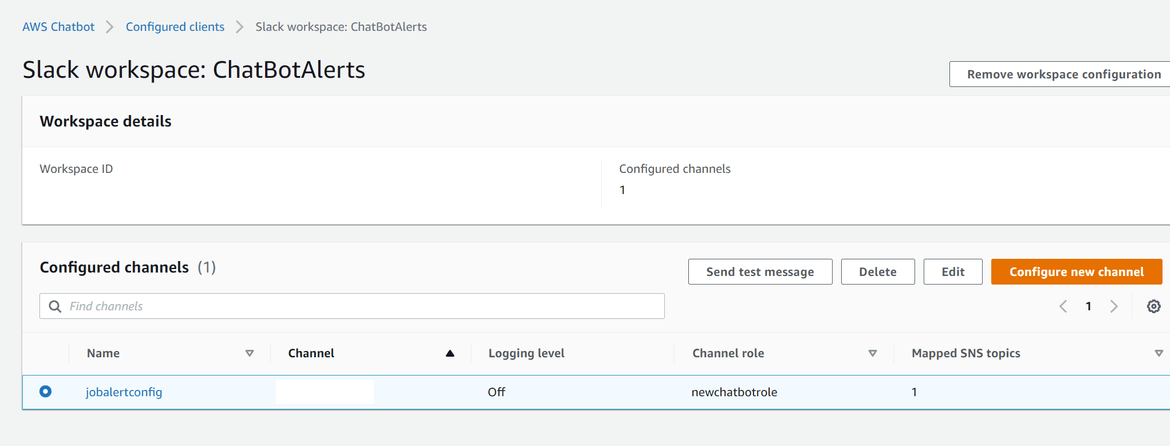

- Once done, it creates the connection and returns to the Chatbot AWS console

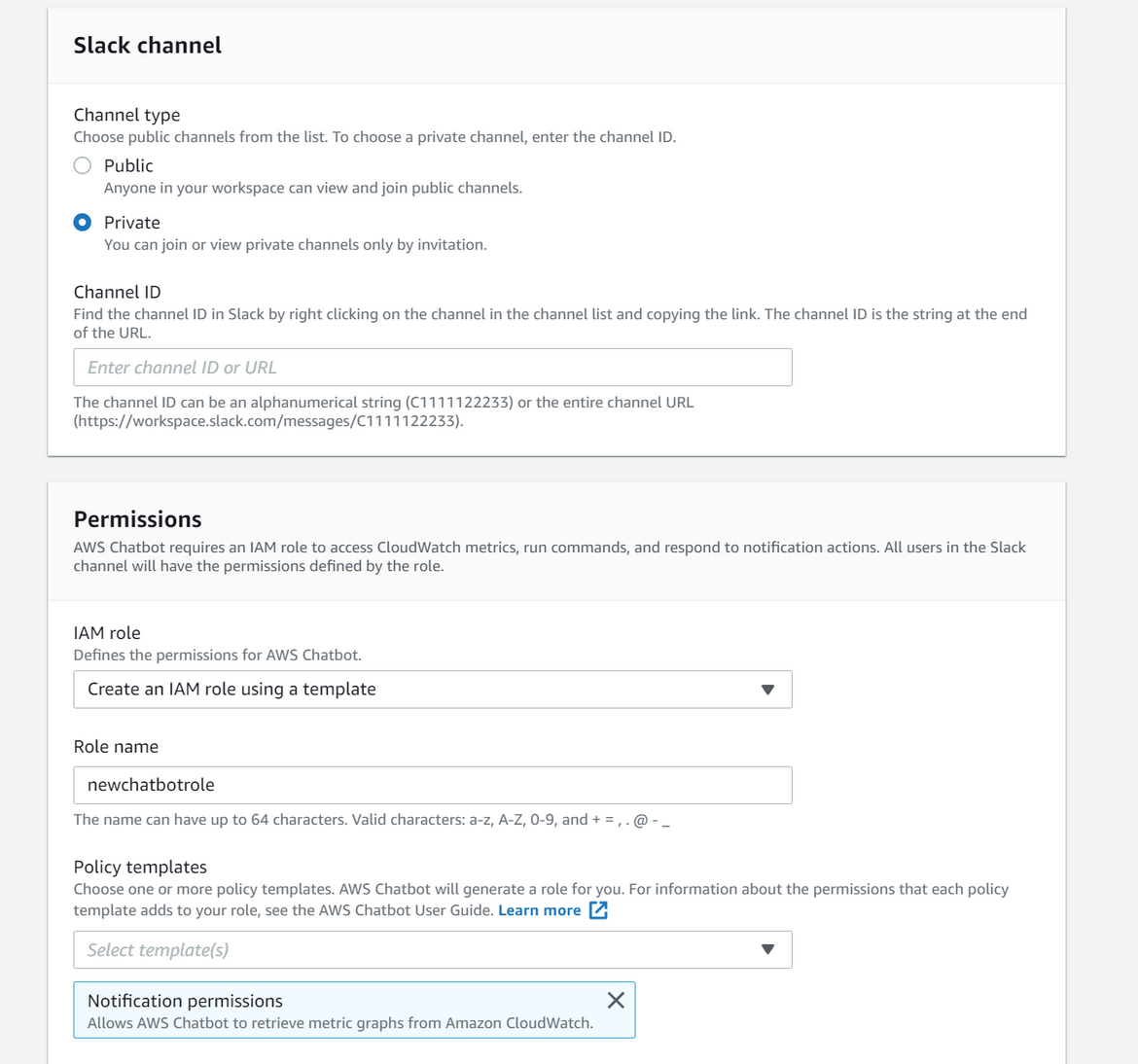

- Click Configure new channel. On the configuration page, select the public channel you want to send alerts to. If it's a private channel, select private and provide the channel ID. For permissions, choose to create a new role from a template and give it a name

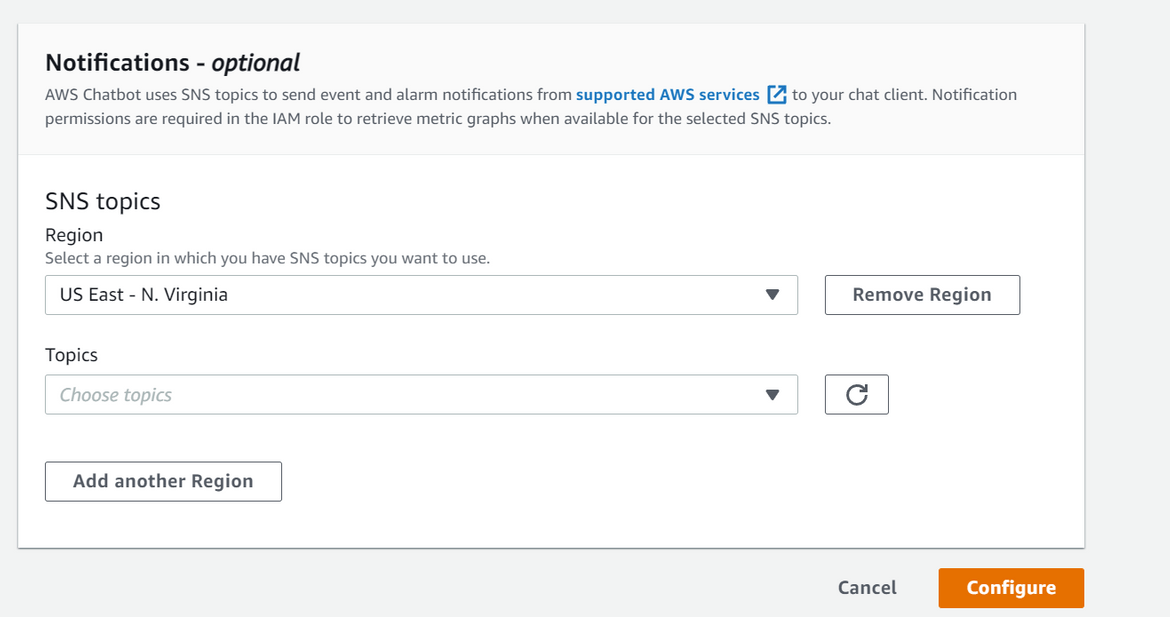

- For the SNS topic, select the SNS topic which was created earlier by the Jenkins pipeline

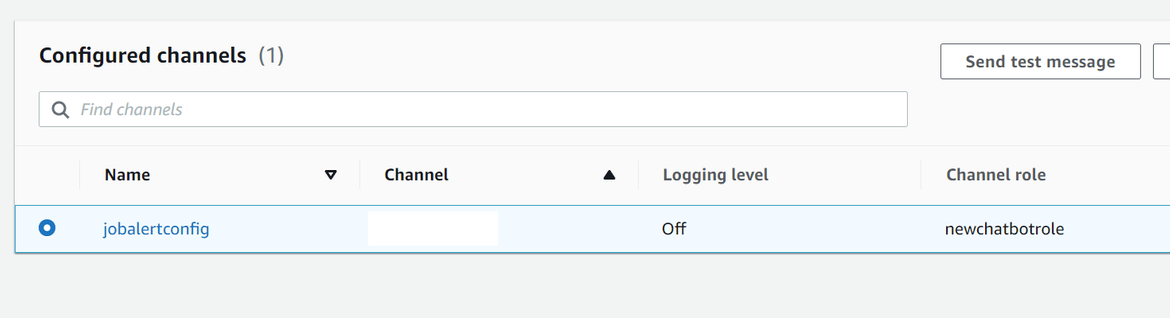

- Save the Chatbot channel settings. Now there should be one channel configured.

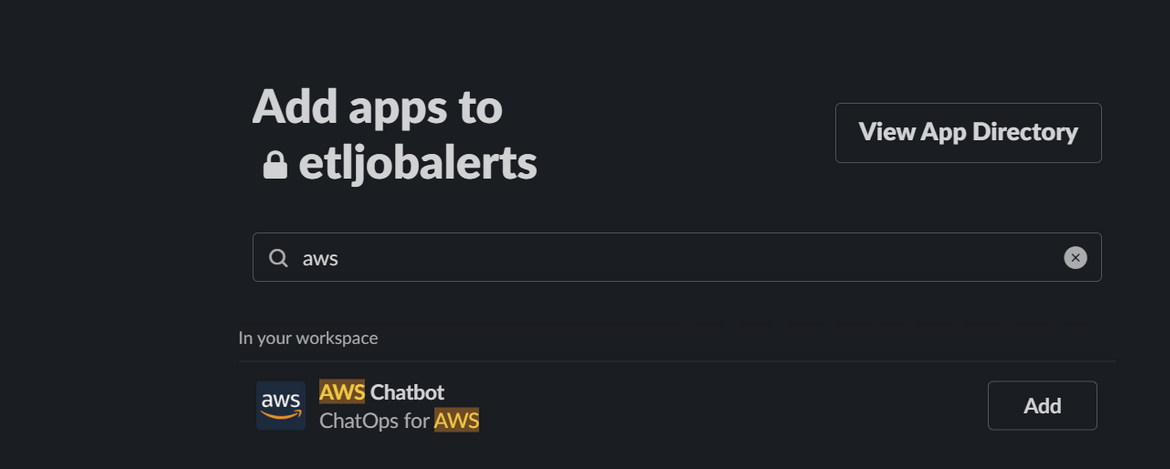

- In the Slack channel, add the AWS app to the channel

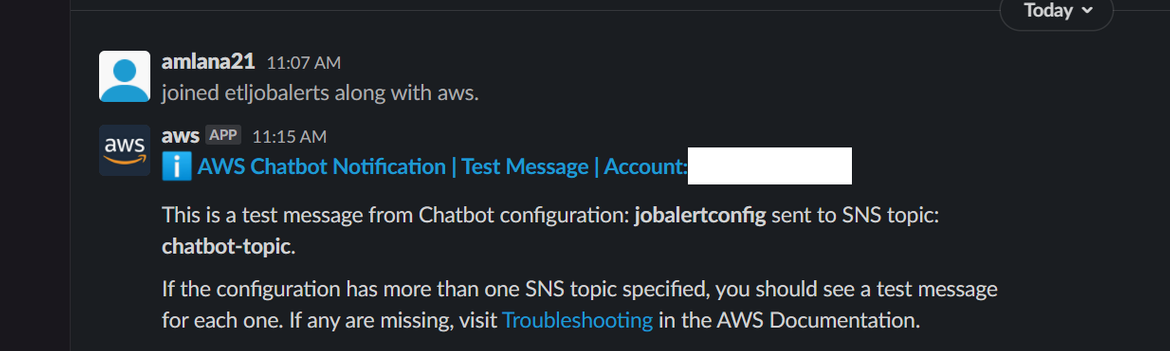

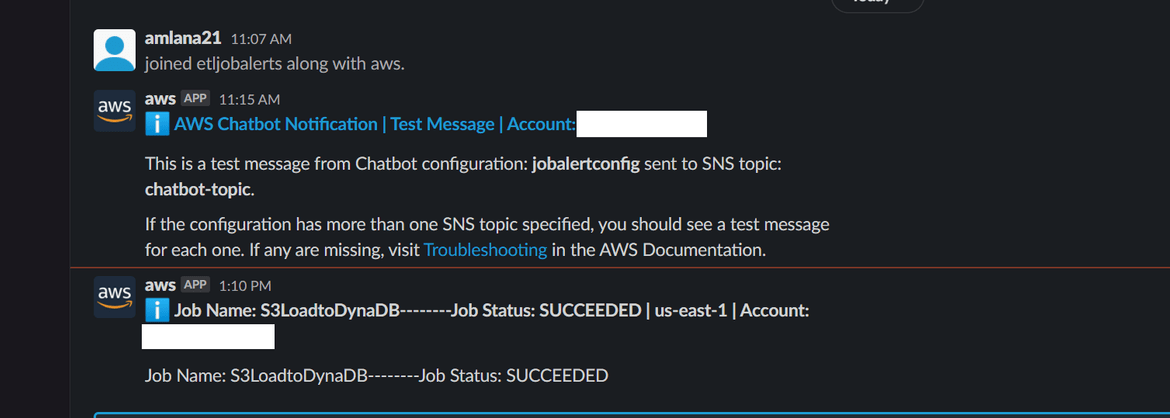

- Once done, go back to the AWS Chatbot console. On the channels page, select the channel configured above and click Send test message. It should send a test alert to the Slack channel

That completes the Chatbot setup. Messages published to that SNS topic will end up as alerts in the Slack channel. The Lambda created earlier routes the messages to the SNS topic, so make sure the SNS topic ARN is set as an environment variable on the Lambda; that is how it knows which topic to publish to.
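Here is a minimal sketch of a handler that reads the topic ARN from the environment and publishes the formatted status. It is not the Lambda from the repo: the variable name `SNS_TOPIC_ARN` and the message wording are assumptions, and the optional `sns_client` parameter exists only so the function can be tested without AWS (Lambda itself calls it with `event` and `context`). Depending on your Chatbot setup, the plain-text message may need to be wrapped in Chatbot's custom notification format to be rendered.

```python
import os
from typing import Any, Optional


def lambda_handler(event: dict, context: Any = None, sns_client: Optional[Any] = None) -> dict:
    """Publish a readable Glue job status to the SNS topic named in SNS_TOPIC_ARN."""
    topic_arn = os.environ["SNS_TOPIC_ARN"]  # hypothetical environment variable name
    if sns_client is None:
        import boto3  # bundled with the AWS Lambda Python runtime
        sns_client = boto3.client("sns")

    detail = event.get("detail", {})
    message = f"Glue job {detail.get('jobName')} is {detail.get('state')}"
    # Include the error text for runs that did not succeed.
    if detail.get("state") != "SUCCEEDED" and detail.get("message"):
        message += f": {detail['message']}"

    response = sns_client.publish(TopicArn=topic_arn, Subject="Glue job status", Message=message)
    return {"messageId": response["MessageId"], "message": message}
```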

Testing

Now that the setup is complete, let's test Chatbot and verify that the Glue job sends an alert to the Slack channel.

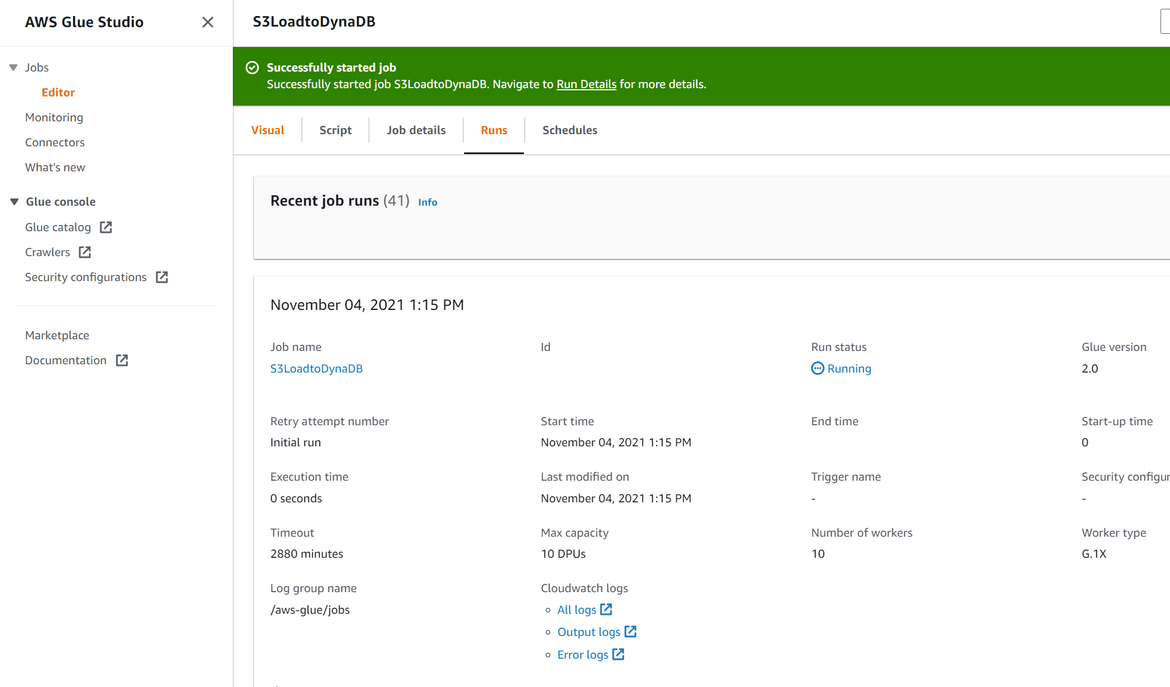

- Navigate to the Glue job which we created earlier

- Click Run. Open the run details and it will show that the job is currently running

- Once the job finishes, an alert with the job state message should pop up in the Slack channel shortly afterwards
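The same test can be triggered from a script instead of the console. This is a minimal sketch assuming a boto3 Glue client (`boto3.client("glue")`) is passed in and the job name is your own; it starts a run and polls until the run reaches a terminal state. The Slack alert itself still arrives through the EventBridge, Lambda and SNS path, so the script only mirrors what the run details page shows.

```python
import time
from typing import Any, Callable

# Glue job run states after which the run will not change any more.
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "STOPPED", "TIMEOUT", "ERROR"}


def run_job_and_wait(glue: Any, job_name: str, poll_seconds: float = 30.0,
                     max_polls: int = 240,
                     sleep: Callable[[float], None] = time.sleep) -> str:
    """Start a Glue job run and return its final state."""
    run_id = glue.start_job_run(JobName=job_name)["JobRunId"]
    for _ in range(max_polls):
        run = glue.get_job_run(JobName=job_name, RunId=run_id)["JobRun"]
        state = run["JobRunState"]
        if state in TERMINAL_STATES:
            return state
        sleep(poll_seconds)
    raise TimeoutError(f"Job run {run_id} did not finish after {max_polls} polls")
```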

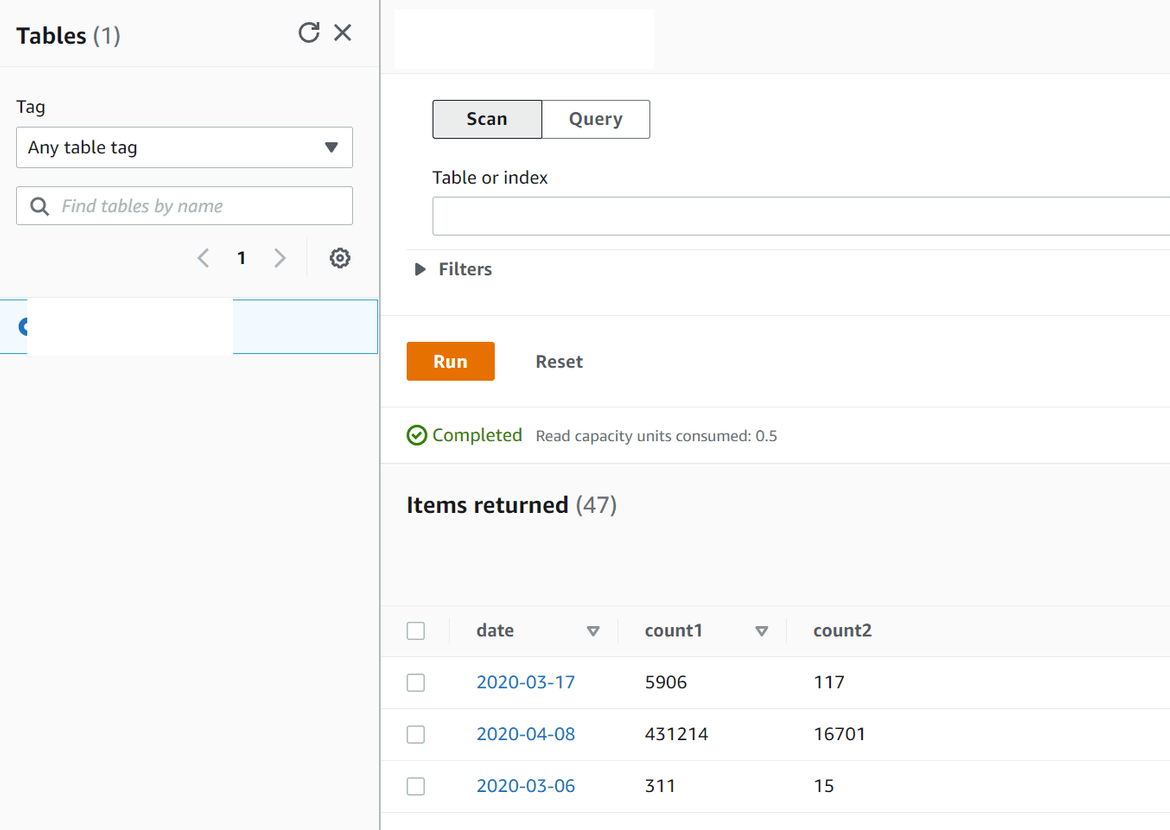

Any time the Glue job runs, including on a schedule, its status will be posted to the Slack channel as a message. You can then log in to the AWS console and check the DynamoDB table for the loaded records:

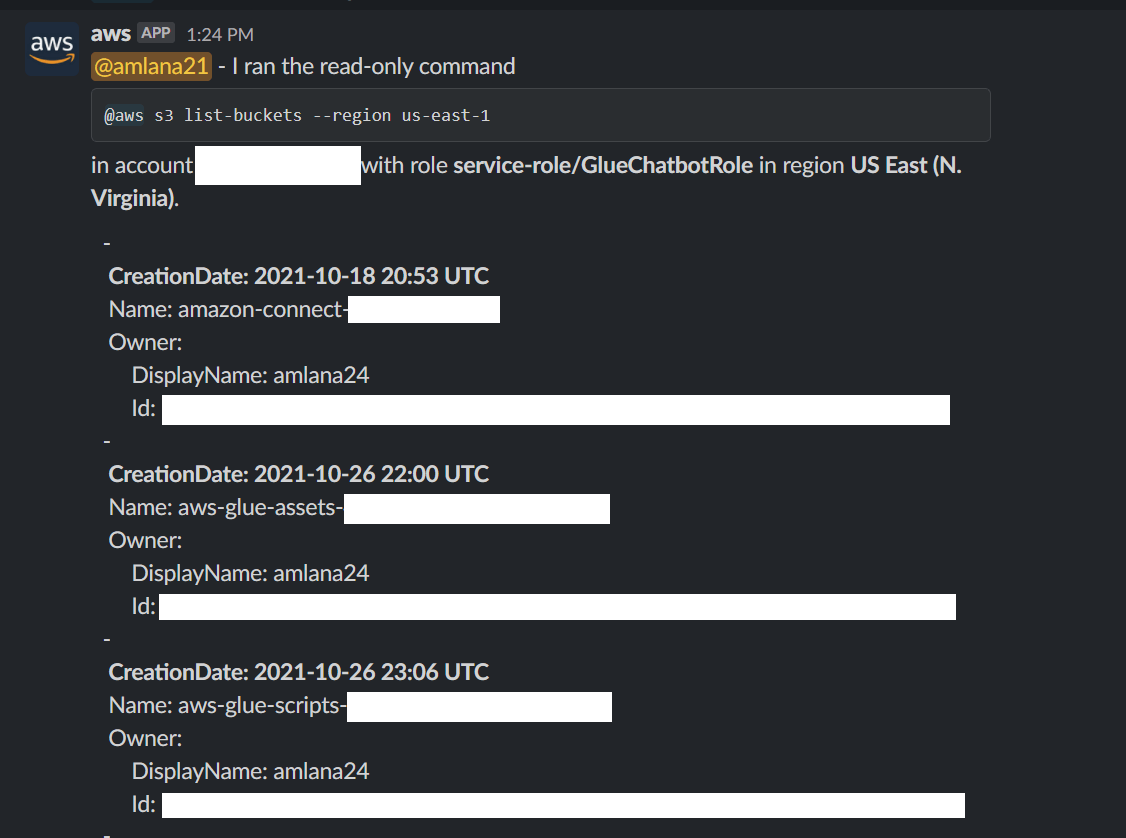
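To check the load without opening the console, a quick sanity check is to count the items in the table. Here is a minimal sketch assuming a low-level boto3 DynamoDB client (`boto3.client("dynamodb")`) is passed in; it pages through a `COUNT` scan, which reads the whole table and consumes read capacity, so it only suits small demo tables like this one.

```python
from typing import Any, Dict


def count_items(dynamodb: Any, table_name: str) -> int:
    """Count the items in a table using a paginated COUNT scan."""
    total = 0
    kwargs: Dict[str, Any] = {"TableName": table_name, "Select": "COUNT"}
    while True:
        page = dynamodb.scan(**kwargs)
        total += page["Count"]
        last_key = page.get("LastEvaluatedKey")
        if not last_key:
            return total
        # Continue the scan from where the previous page stopped.
        kwargs["ExclusiveStartKey"] = last_key
```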

Another scenario you can test here is running AWS CLI commands directly from the Slack channel. Type this command in that Slack channel and view the results returned from AWS:

@aws s3 list-buckets --region us-east-1

Conclusion

That completes this short demonstration of how Chatbot works and how to set it up. I hope it helped you understand the basics of this very useful service. ChatOps is becoming very popular, and it makes it easy for teams to monitor operational tasks. This service will help teams who use Slack or Chime as their collaboration tool, since they don't have to leave the tool to get an operational view. If you have any questions or face any issues, please reach out to me from the Contact page.