How to stream LLM responses using AWS API Gateway Websocket and Lambda

Nowadays LLMs are everywhere, and many tasks are being automated with AI models. Most of these use cases are chat based: you send a message and the LLM responds with an answer. In these scenarios it helps to stream responses back to the user as they become ready, instead of making the client wait on a single long request. This is where Websockets come into play. A Websocket provides a full-duplex communication channel over a single TCP connection, so the backend can push messages to the client at any time. In this post, I will explain how to stream LLM responses using AWS API Gateway Websockets and Lambda. We will use AWS API Gateway to create a Websocket API that carries responses from a backend LLM inference service to the client, and AWS Lambda to process the LLM responses and send them over the Websocket connection. Finally, we will automate the deployment of the infrastructure using Terraform.

The GitHub repo for this post can be found here. If you want to follow along, clone the repo and use the code files to stand up your own infrastructure.

Prerequisites

Before I start the walkthrough, there are some prerequisites which are good to have if you want to follow along or try this on your own:

- Basic AWS knowledge

- GitHub account to follow along with GitHub Actions

- An AWS account

- AWS CLI installed and configured

- Basic Terraform knowledge

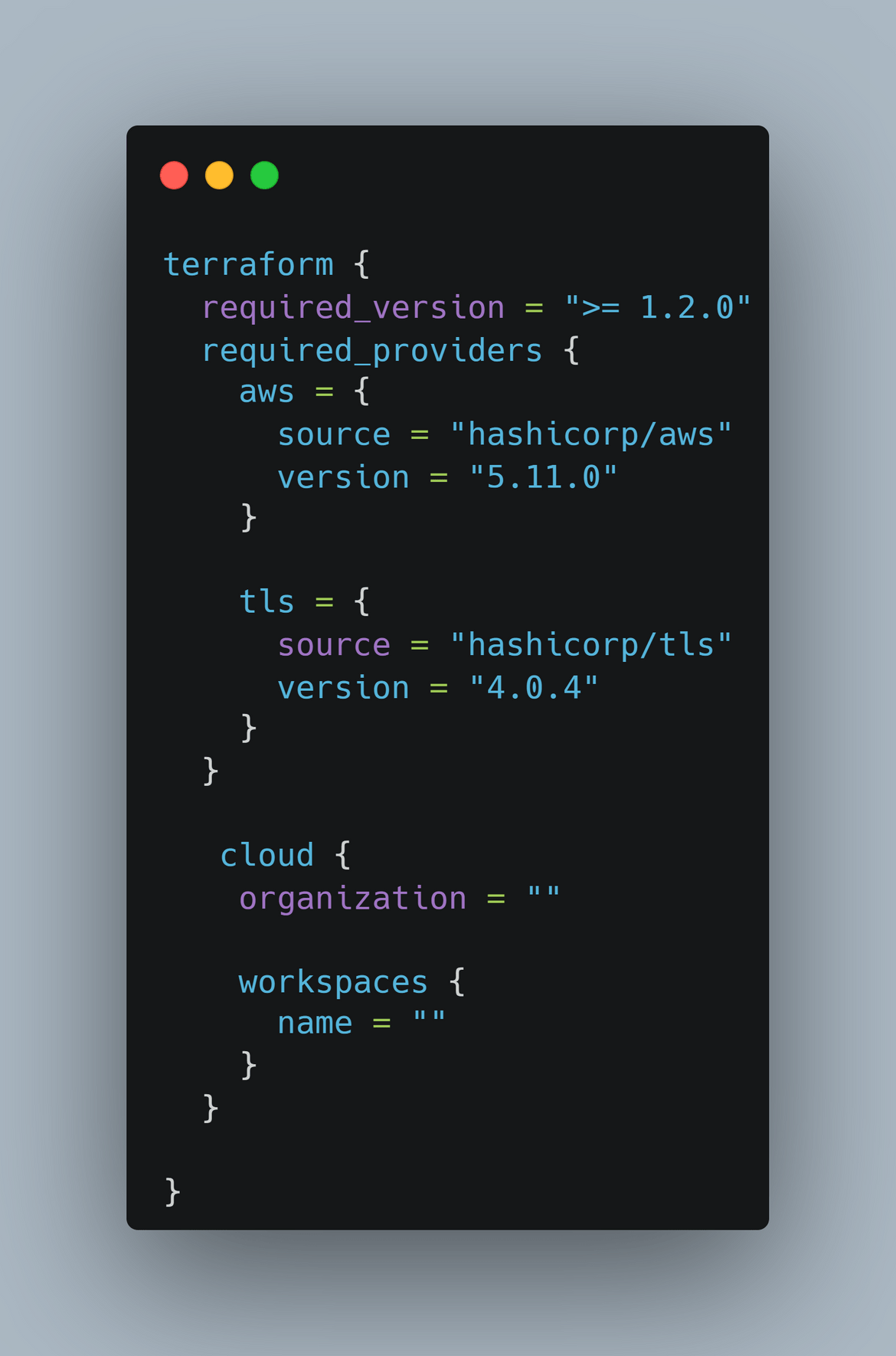

- Terraform Cloud free account. You can sign up here. It's not mandatory, but I have used it for state management in the Terraform scripts. You can use S3 as well.

With that out of the way, let's dive into the details.

What is an LLM?

LLM stands for Large Language Model. LLMs are a type of AI model that can generate human-like text. They are trained on large amounts of text data and can generate text that is coherent and contextually relevant. LLMs have a wide range of applications, from chatbots to content generation to language translation. Some popular language models include GPT-3, BERT, and T5. In this post, we will use a pre-trained model, fine-tuned for question answering, to respond to user queries. The model is hosted behind an Inference API running on ECS, and a Lambda function invokes it on behalf of an API Gateway Websocket API. The answers are streamed back to the client over the Websocket connection.

What is AWS API Gateway Websockets?

AWS API Gateway Websockets is a managed service that allows you to create real-time, two-way communication between clients and servers over a single, long-lived connection. Websockets provide a full-duplex communication channel over a single TCP connection, so messages can flow from the client to the server and from the server to the client as and when they are ready. Websockets are ideal for use cases where you need real-time communication, such as chat applications, real-time notifications, and live data feeds. In this post, we will use AWS API Gateway Websockets to create a real-time communication channel between the client and the LLM. The client connects to the Websocket API, a Lambda function forwards each question to the LLM inference service, and the responses are streamed back to the client over the same Websocket connection.

LLM Inference service used for this post & Overall functional flow

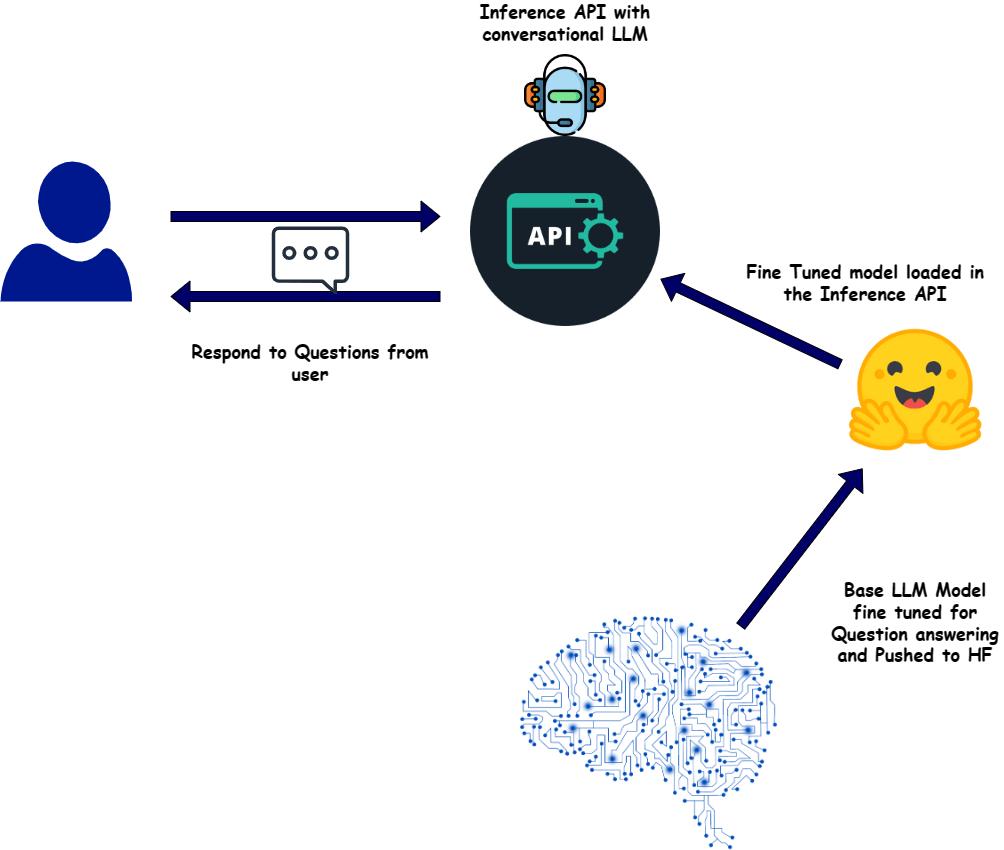

Let's first understand the LLM and its inference service which I will use as an example in this post. The image in this section shows the overall functional flow of the LLM inference service.

For the LLM, I am fine-tuning a base model hosted on Hugging Face: distilbert-base-cased-distilled-squad. I have fine-tuned the base model on the SQuAD dataset for the question-answering task, so the LLM behaves as a chatbot that answers questions based on a context passed as input. The fine-tuned model is pushed to Hugging Face, from where the Inference API pulls and loads it. You can find the fine-tuned model here. The flow can be explained as below:

- The client connects to the Inference API endpoint (this is different from the streaming endpoint) and sends a message to the LLM.

- The LLM processes the message and generates a response.

- The LLM responds with the answer as the API response.
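The request/response step described above can be sketched in a few lines. This is only an illustration, assuming the model is loaded through the `transformers` question-answering pipeline; the function names, the response shape, and the use of the base model ID in place of the fine-tuned one are assumptions, not the code from the repo.

```python
from typing import Any, Callable, Dict

# Assumption: the base model named in this post stands in for the fine-tuned one.
MODEL_ID = "distilbert-base-cased-distilled-squad"


def load_qa_pipeline(model_id: str = MODEL_ID) -> Callable[..., Dict[str, Any]]:
    """Load the question-answering pipeline lazily (downloads the model on first use)."""
    from transformers import pipeline  # third-party: pip install transformers

    return pipeline("question-answering", model=model_id)


def generate_answer(qa: Callable[..., Dict[str, Any]], question: str, context: str) -> Dict[str, Any]:
    """Run one question against one context and return a small JSON-friendly dict.

    The returned keys ("answer", "score") are a hypothetical response shape.
    """
    if not question.strip() or not context.strip():
        raise ValueError("both 'question' and 'context' are required")
    result = qa(question=question, context=context)
    return {"answer": result["answer"], "score": float(result["score"])}
```

Passing the pipeline in as an argument keeps `generate_answer` easy to exercise with a stub, without downloading the model.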

In this post's scenario, the Inference API is invoked by a Lambda function, which is triggered by the Websocket API. The client connects to the Websocket API and sends messages for the LLM. The Lambda function processes each message and forwards it to the Inference API, the LLM generates a response, and the Lambda function sends that response back to the client over the Websocket connection.

Overall Tech Architecture

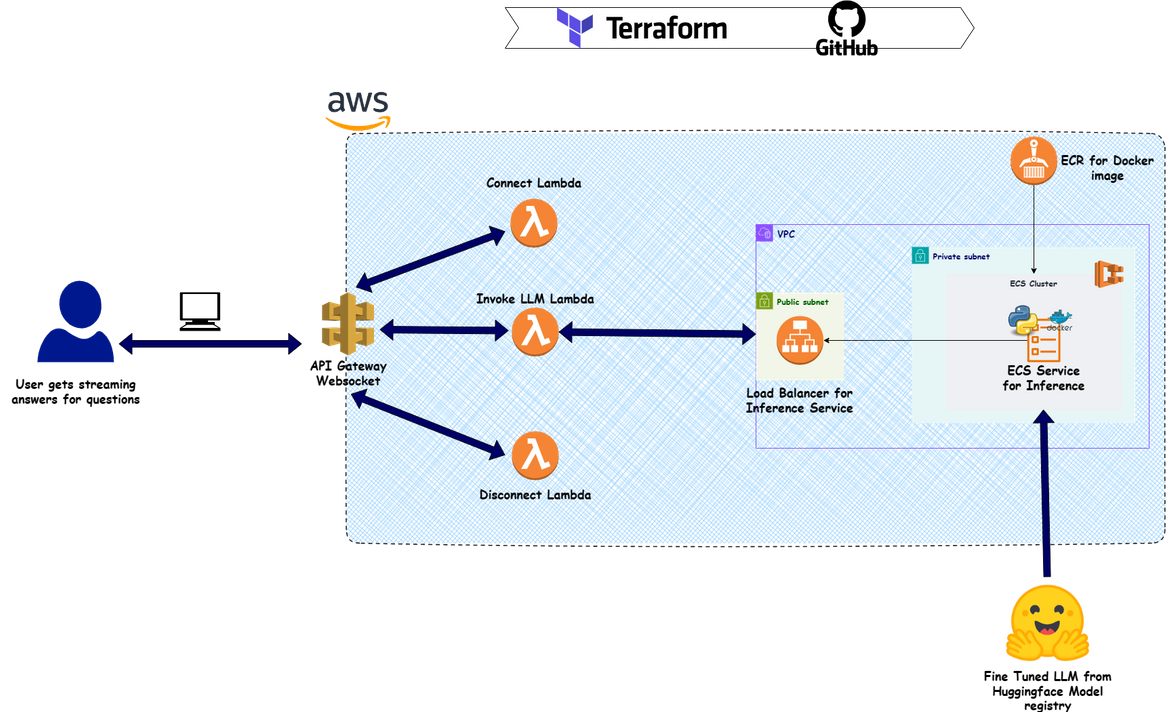

Now let's see the overall tech architecture which we will be using for this post. The image in this section shows the overall tech architecture of the LLM streaming service.

Let's go through each of the components.

- API Gateway (Websocket): This is a Websocket API which has been created on AWS API gateway. This API will be used to stream responses from the Inference API to the client. The client can connect to the Websocket API and send messages to the LLM model. The LLM model will generate responses to the messages and stream them back to the client over the Websocket connection.

- Websocket API Lambda Functions: These are the Lambda functions that process the requests on the Websocket API and provide the needed responses. There are three Lambda functions in this architecture:

  - Connect Lambda: This function is invoked when a client connects to the Websocket API. It initializes the connection and sends a welcome message to the client.

  - Disconnect Lambda: This function is invoked when a client disconnects from the Websocket API. It cleans up the connection and sends a goodbye message to the client.

  - Invoke LLM Function: This is the Lambda function that gets invoked when a client sends a request on the Websocket API along with the question text and context. It processes the input payload and invokes the backend Inference API with that payload. It then receives the LLM response and sends it back to the caller over the Websocket connection that is already open. This function is responsible for streaming the responses back to the client.

- Networking: These are the networking components which support the backend Inference API. The networking consists of components like:

  - VPC

  - Subnets

  - Security Groups

- Load Balancer: This is the Application Load Balancer which is used to route traffic to the Inference API. Technically this is also part of the networking components. This load balancer is responsible for providing an endpoint for the backend Inference API and routing inference requests to the API.

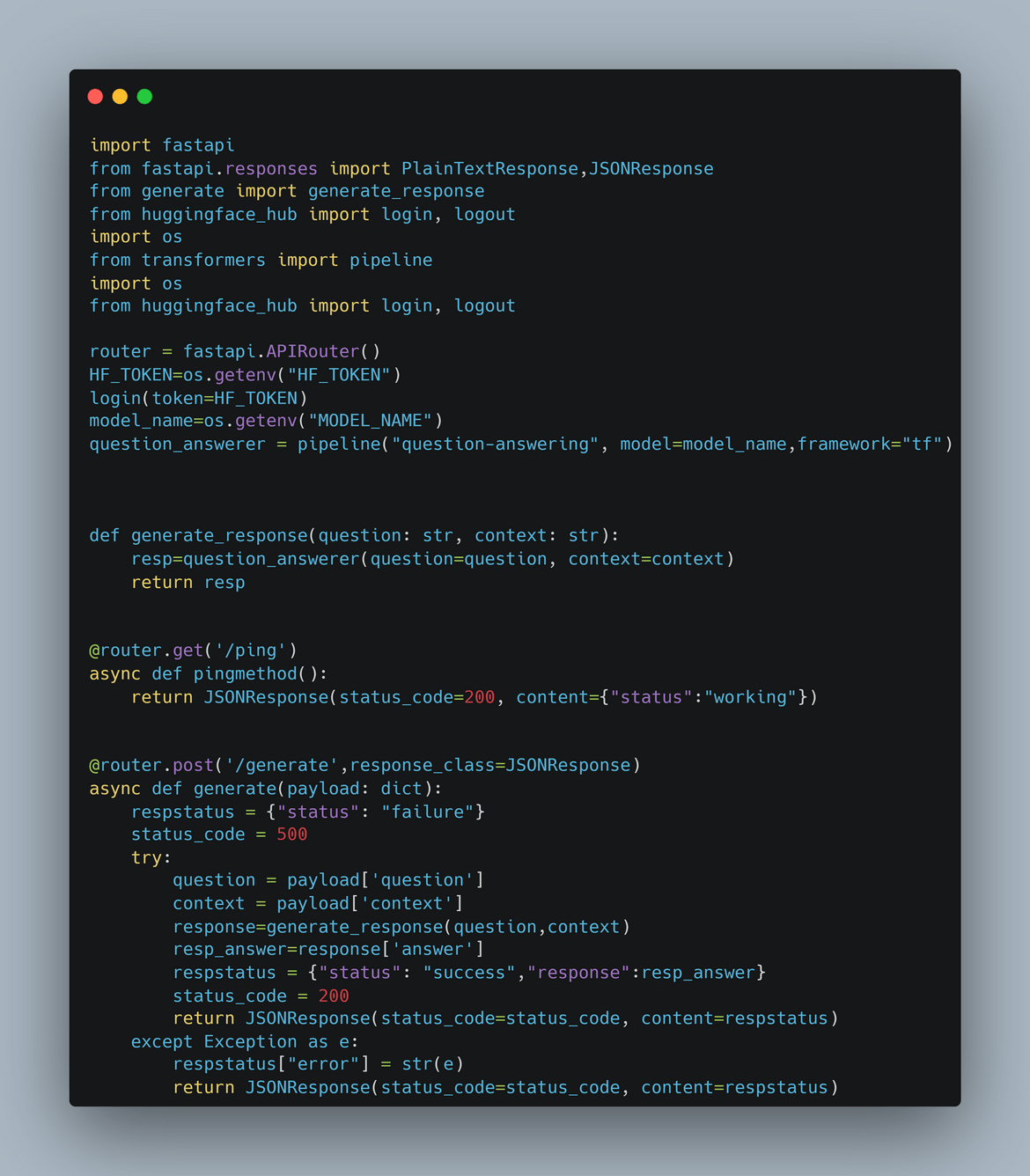

- Inference API: This is the backend Inference API which is responsible for processing the LLM requests. It loads the fine-tuned LLM and exposes the interface via FastAPI. It exposes a generate endpoint which takes the question and a context as input, and provides a response with the answer. The API is deployed as an ECS service on the ECS cluster. The service endpoint is exposed via the load balancer.

- ECS Cluster: This is the ECS cluster which hosts the Inference API service. The ECS cluster is responsible for running the Inference API service and scaling it as needed. The cluster runs inside a private subnet for security and the endpoints are exposed via the load balancer.

- IAM Roles: These are the IAM roles which provide permissions to the Lambda functions and the ECS Tasks to interact with other AWS services. The different roles which are created:

  - Lambda Execution Role: This role provides permissions to the Lambda functions to interact with other AWS services like API Gateway, ECS, CloudWatch, etc.

  - ECS Task Execution Role: This role provides permissions to the ECS Tasks to interact with other AWS services like S3, CloudWatch, etc.

  - API Gateway Execution Role: This role provides permissions to the API Gateway to invoke the Lambda functions.

- ECR: This is the Elastic Container Registry which hosts the Docker image for the Inference API. The Docker image is pulled by the ECS Tasks to run the Inference API service.

- Hugging Face model repo: This is the Hugging Face model repository which hosts the fine-tuned LLM. The model is pulled by the Inference API service, which loads it via the transformers package and uses it to generate responses. The fine-tuned model is pushed to this repo by the training process which fine-tuned the base model.
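To make the Invoke LLM function from the component list more concrete, here is a minimal sketch of such a handler. It assumes the incoming Websocket message body is JSON with `question` and `context`, that the load balancer URL is available in an environment variable (the name `INFERENCE_API_URL` is hypothetical), and that the reply is posted back through the API Gateway Management API using the default execute-api domain. It is not the handler from the repo.

```python
import json
import os
import urllib.request
from typing import Any, Callable, Dict, Optional


def call_inference_api(payload: Dict[str, str]) -> Dict[str, Any]:
    """POST the question and context to the backend generate endpoint."""
    # INFERENCE_API_URL is a hypothetical environment variable name.
    url = os.environ["INFERENCE_API_URL"].rstrip("/") + "/generate"
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=25) as resp:
        return json.loads(resp.read().decode("utf-8"))


def make_sender(event: Dict[str, Any]) -> Callable[[str, bytes], None]:
    """Build a function that pushes data to a connection on this Websocket API."""
    import boto3  # bundled with the Lambda Python runtime

    ctx = event["requestContext"]
    client = boto3.client(
        "apigatewaymanagementapi",
        endpoint_url=f"https://{ctx['domainName']}/{ctx['stage']}",
    )

    def send(connection_id: str, data: bytes) -> None:
        client.post_to_connection(ConnectionId=connection_id, Data=data)

    return send


def handler(event, context, infer=call_inference_api,
            send: Optional[Callable[[str, bytes], None]] = None):
    """Parse the message, call the Inference API, and push the answer to the client."""
    connection_id = event["requestContext"]["connectionId"]
    send = send or make_sender(event)
    try:
        body = json.loads(event.get("body") or "{}")
        payload = {"question": body["question"], "context": body["context"]}
    except (json.JSONDecodeError, KeyError, TypeError):
        error = {"error": "expected JSON with 'question' and 'context'"}
        send(connection_id, json.dumps(error).encode("utf-8"))
        return {"statusCode": 400}
    answer = infer(payload)
    send(connection_id, json.dumps(answer).encode("utf-8"))
    return {"statusCode": 200}
```

The `infer` and `send` parameters exist only so the flow can be exercised locally with stand-ins; in Lambda the defaults are used.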

Now that we have an understanding of all the components, let's move on to deploying all of these to AWS.

Deploy the infrastructure

Before we start the deployment, if you are following along from my repo, there are a few installations which need to be done on your local system:

- Install Terraform. You can download the binary from here.

- Install AWS CLI. You can download the binary from here.

- Terraform Cloud free account. Get a free account here and prep a workspace which will be used for this. Make sure to follow the steps from here to set up the local repo for use with Terraform Cloud.

Once the installations are done, clone the repo and follow the below steps to deploy the infrastructure.

Folder Structure

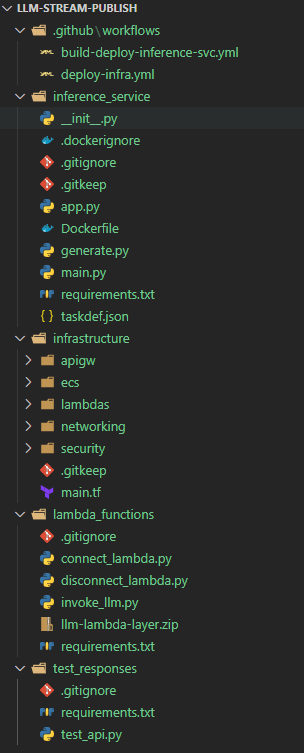

Let me first explain the folder structure of the repo.

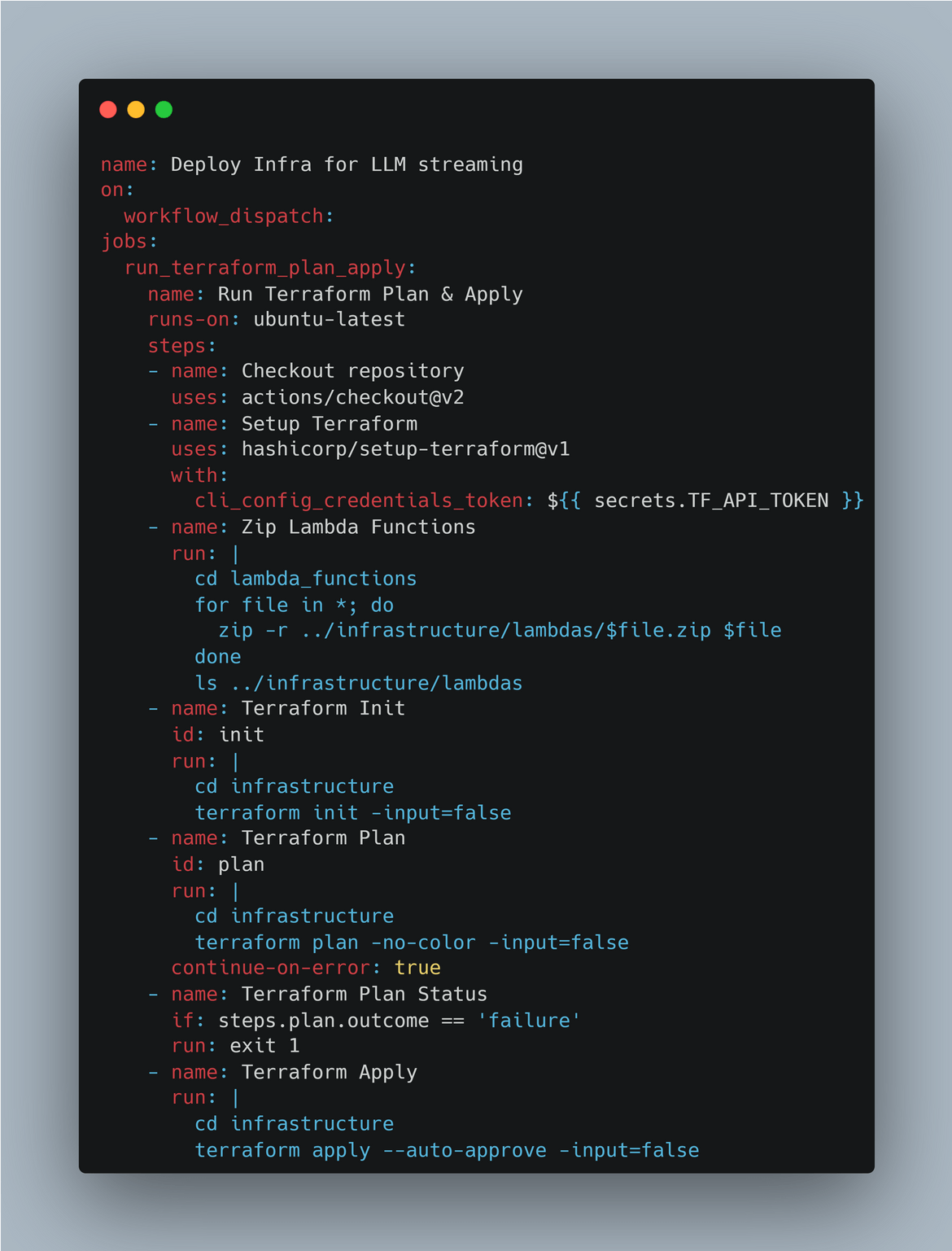

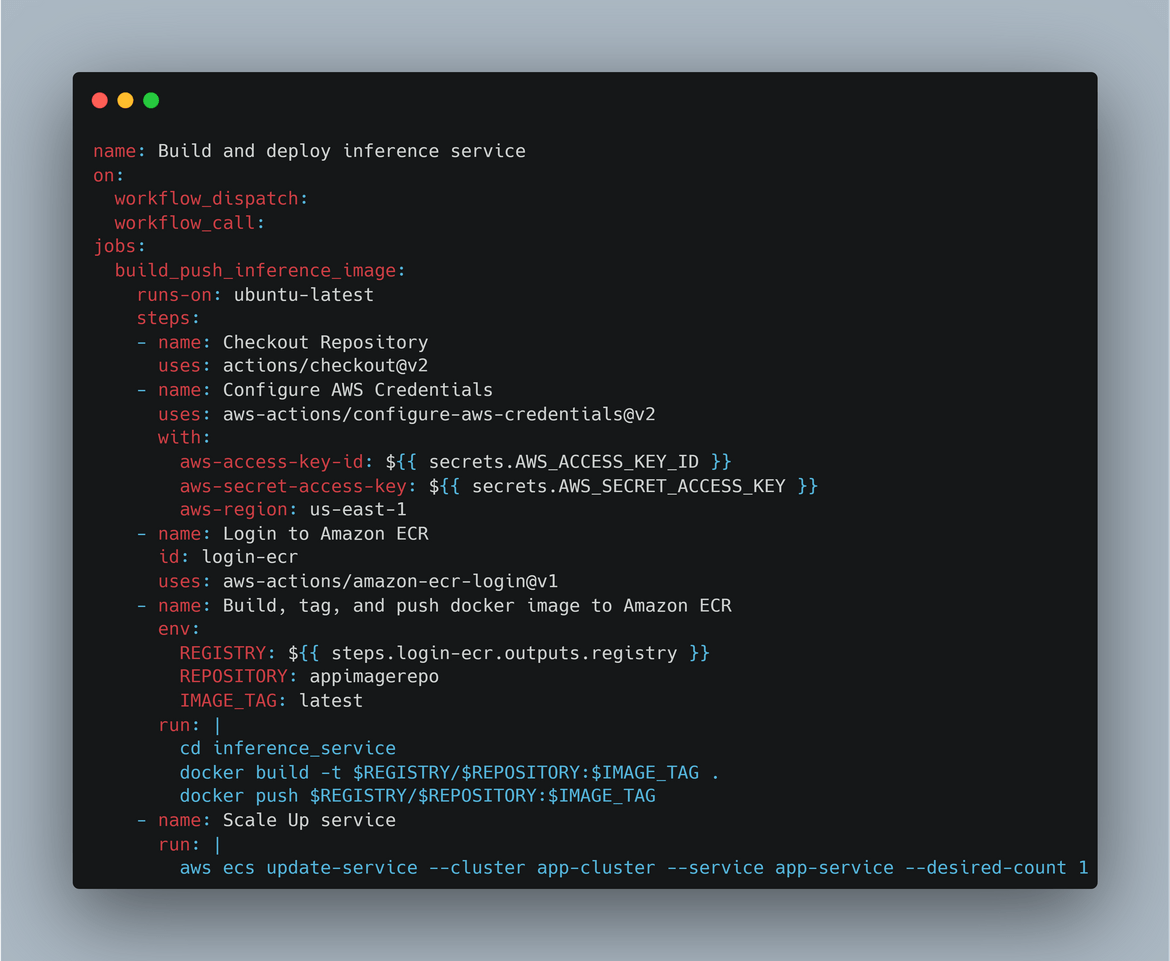

- .github/workflows: This folder contains the GitHub Actions workflow files. This includes workflows for deploying the infrastructure using Terraform and for deploying the Inference service to ECS.

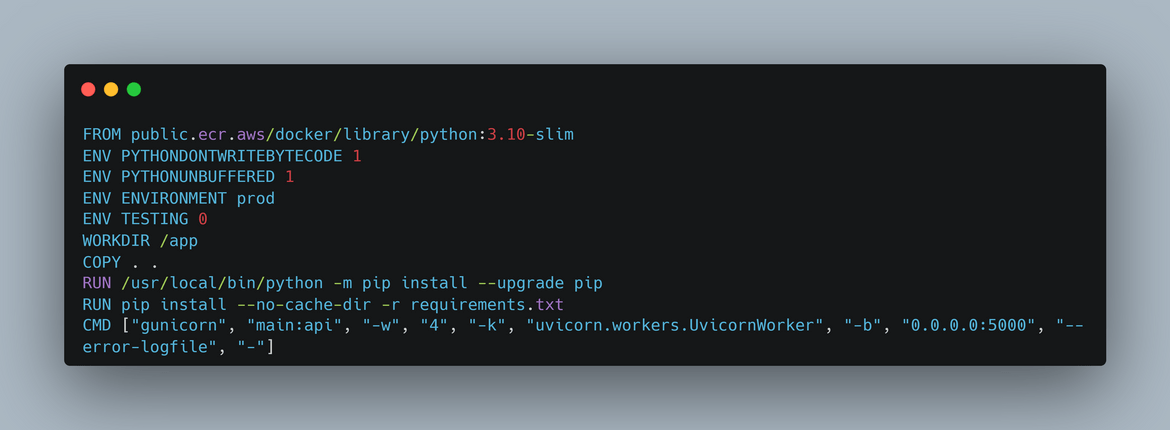

- inference_service: This folder contains the code for the Inference API service. The code is written in Python using the FastAPI framework. It also contains the Dockerfile which builds the image for the Inference API service

- infrastructure: This folder contains the Terraform code for deploying the infrastructure. It contains each of the modules for infrastructure components like VPC, Subnets, Security Groups, ECS Cluster, etc.

- lambdafunctions: This folder contains the code for the Lambda functions which process the requests on the Websocket API. Before deploying, copy these files to the infrastructure folder. This folder also contains the zip package which is used to create the Lambda layer for the invokellm Lambda function.

- test_responses: This folder contains the Python code which we will use to test the streaming responses from the Websocket API.

Components Deployment

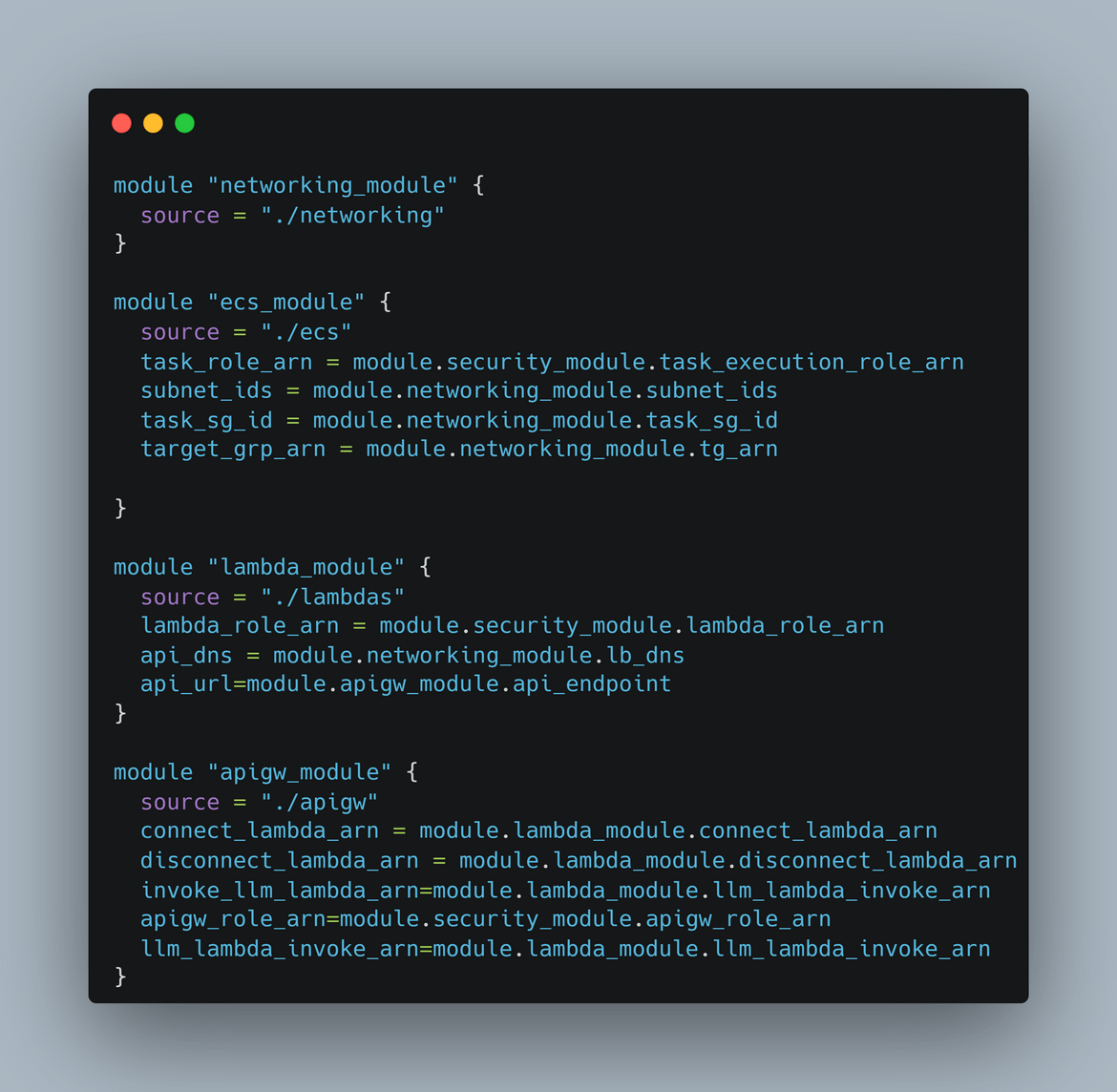

Now let's move on to deploying the stack. The infrastructure is deployed using Terraform. The components have been divided into different modules. Let's look through each of the modules.

networking: This module creates the VPC, Subnets and Security Groups for the infrastructure. The VPC is created with public and private subnets. It also creates the load balancer which is used to expose the Inference API service. The module is defined in the networking folder.

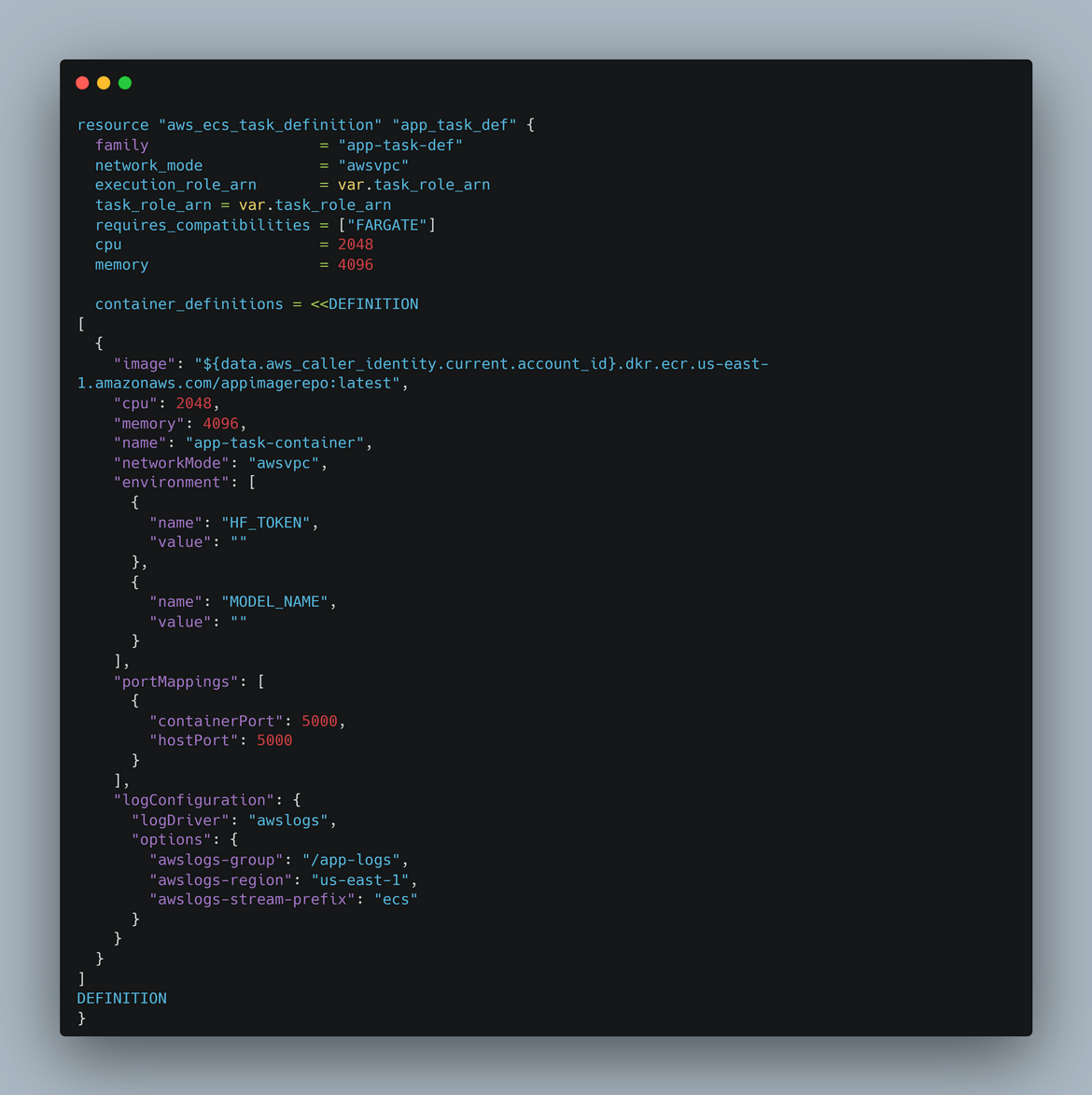

ecs: This module creates the ECS cluster which hosts the Inference API service. The ECS cluster is created with a private subnet for security. This also creates the task definition and the service for the Inference API. The module is defined in the ecs folder.

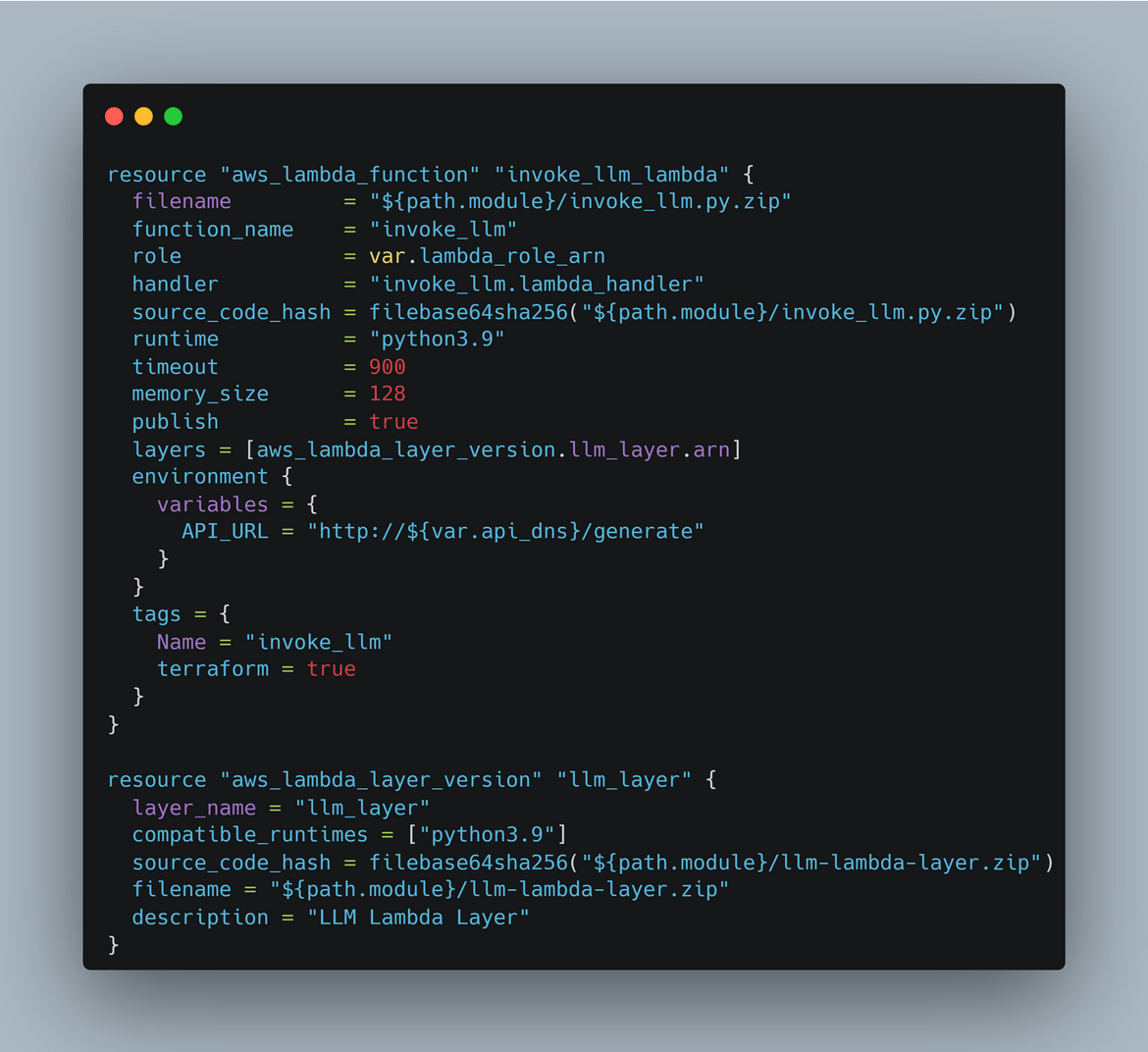

lambdas: This module creates the Lambda functions which process the requests on the Websocket API. The module is defined in the lambdas folder. The module creates three Lambda functions: connect, disconnect, and invokellm. The connect and disconnect functions are used to initialize and clean up the Websocket connection. The invokellm function is used to process the LLM requests and stream responses back to the client. The Lambda functions are created with the necessary permissions to interact with other AWS services. This module also creates the Lambda layer needed for the invokellm function to work.

The code for each Lambda function has been packaged into a separate zip file for Terraform to use when creating the function. The Lambda layer is also zipped and put in the same folder.
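For orientation, the connect and disconnect functions can be very small. The sketch below is an assumption about their general shape rather than the code in the repo: each one logs the connection ID and returns a success status, which is what API Gateway needs from the connect route before it completes the Websocket handshake.

```python
import logging
from typing import Any, Dict

logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)


def connect_handler(event: Dict[str, Any], context: Any) -> Dict[str, int]:
    """Accept a new Websocket connection by returning a 200 status."""
    connection_id = event["requestContext"]["connectionId"]
    logger.info("client connected: %s", connection_id)
    return {"statusCode": 200}


def disconnect_handler(event: Dict[str, Any], context: Any) -> Dict[str, int]:
    """Record that a client went away; any per-connection cleanup would go here."""
    connection_id = event["requestContext"]["connectionId"]
    logger.info("client disconnected: %s", connection_id)
    return {"statusCode": 200}
```

If you track connections (for example in a table), the connect handler is where you would store the ID and the disconnect handler is where you would remove it.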

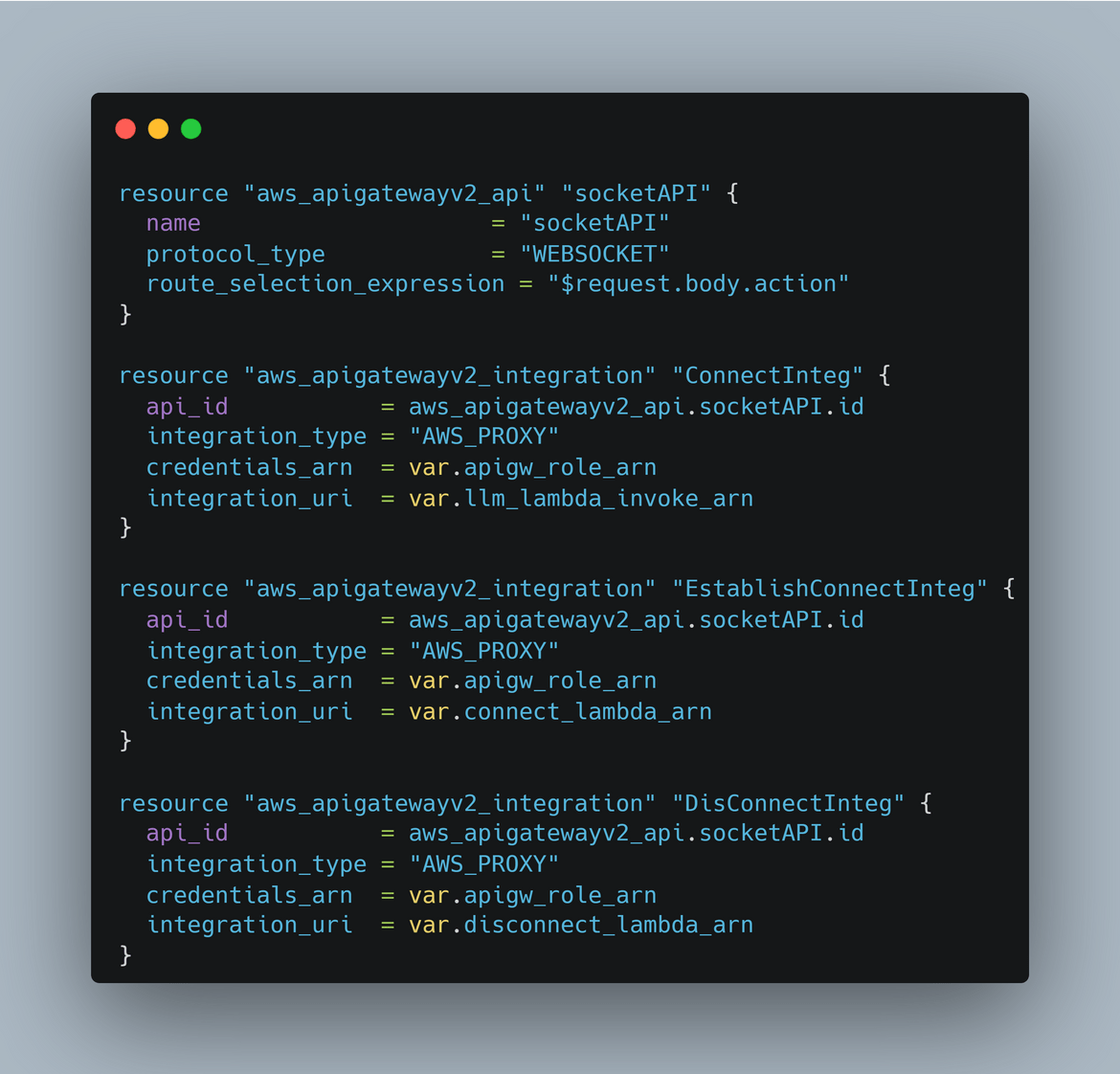

apigw: This module creates the Websocket API on AWS API Gateway. The module is defined in the apigw folder. The module creates the Websocket API with the necessary routes and integrations. It also creates the deployment and stage for the API. The respective Lambdas are integrated with the API.

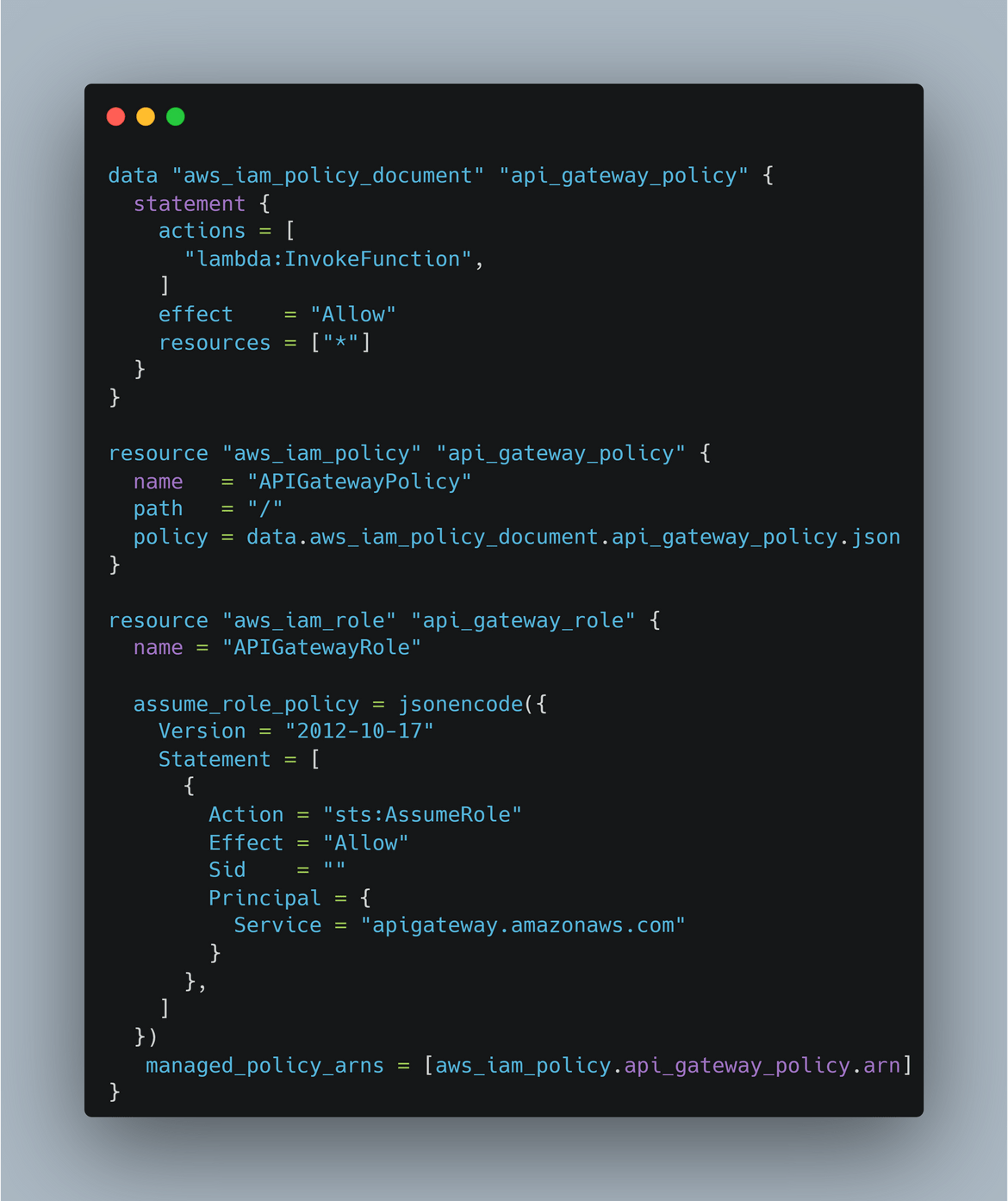

security: This module creates the IAM roles and policies needed for the infrastructure. The module is defined in the security folder. The module creates the IAM roles for the Lambda functions, ECS tasks, and API Gateway. It also creates the policies needed for the roles to interact with other AWS services.

Finally, all of these modules are tied together in the main.tf file in the infrastructure folder. The main.tf file calls each of the modules and passes the necessary variables to create the resources.

I am using Terraform Cloud, so the org details are defined in the main.tf file.

Apart from the infrastructure, the Inference API service is also deployed to ECS. To build that Docker image, a Dockerfile is created. It's in the inference_service folder.

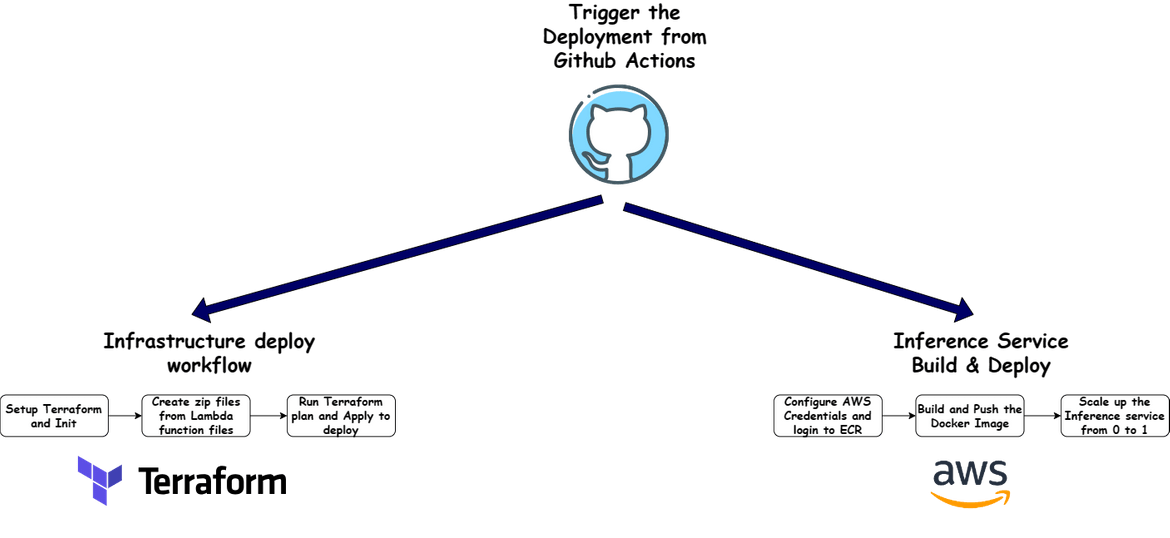

Now we have all the components defined. Next we will deploy them. The deployment is handled by two GitHub Actions workflows.

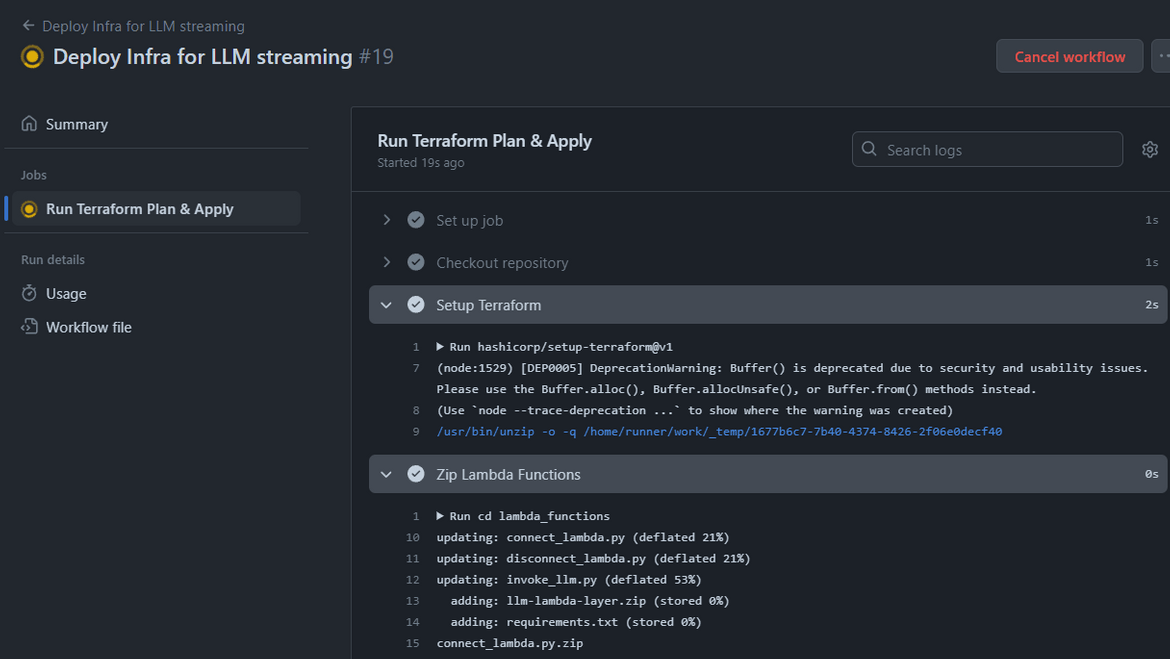

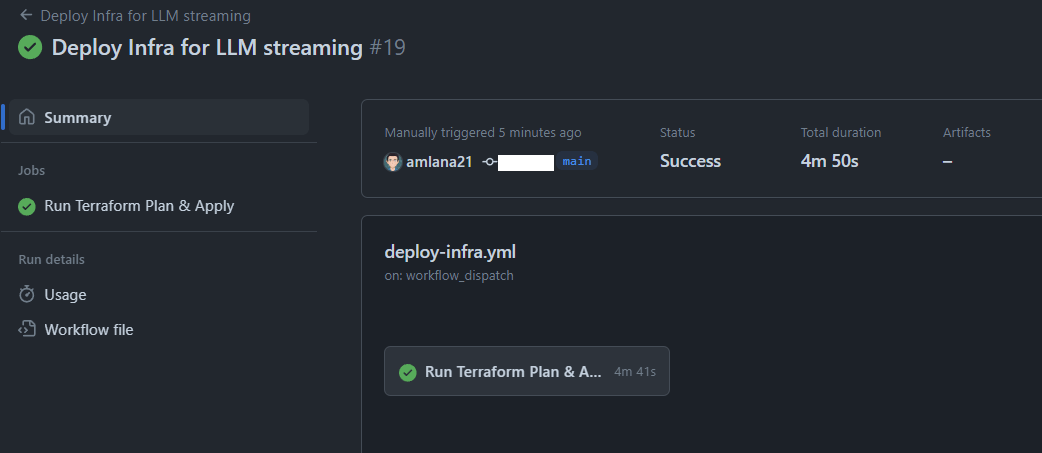

- Deploy Infrastructure: This workflow deploys the infrastructure to AWS using Terraform. The workflow is triggered from GitHub. On trigger, it configures Terraform and runs terraform apply. Once finished, you will have the infrastructure deployed on AWS.

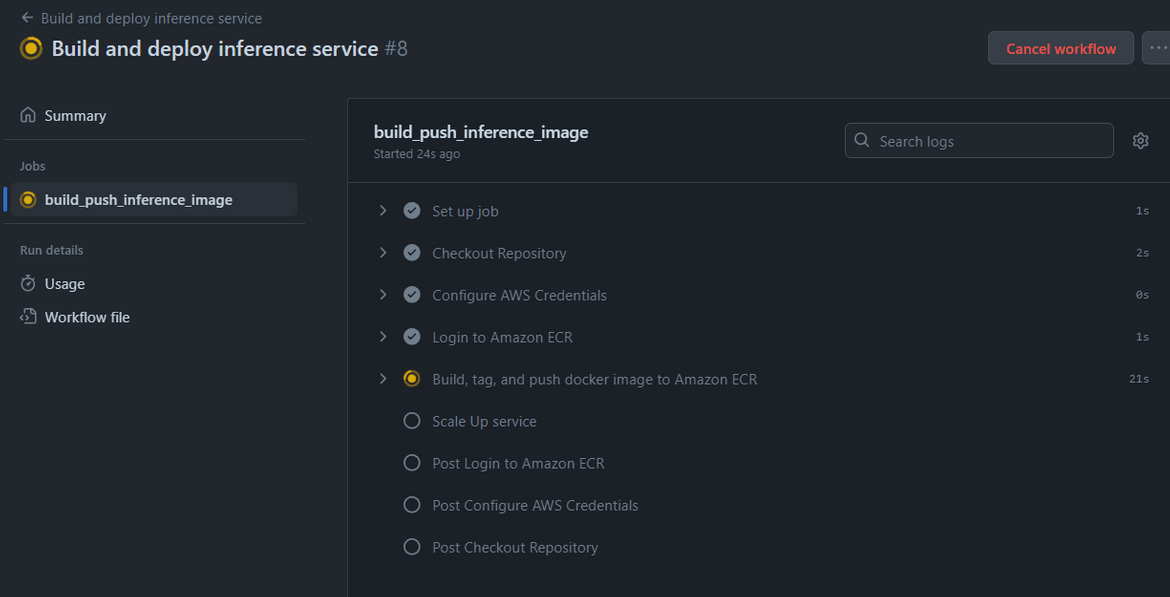

- Deploy Inference Service: This workflow deploys the Inference API service to ECS. The workflow builds the Docker image for the Inference API service and pushes it to ECR. It then deploys the service to ECS using the image from ECR. When the service is first created as part of the infrastructure, it is deployed with 0 replicas, so as the last step this workflow scales the ECS service up from 0 to 1 and the API becomes available.
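The scale-up step at the end of the second workflow amounts to a single ECS `UpdateService` call. As a rough illustration, here is how that call looks with boto3; the workflow in the repo may do this differently (for example through the AWS CLI), and the cluster and service names are whatever your Terraform run created.

```python
from typing import Any, Optional


def scale_service(cluster: str, service: str, desired_count: int = 1,
                  ecs_client: Optional[Any] = None) -> int:
    """Set the desired task count on an ECS service and return the new value."""
    if desired_count < 0:
        raise ValueError("desired_count must not be negative")
    if ecs_client is None:
        import boto3  # third-party: pip install boto3

        ecs_client = boto3.client("ecs")
    response = ecs_client.update_service(
        cluster=cluster,
        service=service,
        desiredCount=desired_count,
    )
    return response["service"]["desiredCount"]
```

Calling the same function with `desired_count=0` is a cheap way to stop the tasks when you are not testing, without destroying the rest of the stack.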

Now that we have all the components defined and the workflows ready, let's deploy the infrastructure.

Deploy!!!

We will follow this order to deploy the infrastructure:

- Deploy the infrastructure using the Deploy Infrastructure workflow

- Deploy the Inference API service using the Deploy Inference Service workflow

Before running the workflows, make sure that:

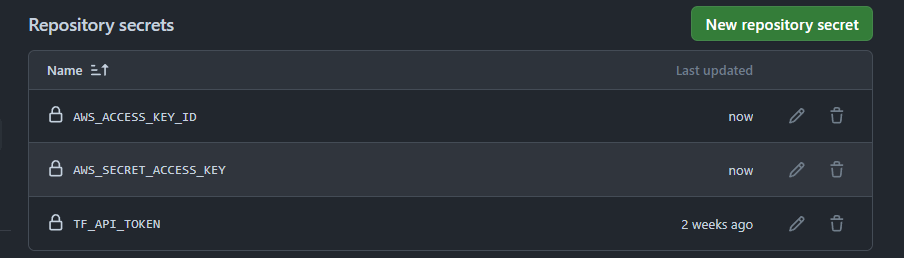

- The AWS access keys are entered as secrets on the GitHub repo. You also need to set the Terraform Cloud API token as a secret, since I am using Terraform Cloud for deploying. You won't need it if you are not using Terraform Cloud.

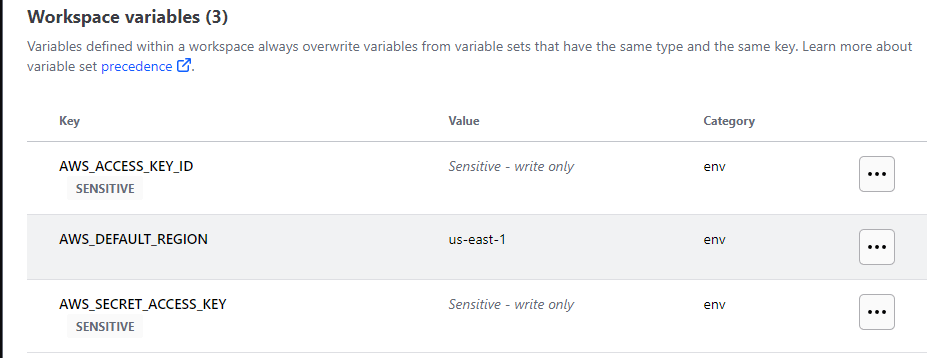

- Set the access keys as variables on the Terraform cloud workspace.

Now let's run the workflows. First run the Deploy Infrastructure workflow.

It will take some time for the workflow to complete. Wait for it to finish.

Let's view some of the resources on AWS.

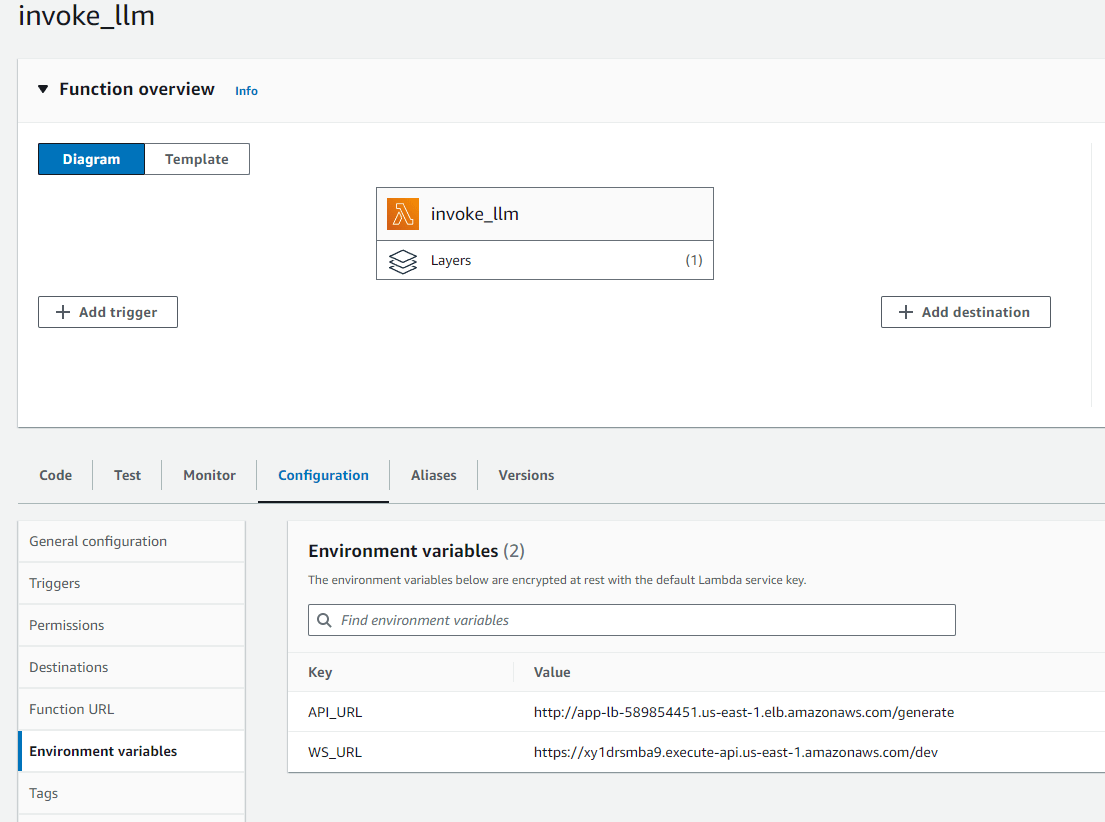

Lambda Function variable with API endpoint and API Gateway response endpoint

Now let's deploy the Inference API service. Run the Deploy Inference Service workflow.

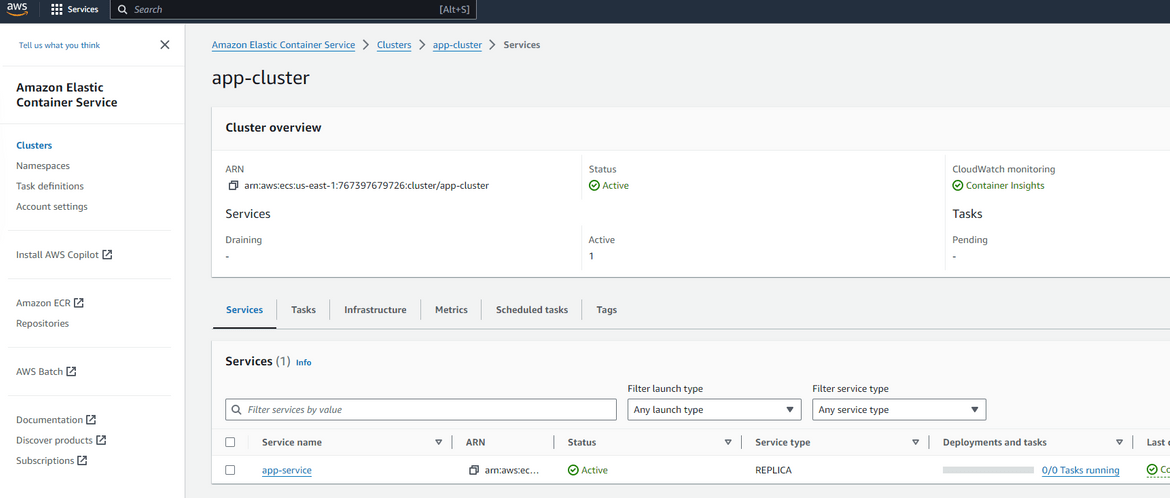

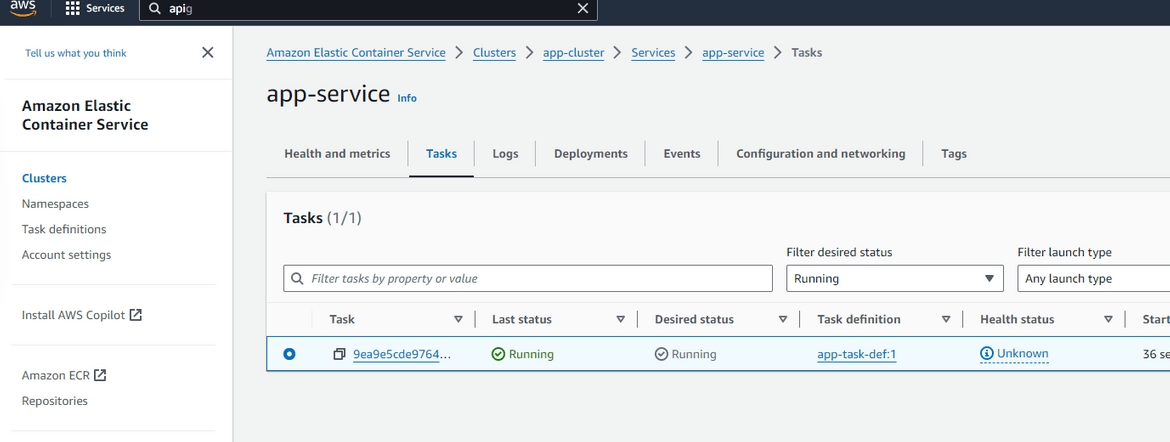

Let the workflow complete. Once done, the Inference API service will be deployed to ECS. Let's check the service on AWS.

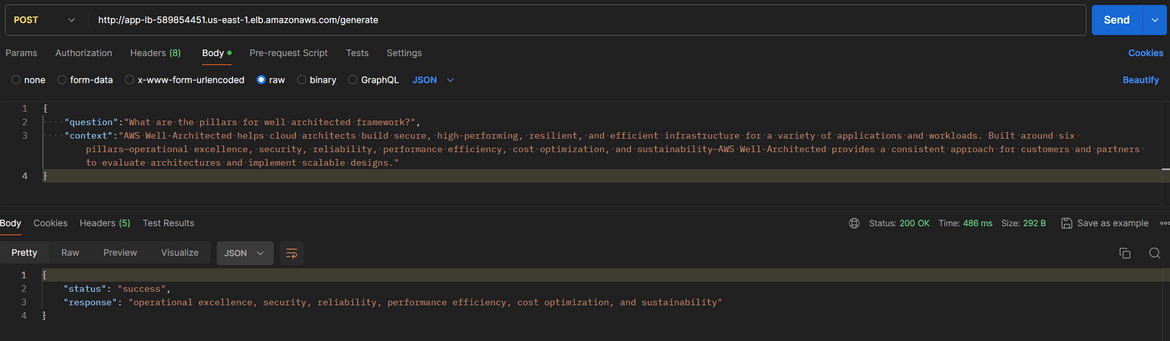

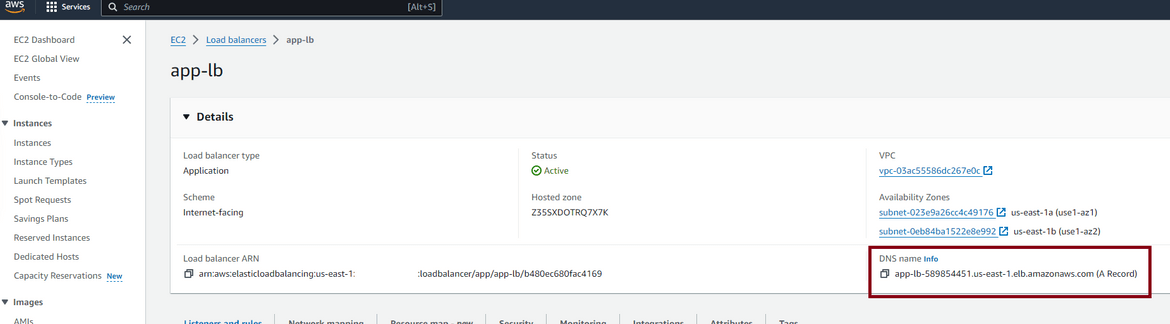

So the service is now running successfully. Let's test this API. Get the load balancer endpoint from the load balancer that was deployed for this service.

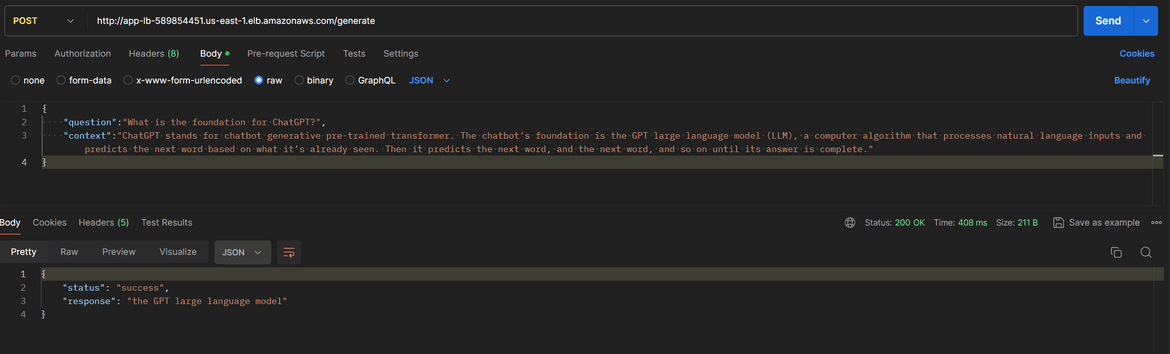

I am testing the API with Postman here. Send a POST request with these details:

- URL: http://<lb_endpoint>/generate

- body:

{ "question":"What is the foundation for ChatGPT?", "context":"ChatGPT stands for chatbot generative pre-trained transformer. The chatbot’s foundation is the GPT large language model (LLM), a computer algorithm that processes natural language inputs and predicts the next word based on what it’s already seen. Then it predicts the next word, and the next word, and so on until its answer is complete." }

Send the request. It should respond with the answer.
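If you prefer a script over Postman, the same request can be sent with the Python standard library. This is a small sketch under the assumption that the generate endpoint accepts a JSON body with `question` and `context` and returns JSON; the helper names are mine, and `<lb_endpoint>` is still the DNS name of your load balancer.

```python
import json
import urllib.request
from typing import Any, Dict


def build_generate_request(lb_endpoint: str, question: str, context: str) -> urllib.request.Request:
    """Build the POST request for http://<lb_endpoint>/generate."""
    url = f"http://{lb_endpoint.strip('/')}/generate"
    body = json.dumps({"question": question, "context": context}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(lb_endpoint: str, question: str, context: str, timeout: float = 30.0) -> Dict[str, Any]:
    """Send the request and decode the JSON response from the Inference API."""
    request = build_generate_request(lb_endpoint, question, context)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `ask("<lb_endpoint>", "What is the foundation for ChatGPT?", "<the context paragraph>")` sends the same payload as the Postman request above.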

So our API is now ready, and we can test our Websocket API. Let's move on to the next section.

Let's test it

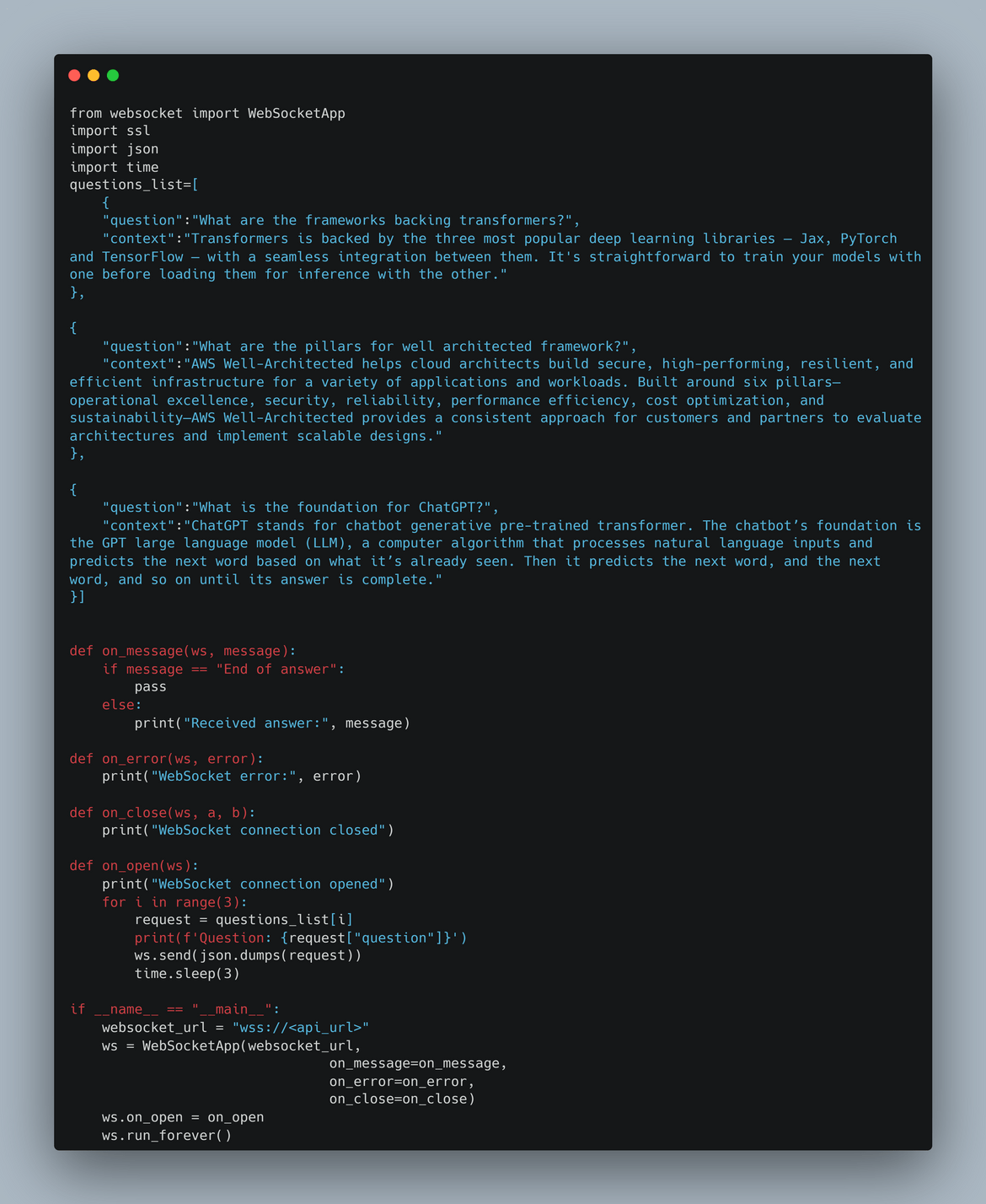

To test the Websocket API, I have created a sample code which will stream 3 questions one after another to the API over the same open Websocket connection and get the responses back. The code is in the test_responses folder.

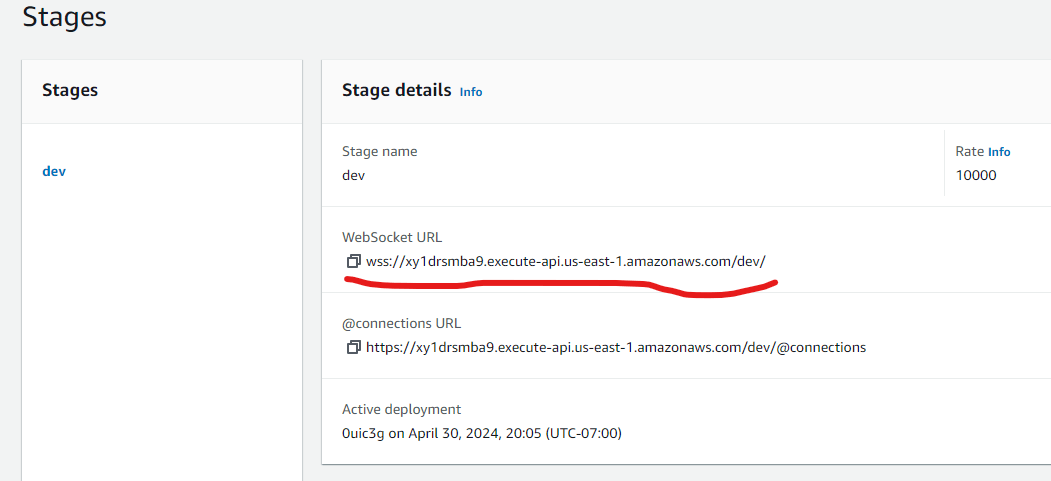

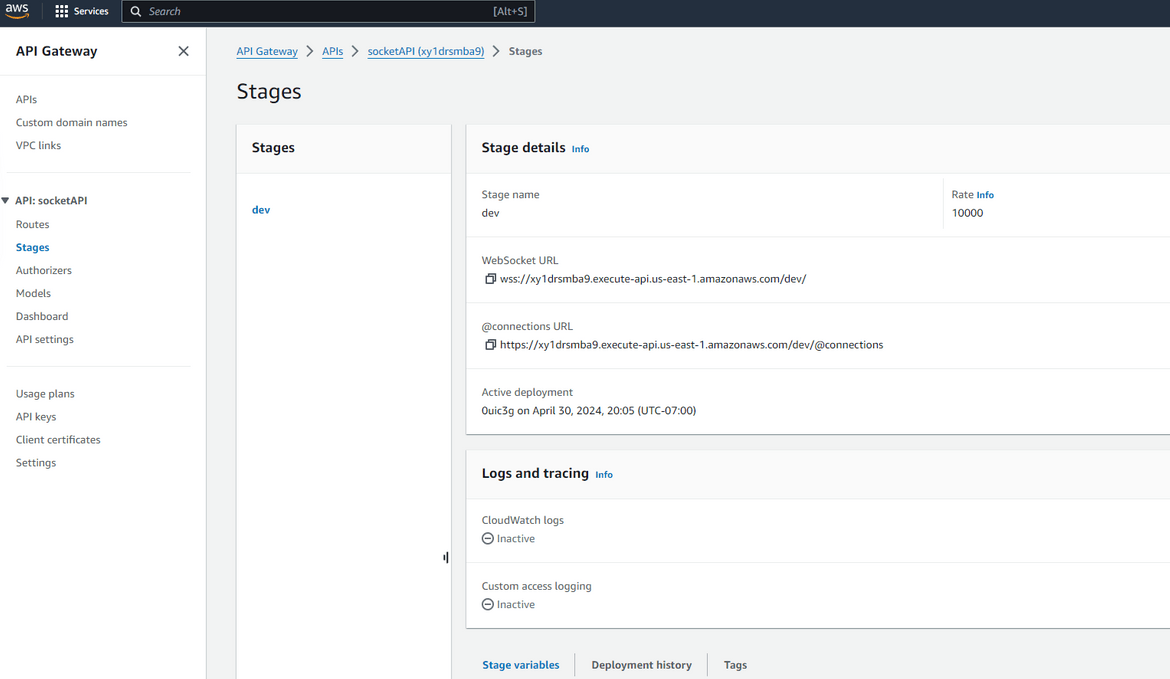

Before you start the test, update the Websocket URL in the code with the API Gateway Websocket URL. You can get this from the API Gateway console.
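For reference, API Gateway Websocket APIs on the default domain follow a predictable URL pattern, so the value shown in the console can also be assembled from the API ID, region, and stage. The helper below is a small sketch of that pattern (the function names are mine); it does not apply if you have attached a custom domain name.

```python
def websocket_url(api_id: str, region: str, stage: str) -> str:
    """URL that clients connect to, e.g. from the test client."""
    return f"wss://{api_id}.execute-api.{region}.amazonaws.com/{stage}"


def callback_url(api_id: str, region: str, stage: str) -> str:
    """HTTPS endpoint that a backend uses to post messages back to connected clients."""
    return f"https://{api_id}.execute-api.{region}.amazonaws.com/{stage}"
```

The first URL goes into the test client; the second is the kind of response endpoint that the Lambda function needs in order to send data to an open connection.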

Follow these steps to run the test client

- Install the required packages. Run the below command to install the required packages:

pip install -r requirements.txt

- Run the test client. Run the below command to start the test client:

python test_api.py
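To give an idea of what such a test client does, here is a minimal sketch that sends several questions over one open connection and waits for each answer. It is not the `test_api.py` from the repo: it assumes the third-party `websockets` package, and the `action` field with the value `invokellm` is a hypothetical route key that must match whatever route selection your Websocket API actually uses.

```python
import json
from typing import Dict, List

# Hypothetical route key; replace it with the route configured on your API.
ROUTE_ACTION = "invokellm"


def build_messages(items: List[Dict[str, str]], action: str = ROUTE_ACTION) -> List[str]:
    """Turn question/context pairs into JSON text frames for the Websocket API."""
    return [json.dumps({"action": action, **item}) for item in items]


async def run_client(url: str, items: List[Dict[str, str]]) -> List[str]:
    """Send each question over the same connection and collect one reply per question."""
    import websockets  # third-party: pip install websockets

    answers: List[str] = []
    async with websockets.connect(url) as ws:
        for message in build_messages(items):
            await ws.send(message)
            answers.append(await ws.recv())
    return answers
```

You would run it with `asyncio.run(run_client(url, items))`, where `url` is the API Gateway Websocket URL and `items` is a list of dictionaries with `question` and `context` keys.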

These are the questions and the contexts which were asked

{

"question":"What are the frameworks backing transformers?",

"context":"Transformers is backed by the three most popular deep learning libraries — Jax, PyTorch and TensorFlow — with a seamless integration between them. It's straightforward to train your models with one before loading them for inference with the other."

},

{

"question":"What are the pillars for well architected framework?",

"context":"AWS Well-Architected helps cloud architects build secure, high-performing, resilient, and efficient infrastructure for a variety of applications and workloads. Built around six pillars—operational excellence, security, reliability, performance efficiency, cost optimization, and sustainability—AWS Well-Architected provides a consistent approach for customers and partners to evaluate architectures and implement scalable designs."

},

{

"question":"What is the foundation for ChatGPT?",

"context":"ChatGPT stands for chatbot generative pre-trained transformer. The chatbot’s foundation is the GPT large language model (LLM), a computer algorithm that processes natural language inputs and predicts the next word based on what it’s already seen. Then it predicts the next word, and the next word, and so on until its answer is complete."

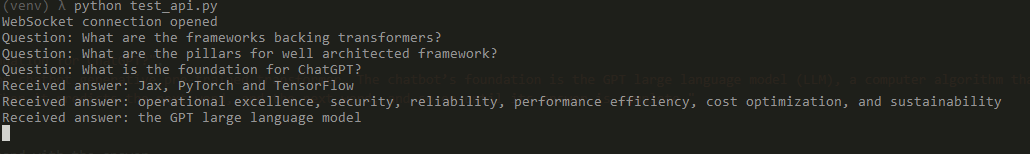

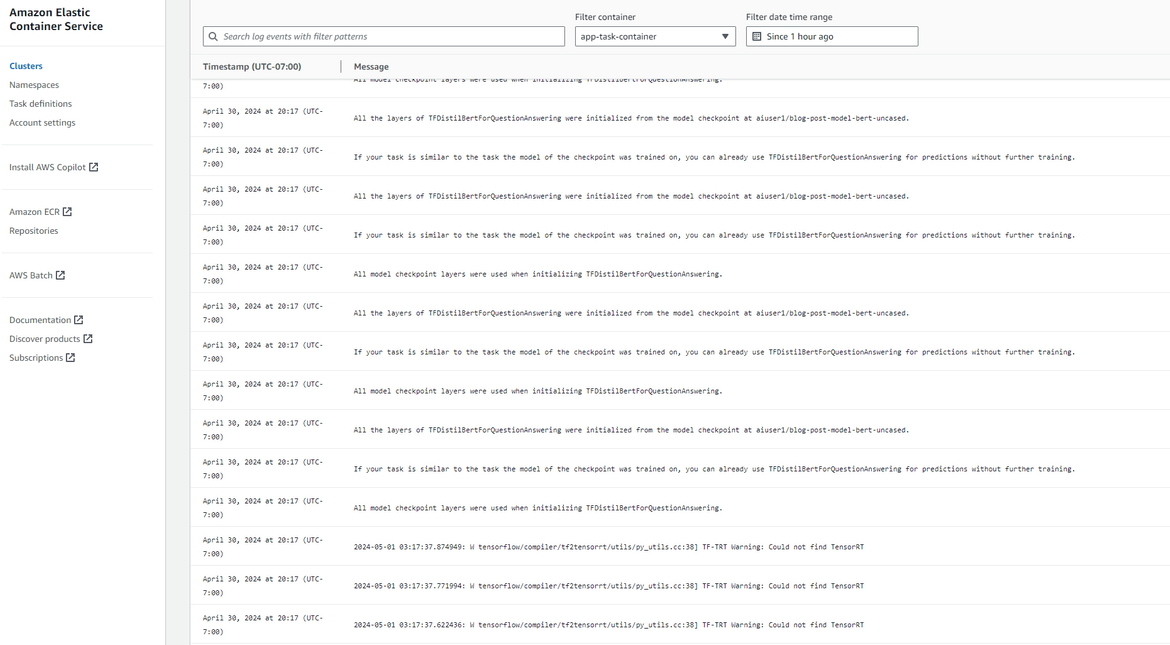

}As we see above, the Websocket API responds with the answers from the Inference API and streams back to the client. You can close the connection by hitting Ctrl+C. We can see the LLM logs on the Inference API logs

So the Websocket API is working as expected. The LLM responses are being streamed back to the client over the Websocket connection. That concludes testing the API. Go ahead and destroy the infrastructure if you are done with it so that you don’t incur any costs.

Conclusion

In this post, I explained how to stream LLM responses using AWS API Gateway Websockets and Lambda. We used AWS API Gateway to create a Websocket API which was used to stream responses from a backend LLM inference service to the client. I hope I was able to explain the steps properly and that it helps with your learning. If you have any questions or feedback, please leave a comment below or contact me from the Contact page. Thank you for reading!